CUDA Application Design and Form: A Comprehensive How-to Guide

Understanding CUDA programming

CUDA, or Compute Unified Device Architecture, is a parallel computing platform and programming model developed by NVIDIA. It enables developers to leverage the power of NVIDIA GPUs to accelerate computing applications beyond traditional CPU capabilities. With CUDA, programmers can utilize the GPU's massively parallel processing power to perform complex calculations and data processing more efficiently and quickly.

The key benefits of using CUDA for application design include significantly improved performance due to parallel processing, easier access to hardware functionality, and an extensive ecosystem of libraries and tools tailored for various applications. This enables individuals and teams to push the limits of computational tasks while maintaining effective resource use.

The significance of application design in CUDA

Designing efficient GPU applications is critical for maximizing performance and usability. A well-structured application can reduce execution time, optimize memory usage, and enhance user experience. For instance, poorly designed applications may lead to bottlenecks, robbing the system of potential performance gains by limiting parallel execution.

When planning your CUDA application, keep in mind that its design directly affects scalability and maintainability. A solid design covers not just the algorithms used but also how data flows between host and device, which largely determines how effective the finished project will be.

Key components of CUDA application design

A robust CUDA application architecture begins with understanding its structural components. The architecture can be broken down into the host, which is typically the CPU and its memory, and the device, which refers to the GPU and its onboard memory. The communication between these two is vital for performance.

Choosing the right algorithm for GPU processing is another fundamental aspect. Developers must consider factors such as the inherent parallelism of an algorithm and how well it maps to the CUDA architecture. Examples of commonly used algorithms in CUDA include matrix multiplication and image processing filters, both of which can drastically benefit from GPU acceleration.
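Matrix multiplication maps well to the GPU because every output element can be computed independently. The following is a minimal sketch, not a tuned implementation: it assumes square, row-major matrices already resident in device-accessible memory, and the names `matMulNaive` and `launchMatMulNaive` are illustrative rather than taken from any library.

```cuda
#include <cuda_runtime.h>

// Naive matrix multiply: C = A * B for row-major n x n matrices.
// One thread computes one element of C.
__global__ void matMulNaive(const float *A, const float *B, float *C, int n) {
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    int col = blockIdx.x * blockDim.x + threadIdx.x;
    if (row < n && col < n) {
        float sum = 0.0f;
        for (int k = 0; k < n; ++k) {
            sum += A[row * n + k] * B[k * n + col];
        }
        C[row * n + col] = sum;
    }
}

// Hypothetical launcher: dA, dB and dC must point to device-accessible memory.
// Returns the launch status; the kernel itself runs asynchronously.
cudaError_t launchMatMulNaive(const float *dA, const float *dB, float *dC, int n) {
    dim3 threads(16, 16);  // 256 threads per block, arranged as a 2D tile
    dim3 blocks((n + threads.x - 1) / threads.x,
                (n + threads.y - 1) / threads.y);
    matMulNaive<<<blocks, threads>>>(dA, dB, dC, n);
    return cudaGetLastError();
}
```

The 2D grid mirrors the shape of the output matrix, which is what "mapping well to the CUDA architecture" means in practice here. A production version would normally tile the inputs through shared memory or call cuBLAS instead.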

Data management strategies play a crucial role in how efficiently your application runs. Effective memory management entails not only utilizing the different types of memory available on the GPU (Global, Shared, Texture, etc.) but also optimizing data transfer between the host and device to minimize latency.
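As one illustration of using shared memory alongside global memory, the sketch below sums an array with a per-block reduction. It assumes a block size that is a power of two, and it omits error checking for brevity; `blockSum`, `sumOnGpu` and `kBlockSize` are hypothetical names chosen for this example.

```cuda
#include <vector>
#include <cuda_runtime.h>

constexpr int kBlockSize = 256;  // must be a power of two for the loop below

// Each block loads up to kBlockSize elements from global memory into shared
// memory, reduces them there, and writes a single partial sum.
__global__ void blockSum(const float *in, float *partial, int n) {
    __shared__ float tile[kBlockSize];
    int tid = threadIdx.x;
    int i = blockIdx.x * blockDim.x + tid;
    tile[tid] = (i < n) ? in[i] : 0.0f;
    __syncthreads();

    // Tree reduction: halve the number of active threads each step.
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (tid < stride) tile[tid] += tile[tid + stride];
        __syncthreads();
    }
    if (tid == 0) partial[blockIdx.x] = tile[0];
}

// Hypothetical host helper: copies hostIn to the device, reduces it per block,
// and adds up the per-block partial sums on the CPU.
// Return codes are ignored here to keep the sketch short.
float sumOnGpu(const float *hostIn, int n) {
    if (n <= 0) return 0.0f;
    const int blocks = (n + kBlockSize - 1) / kBlockSize;
    float *dIn = nullptr, *dPartial = nullptr;
    cudaMalloc(&dIn, n * sizeof(float));
    cudaMalloc(&dPartial, blocks * sizeof(float));
    cudaMemcpy(dIn, hostIn, n * sizeof(float), cudaMemcpyHostToDevice);

    blockSum<<<blocks, kBlockSize>>>(dIn, dPartial, n);

    std::vector<float> partial(blocks);
    cudaMemcpy(partial.data(), dPartial, blocks * sizeof(float),
               cudaMemcpyDeviceToHost);
    cudaFree(dIn);
    cudaFree(dPartial);

    float total = 0.0f;
    for (float p : partial) total += p;
    return total;
}
```

Each input element is read from global memory once; all intermediate additions happen in on-chip shared memory. Only one float per block travels back to the host, which also keeps the device-to-host transfer small.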

Essential tools and software for designing CUDA applications

An appropriate development environment is critical when designing CUDA applications. Popular IDEs such as Visual Studio, Eclipse, and JetBrains CLion offer robust support for CUDA development. Coupled with the NVIDIA CUDA Toolkit, these environments provide the necessary tools and libraries for efficient coding.

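Debugging and profiling tools such as the NVIDIA Nsight family (Nsight Systems for whole-application timelines, Nsight Compute for per-kernel analysis) and the older Visual Profiler are indispensable for performance optimization. They help developers locate bottlenecks and inefficiencies so that tuning effort goes where it matters. Note that NVIDIA has deprecated Visual Profiler in favor of the Nsight tools, so new projects should generally start with Nsight.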

Step-by-step guide to designing your first CUDA application

To start designing your first CUDA application, you first need to set up your development environment. Install the CUDA Toolkit by following the guidelines provided by NVIDIA, ensuring your system meets the necessary requirements for compatibility.
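A quick way to confirm that the toolkit and driver are working is to ask the CUDA runtime which devices it can see. The sketch below uses the runtime calls `cudaGetDeviceCount` and `cudaGetDeviceProperties`; the wrapper name `listCudaDevices` is an assumption made for this example.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Prints basic properties of every visible CUDA device.
// Returns the number of devices, or -1 if the runtime reports an error
// (for example, when no driver or no CUDA-capable GPU is present).
int listCudaDevices() {
    int count = 0;
    cudaError_t err = cudaGetDeviceCount(&count);
    if (err != cudaSuccess) {
        std::fprintf(stderr, "cudaGetDeviceCount failed: %s\n",
                     cudaGetErrorString(err));
        return -1;
    }
    for (int d = 0; d < count; ++d) {
        cudaDeviceProp prop;
        if (cudaGetDeviceProperties(&prop, d) != cudaSuccess) continue;
        std::printf("Device %d: %s, compute capability %d.%d, "
                    "%.1f GiB global memory, %d multiprocessors\n",
                    d, prop.name, prop.major, prop.minor,
                    prop.totalGlobalMem / (1024.0 * 1024.0 * 1024.0),
                    prop.multiProcessorCount);
    }
    return count;
}
```

Calling `listCudaDevices()` from `main` and seeing at least one device listed is a reasonable sign that the environment is ready for the steps that follow.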

Creating a simple CUDA kernel begins with writing your first kernel function, which typically looks something like this:

    __global__ void vectorAdd(float *A, float *B, float *C, int N) {
        int i = blockIdx.x * blockDim.x + threadIdx.x;
        if (i < N) C[i] = A[i] + B[i];
    }

This kernel adds two vectors on the GPU, showcasing basic CUDA syntax and structure.
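A kernel like `vectorAdd` does nothing until the host allocates device memory, copies the inputs over, launches the kernel, and copies the result back. The following is a minimal sketch of that host side; the helper name `vectorAddOnGpu` and the choice of 256 threads per block are assumptions for illustration.

```cuda
#include <vector>
#include <cuda_runtime.h>

// Same kernel as in the text: one thread adds one pair of elements.
__global__ void vectorAdd(float *A, float *B, float *C, int N) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < N) C[i] = A[i] + B[i];
}

// Hypothetical host helper: computes out = a + b on the GPU.
// Returns true if every CUDA call succeeded.
bool vectorAddOnGpu(const std::vector<float> &a, const std::vector<float> &b,
                    std::vector<float> &out) {
    if (a.size() != b.size()) return false;
    const int n = static_cast<int>(a.size());
    const size_t bytes = n * sizeof(float);
    out.assign(n, 0.0f);
    if (n == 0) return true;

    // 1. Allocate device buffers.
    float *dA = nullptr, *dB = nullptr, *dC = nullptr;
    bool ok = cudaMalloc(&dA, bytes) == cudaSuccess &&
              cudaMalloc(&dB, bytes) == cudaSuccess &&
              cudaMalloc(&dC, bytes) == cudaSuccess;

    // 2. Copy inputs from host to device.
    if (ok) {
        ok = cudaMemcpy(dA, a.data(), bytes, cudaMemcpyHostToDevice) == cudaSuccess &&
             cudaMemcpy(dB, b.data(), bytes, cudaMemcpyHostToDevice) == cudaSuccess;
    }

    // 3. Launch enough blocks to cover all n elements, then copy the result back.
    if (ok) {
        const int threads = 256;
        const int blocks = (n + threads - 1) / threads;
        vectorAdd<<<blocks, threads>>>(dA, dB, dC, n);
        ok = cudaGetLastError() == cudaSuccess &&
             cudaMemcpy(out.data(), dC, bytes, cudaMemcpyDeviceToHost) == cudaSuccess;
    }

    // 4. Release device memory (cudaFree accepts null pointers).
    cudaFree(dA);
    cudaFree(dB);
    cudaFree(dC);
    return ok;
}
```

The block count is rounded up so that the last, partially filled block still covers the tail of the array; the `if (i < N)` guard in the kernel keeps the extra threads from writing out of bounds.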

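To compile and run your CUDA application, use the NVIDIA compiler driver, nvcc. It separates host code from device code, compiles the device code for the GPU, and hands the host code to the system C++ compiler, producing a single executable. A typical invocation is nvcc vector_add.cu -o vector_add, where vector_add.cu is a placeholder for your own source file. Once compiled, run the program on a machine with a supported NVIDIA GPU and driver to validate both its results and its performance.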

Advanced techniques in CUDA application design

As you advance in CUDA application design, understanding memory access patterns becomes essential. Optimizing how memory is accessed avoids a common performance pitfall. For example, when the threads of a warp read adjacent addresses, the hardware coalesces those reads into a small number of memory transactions, which increases throughput; scattered accesses force many separate transactions and waste bandwidth.
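The difference between coalesced and uncoalesced access can be seen by comparing two copy kernels that do the same amount of work. This is a sketch for experimentation, with hypothetical kernel names; the actual speed difference depends on the GPU and should be measured rather than assumed.

```cuda
#include <cuda_runtime.h>

// Coalesced: consecutive threads in a warp read consecutive floats,
// so each warp's reads fall into a few contiguous memory segments.
__global__ void copyCoalesced(const float *in, float *out, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) out[i] = in[i];
}

// Strided: consecutive threads read elements `stride` apart (wrapping at n),
// so one warp's reads are spread over many separate memory segments.
// Writes are still coalesced; only the read pattern differs.
__global__ void copyStrided(const float *in, float *out, int n, int stride) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) {
        int j = static_cast<int>((static_cast<long long>(i) * stride) % n);
        out[i] = in[j];
    }
}
```

Timing both kernels on a large array, with a stride such as 32, is a simple way to observe the effect on a particular device.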

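Another critical technique is concurrent execution, both of kernels with each other and of kernels with data transfers. By issuing asynchronous copies and kernel launches in separate CUDA streams, and by using pinned (page-locked) host memory for those copies, developers can keep the GPU's copy engines and compute units busy at the same time, which improves resource usage and overall application performance.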
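One common way to overlap transfers and computation is to split the data into chunks and process each chunk in its own stream. The sketch below assumes the host buffer was allocated with `cudaMallocHost` (pinned memory), since pageable memory generally prevents the copies from overlapping; `scale`, `scaleWithStreams` and the choice of four streams are illustrative.

```cuda
#include <algorithm>
#include <cuda_runtime.h>

__global__ void scale(float *data, int n, float factor) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] *= factor;
}

// Hypothetical helper: multiplies every element of pinnedHost by factor,
// processing the array in chunks, one stream per chunk.
// pinnedHost is assumed to come from cudaMallocHost.
// Return codes of the individual async calls are not checked in this sketch.
bool scaleWithStreams(float *pinnedHost, int n, float factor) {
    if (n <= 0) return true;
    constexpr int kStreams = 4;
    cudaStream_t streams[kStreams];
    float *dData = nullptr;
    if (cudaMalloc(&dData, n * sizeof(float)) != cudaSuccess) return false;
    for (auto &s : streams) cudaStreamCreate(&s);

    const int chunk = (n + kStreams - 1) / kStreams;
    for (int s = 0; s < kStreams; ++s) {
        const int offset = s * chunk;
        const int count = std::min(chunk, n - offset);
        if (count <= 0) break;
        const size_t bytes = count * sizeof(float);
        // Copy in, compute, copy out: ordered within this stream,
        // but free to overlap with the work queued in other streams.
        cudaMemcpyAsync(dData + offset, pinnedHost + offset, bytes,
                        cudaMemcpyHostToDevice, streams[s]);
        scale<<<(count + 255) / 256, 256, 0, streams[s]>>>(dData + offset,
                                                           count, factor);
        cudaMemcpyAsync(pinnedHost + offset, dData + offset, bytes,
                        cudaMemcpyDeviceToHost, streams[s]);
    }

    // Wait for all streams to finish before the host reads the results.
    const bool ok = cudaDeviceSynchronize() == cudaSuccess;
    for (auto &s : streams) cudaStreamDestroy(s);
    cudaFree(dData);
    return ok;
}
```

Whether the chunks actually overlap depends on the device and on chunk size; a timeline view in a profiler is the usual way to confirm it.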

Leverage unified memory in CUDA applications for simplified memory management. Unified memory allows developers to treat CPU and GPU memory as a single address space, enabling automatic data migration and lessening the burden of manual memory management.
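With unified memory, a single pointer obtained from `cudaMallocManaged` is valid on both the CPU and the GPU, so the explicit `cudaMemcpy` calls disappear. The following is a minimal sketch; `addOne` and `unifiedMemoryDemo` are hypothetical names used only here.

```cuda
#include <cuda_runtime.h>

__global__ void addOne(int *data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1;
}

// Hypothetical demo: fills a managed array on the CPU, increments it on the
// GPU, and verifies the result on the CPU. Returns true if all values match.
bool unifiedMemoryDemo(int n) {
    int *data = nullptr;
    if (cudaMallocManaged(&data, n * sizeof(int)) != cudaSuccess) return false;

    for (int i = 0; i < n; ++i) data[i] = i;    // written by the CPU

    addOne<<<(n + 255) / 256, 256>>>(data, n);  // updated by the GPU

    // Kernel launches are asynchronous: synchronize before the CPU
    // touches the managed memory again.
    bool ok = cudaDeviceSynchronize() == cudaSuccess;

    for (int i = 0; ok && i < n; ++i) ok = (data[i] == i + 1);

    cudaFree(data);
    return ok;
}
```

The convenience comes at a cost that is worth measuring: data migrates on demand, so the first access from each side can be slower than an explicit bulk copy.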

Best practices in CUDA application development

Code organization and maintaining modularity are vital to effective CUDA application development. Structuring code into manageable, reusable components enhances readability, making it easier to debug and iterate upon. A well-structured project can greatly facilitate team collaboration and knowledge transfer.

Error handling in CUDA can be tricky, as many issues may arise from mismanagement of resources or incorrect kernel launches. Employing systematic error checking after each CUDA call and using tools like CUDA-GDB for debugging are critical strategies to resolve common errors.
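Systematic error checking is usually done with a small macro wrapped around every runtime call. The sketch below shows one common pattern; the macro name `CUDA_CHECK` and the helper `fillOnGpu` are conventions chosen for this example, not part of the CUDA API, and exiting on error is only one possible policy.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Evaluates a CUDA runtime call and aborts with file and line on failure.
#define CUDA_CHECK(call)                                                   \
    do {                                                                   \
        cudaError_t err_ = (call);                                         \
        if (err_ != cudaSuccess) {                                         \
            std::fprintf(stderr, "CUDA error %s at %s:%d: %s\n",           \
                         cudaGetErrorName(err_), __FILE__, __LINE__,       \
                         cudaGetErrorString(err_));                        \
            std::exit(EXIT_FAILURE);                                       \
        }                                                                  \
    } while (0)

__global__ void fill(int *data, int n, int value) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] = value;
}

// Hypothetical helper: writes `value` into n elements of hostOut via the GPU,
// checking every step.
void fillOnGpu(int *hostOut, int n, int value) {
    int *dData = nullptr;
    CUDA_CHECK(cudaMalloc(&dData, n * sizeof(int)));

    fill<<<(n + 255) / 256, 256>>>(dData, n, value);
    // A kernel launch returns no status, so query it explicitly:
    CUDA_CHECK(cudaGetLastError());       // invalid launch configuration etc.
    CUDA_CHECK(cudaDeviceSynchronize());  // errors raised while the kernel ran

    CUDA_CHECK(cudaMemcpy(hostOut, dData, n * sizeof(int),
                          cudaMemcpyDeviceToHost));
    CUDA_CHECK(cudaFree(dData));
}
```

Checking both `cudaGetLastError()` and the result of a later synchronizing call matters because kernel launches are asynchronous: a failure inside the kernel otherwise surfaces at some unrelated later call.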

Performance benchmarking and testing

Effective performance measurement methods help ensure that applications meet required benchmarks. Utilize techniques such as timing kernel executions and comparing performance against baseline implementations to quantify improvements. These methods allow developers to iterate on their applications strategically.
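Kernel execution time is usually measured with CUDA events, which are recorded on the GPU's own timeline rather than the host clock. The following is a sketch under simple assumptions: a SAXPY kernel stands in for the code being benchmarked, the pointers are device-accessible, and `timeSaxpyMs` is a hypothetical helper name.

```cuda
#include <cuda_runtime.h>

// y = a * x + y, used here only as something to time.
__global__ void saxpy(int n, float a, const float *x, float *y) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) y[i] = a * x[i] + y[i];
}

// Hypothetical helper: runs saxpy once and returns the elapsed GPU time in
// milliseconds, or a negative value if something failed.
float timeSaxpyMs(int n, float a, const float *dX, float *dY) {
    cudaEvent_t start, stop;
    if (cudaEventCreate(&start) != cudaSuccess) return -1.0f;
    if (cudaEventCreate(&stop) != cudaSuccess) {
        cudaEventDestroy(start);
        return -1.0f;
    }

    cudaEventRecord(start);                      // enqueue start marker
    saxpy<<<(n + 255) / 256, 256>>>(n, a, dX, dY);
    cudaEventRecord(stop);                       // enqueue stop marker
    cudaEventSynchronize(stop);                  // wait until stop is reached

    float ms = -1.0f;
    if (cudaGetLastError() != cudaSuccess ||
        cudaEventElapsedTime(&ms, start, stop) != cudaSuccess) {
        ms = -1.0f;
    }

    cudaEventDestroy(start);
    cudaEventDestroy(stop);
    return ms;
}
```

For a meaningful benchmark, run the kernel once as a warm-up, then average several timed runs and compare against the baseline implementation on the same input size.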

Real-world applications of CUDA

CUDA has found numerous successful implementations across various industries, from gaming graphics to scientific simulations. For example, in the field of healthcare, CUDA is used for analyzing medical images with machine learning algorithms to extract valuable insights and improve diagnostics.

As technology evolves, we can expect further innovations in CUDA development. Trends such as increased adoption of AI and machine learning in computation-heavy applications signify a burgeoning future for CUDA technologies.

Integrating CUDA with document management

CUDA’s computational prowess can significantly accelerate document processing tasks, such as rendering complex document formats or conducting rapid OCR operations. By employing CUDA in these processes, organizations can greatly reduce the time spent on manual document handling.

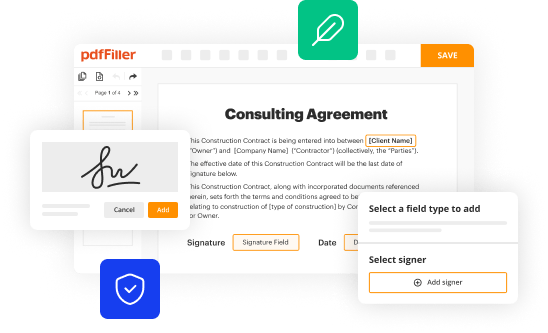

Real-time collaboration and eSigning functionalities can also be enhanced with CUDA applications. Integrating PDF management features enables users to work seamlessly with documents using accelerated processing, ensuring both speed and efficiency in collaborative environments.

Interactive tools for managing your CUDA applications

Utilizing tools like pdfFiller provides a robust solution for documentation. This platform allows developers to create, edit, and manage documentation for CUDA applications efficiently. With easy-to-use features that streamline the documentation process, developers can focus more on building powerful applications.

Interactive templates designed for CUDA developers can further streamline workflow. These resources allow for quick adaptations to existing projects, fostering innovation while reducing the overhead typically associated with documentation.

Final thoughts on building sophisticated CUDA applications

Continuous learning is paramount in the rapidly evolving world of CUDA development. As new updates and features are released, developers must stay informed to leverage the latest capabilities and maintain a competitive edge in application design.

Networking with the CUDA community can provide invaluable resources and support. Various forums and meetups offer opportunities for sharing insights, troubleshooting issues, and collaborating on projects that can benefit from multiple perspectives.

Additional considerations for teams

Fostering collaborative design approaches in teams is essential for streamlining CUDA application development. By utilizing code repositories, version control systems, and collaborative tools, teams can improve their workflow and communication, leading to higher quality output and faster project completion.

Teams must also take cross-platform development into account when building CUDA applications. Ensuring compatibility across different systems not only broadens the potential user base but also streamlines deployment practices, allowing for a smoother user experience across varied environments.
