Get the free Comparing Web Scraped Establishment Survey Frames of Industrial Hemp Growers in Seve...

Get, Create, Make and Sign comparing web scraped establishment

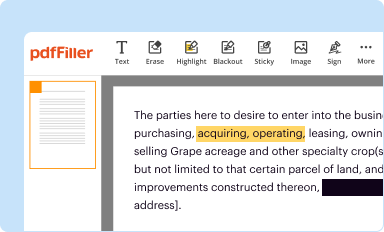

How to edit comparing web scraped establishment online

Uncompromising security for your PDF editing and eSignature needs

How to fill out comparing web scraped establishment

How to fill out comparing web scraped establishment

Who needs comparing web scraped establishment?

Comparing web scraped establishment forms for effective data collection

Understanding web scraping in the business context

Web scraping involves extracting data from websites and converting it into structured formats for analysis. In a business context, web scraping is pivotal for gathering insights that influence strategic decisions, competitive analysis, and market trends. As companies seek to harness digital data streams, understanding how to scrape establishment forms effectively becomes crucial.

Establishment forms typically encompass essential information about businesses, such as contact details, services offered, and operational data. These forms may be found on regulatory sites, business directories, and review platforms. By scraping these data points, organizations can create robust databases that inform marketing strategies and business development efforts.

Evaluating various web scraping methods for establishment forms

When it comes to web scraping establishment forms, selecting the right method is key to success. The two primary approaches are automated scraping and manual scraping, each with its own set of advantages and drawbacks.

The choice between APIs and HTML parsing matters as well. An API offers structured access to data, which generally makes results more accurate and consistent. HTML parsing allows for more complex queries and can reach data that no API exposes, but it is more error-prone because the extraction logic breaks when a site changes its layout.
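To make the contrast concrete, here is a minimal sketch that extracts the same establishment record from an API-style JSON payload and from an HTML listing. The payload, the markup, the class names, and the sample establishment are all hypothetical; a real site will differ, and the HTML path is the one that stops working if the markup changes.

```python
import json
from html.parser import HTMLParser

# Hypothetical API response: the fields arrive already structured.
API_RESPONSE = '{"name": "Green Acres Farm", "phone": "555-0100"}'

# Hypothetical HTML listing for the same establishment.
HTML_PAGE = """
<div class="listing">
  <span class="name">Green Acres Farm</span>
  <span class="phone">555-0100</span>
</div>
"""


def from_api(payload: str) -> dict:
    """Parse a JSON payload; field names come straight from the API."""
    data = json.loads(payload)
    return {"name": data["name"], "phone": data["phone"]}


class ListingParser(HTMLParser):
    """Collect text from <span> elements whose class names a wanted field."""

    FIELDS = {"name", "phone"}

    def __init__(self):
        super().__init__()
        self.record = {}
        self._current = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in self.FIELDS:
            self._current = css_class

    def handle_data(self, data):
        if self._current:
            self.record[self._current] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._current = None


def from_html(page: str) -> dict:
    """Parse an HTML listing; depends on the page keeping its class names."""
    parser = ListingParser()
    parser.feed(page)
    return parser.record


api_record = from_api(API_RESPONSE)
html_record = from_html(HTML_PAGE)
```

Both paths yield the same dictionary here, but only the HTML path depends on the `span` tags and class names staying as they are.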

Key factors to consider when comparing web scraping establishment forms

When embarking on web scraping, particularly for establishment forms, ensuring data accuracy is paramount. Inaccurate data can lead to misguided decisions that negatively impact operations. Techniques to enhance data quality include validating scraped information against trusted sources, applying data cleaning methods, and implementing checks to address inconsistencies.
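The cleaning and validation steps described above can be sketched as follows. This is an illustration under stated assumptions: records are dictionaries with hypothetical `name` and `phone` fields, duplicates are detected by name and phone, and the trusted source is a simple set of known phone numbers.

```python
import re


def normalize(record: dict) -> dict:
    """Collapse whitespace in the name and keep only digits in the phone."""
    name = re.sub(r"\s+", " ", record.get("name", "")).strip()
    phone = re.sub(r"\D", "", record.get("phone", ""))
    return {"name": name, "phone": phone}


def clean(records: list[dict]) -> list[dict]:
    """Normalize records and drop duplicates keyed on (lowercased name, phone)."""
    seen = set()
    result = []
    for raw in records:
        rec = normalize(raw)
        key = (rec["name"].lower(), rec["phone"])
        if rec["name"] and key not in seen:
            seen.add(key)
            result.append(rec)
    return result


def unverified(records: list[dict], trusted_phones: set[str]) -> list[dict]:
    """Return records whose phone is missing from the trusted reference set."""
    return [r for r in records if r["phone"] not in trusted_phones]


# Hypothetical scraped rows: the first two describe the same establishment.
scraped = [
    {"name": "  Green  Acres Farm ", "phone": "(555) 010-0100"},
    {"name": "green acres farm", "phone": "555-010-0100"},
    {"name": "Hemp Co", "phone": "555 010 0199"},
]
cleaned = clean(scraped)
needs_review = unverified(cleaned, trusted_phones={"5550100100"})
```

Records that land in `needs_review` are not necessarily wrong; they are simply the ones the trusted source could not confirm, so they are candidates for a manual check.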

Speed and efficiency matter as well. Throughput varies widely between scraping tools: some can scrape thousands of records within minutes, while others take significantly longer. Optimizing scraping routines through parallel processing or dedicated servers can further improve performance.
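As one way to apply parallel processing, the sketch below fans requests out over a thread pool from Python's standard library. The `fetch` callable is an assumption: it stands in for whatever function actually downloads a page, and `fake_fetch` is a hypothetical placeholder so the example runs without network access.

```python
from concurrent.futures import ThreadPoolExecutor


def scrape_all(urls, fetch, max_workers=8):
    """Fetch every URL concurrently; results keep the order of `urls`."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, urls))


def fake_fetch(url):
    # Stand-in for a real HTTP request (hypothetical).
    return f"<html>{url}</html>"


pages = scrape_all(
    ["https://example.com/a", "https://example.com/b"],
    fake_fetch,
)
```

Threads suit this workload because scraping is mostly waiting on the network. The worker count should stay low enough to respect the target site's capacity.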

It is also essential to consider the compliance and legal frameworks that govern data scraping. Understanding copyright issues, honoring each site's robots.txt directives, and following ethical scraping practices are necessary to mitigate legal risk.
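A robots.txt check can be automated with `urllib.robotparser` from Python's standard library. The sketch below parses a hypothetical robots.txt held in a string so it runs offline; the bot name and URLs are placeholders. Against a live site, the parser's `set_url()` and `read()` methods fetch the file instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_text: str) -> RobotFileParser:
    """Build a parser from robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# "example-bot" is a placeholder user agent.
allowed = parser.can_fetch("example-bot", "https://example.com/directory/page1")
blocked = parser.can_fetch("example-bot", "https://example.com/private/records")
delay = parser.crawl_delay("example-bot")  # seconds requested between hits
```

Passing this check does not settle copyright or terms-of-use questions; it only confirms that the site owner has not asked crawlers to stay away from a given path.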

Top tools and libraries for web scraping

The landscape of scraping tools is rich and varied, with both open-source and premium options available.

User experience shows that context matters when choosing a tool: Scrapy is well suited to developers, while Octoparse is a better fit for non-technical users. Using the right tool for the team and the target sites can bring significant gains in efficiency and accuracy when scraping establishment forms.

Best practices for effective web scraping of establishment forms

Setting up an effective scraping environment is crucial for success. Recommended configurations include using virtual machines or containers to isolate scraping activities, which helps maintain a clean environment and avoid conflicts with other applications.

Integrating useful plugins and browser extensions can enhance scraping capabilities. For instance, tools that simulate user interactions or manage cookies can significantly improve scraping efficiency.

When designing scraping scripts, structuring them for maximum efficiency is key. This includes modular coding practices for easy updates, thorough documentation, and robust error handling to ensure continuity and reliability.
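One small building block for robust error handling is a retry wrapper that any scraping step can reuse. The sketch below is illustrative: the function name, the default attempt count, and the doubling delay are assumptions, not a prescribed configuration.

```python
import logging
import time

logger = logging.getLogger("scraper")


def with_retries(task, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call `task()` until it succeeds or `attempts` tries have failed.

    The wait doubles after each failure (base_delay, 2 * base_delay, ...).
    The last error is re-raised so the caller can decide what to do.
    """
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception as exc:
            logger.warning("attempt %d/%d failed: %s", attempt, attempts, exc)
            if attempt == attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))


# Usage: a hypothetical task that fails twice before succeeding.
calls = {"count": 0}


def flaky_task():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ValueError("temporary failure")
    return "ok"


waits = []
result = with_retries(flaky_task, sleep=waits.append)  # record waits, don't sleep
```

Passing `sleep` as a parameter keeps the wrapper modular: production code uses the real `time.sleep`, while tests can substitute a recorder as shown.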

Common challenges in web scraping establishment forms

Web scraping is not without its challenges. Common issues include CAPTCHAs, blocked IP addresses, and dynamic content that requires more advanced techniques to capture.

Techniques such as rotating IP addresses, using headless browsers, and pausing between requests can mitigate these challenges and improve success rates.
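Rotation and timed pauses can be sketched together as below. Everything here is hypothetical: the proxy labels, the pause range, and the `fetch(url, proxy)` signature are placeholders for whatever HTTP client and proxy pool are in use. Headless browsers are outside the scope of this example.

```python
import itertools
import random
import time


def paced_requests(urls, proxies, fetch, min_pause=1.0, max_pause=3.0,
                   sleep=time.sleep):
    """Fetch URLs one at a time, cycling through proxies.

    A random pause between min_pause and max_pause seconds is inserted
    before every request except the first.
    """
    proxy_cycle = itertools.cycle(proxies)
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(random.uniform(min_pause, max_pause))
        results.append(fetch(url, next(proxy_cycle)))
    return results


def fake_fetch(url, proxy):
    # Stand-in for a real request routed through `proxy` (hypothetical).
    return (url, proxy)


pauses = []
results = paced_requests(
    ["/a", "/b", "/c"],
    proxies=["proxy-1", "proxy-2"],
    fetch=fake_fetch,
    sleep=pauses.append,  # record the pauses instead of actually waiting
)
```

Randomized pauses also reduce load on the target server, which fits the ethical scraping practices discussed earlier.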

Future trends in web scraping for establishments

As technology evolves, so does the landscape of web scraping. Emerging trends include the increased use of machine learning to automate data extraction and predictive analytics to identify valuable insights from scraped data.

Additionally, businesses must stay vigilant regarding potential shifts in compliance regulations. Understanding the legal implications of data scraping and adapting strategies accordingly will be imperative for future operations.

Integrating scraped data into business processes

Successfully leveraging the data acquired from web scraped establishment forms is vital for maximizing its benefits. Integration into marketing strategies allows companies to target audiences more effectively based on accurate, up-to-date information.

Furthermore, utilizing data visualization tools can help convey insights derived from scraped content, facilitating better strategic planning and operational adjustments. Collaboration across teams to share this data enriches decision-making and aligns efforts.

Measuring the ROI of web scraping activities

Focusing on key performance indicators (KPIs) can help organizations measure the return on investment from their web scraping efforts. Possible KPIs include data accuracy, time saved in information retrieval, and the impact on decision-making processes.
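Two of these KPIs reduce to simple arithmetic. The sketch below shows one possible way to compute them; the function names, the input shapes, and the sample figures are assumptions for illustration, not measured results.

```python
def accuracy_rate(scraped: dict, verified: dict) -> float:
    """Share of verified records whose scraped value matches exactly."""
    if not verified:
        return 0.0
    matches = sum(
        1 for key, value in verified.items() if scraped.get(key) == value
    )
    return matches / len(verified)


def hours_saved(records: int, manual_minutes_per_record: float,
                scraper_hours: float) -> float:
    """Estimated manual effort avoided, minus time spent running the scraper."""
    return records * manual_minutes_per_record / 60 - scraper_hours


# Hypothetical sample: phone numbers keyed by establishment ID.
scraped_phones = {"e1": "5550100", "e2": "5550101", "e3": "0000000"}
verified_phones = {"e1": "5550100", "e2": "5550101", "e3": "5550102", "e4": "5550103"}

accuracy = accuracy_rate(scraped_phones, verified_phones)
saved = hours_saved(records=600, manual_minutes_per_record=2, scraper_hours=1.5)
```

In this sample, two of the four verified records match, and 600 records at two minutes each amount to 20 hours of manual work, of which 1.5 hours are spent running the scraper.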

For instance, businesses that have adopted web scraping for establishment forms report increased lead generation and better-targeted marketing campaigns, both of which contribute to revenue.

Leveraging pdfFiller for effective document management

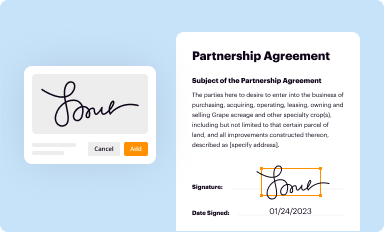

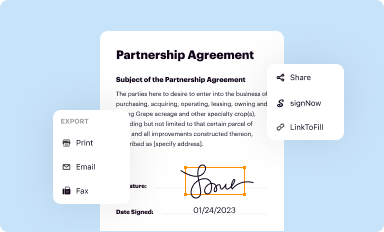

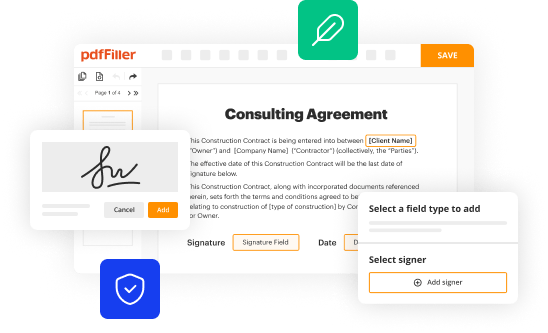

pdfFiller is a cloud-based document management solution that complements web scraping activities. By bringing scraped data into pdfFiller, users can create, edit, and manage establishment forms in one place.

With features like eSigning and collaborative tools, pdfFiller enhances document workflows, making it easier for teams to focus on strategic initiatives rather than administrative tasks. This not only increases productivity but also streamlines overall management efforts.

Next steps for your web scraping journey

For those looking to advance their web scraping skills, numerous resources are available. Online communities and forums provide platforms for sharing experiences, troubleshooting issues, and discovering new technologies.

Engagement in such community spaces can significantly enhance learning and foster collaboration, ultimately empowering users to harness the full potential of web scraping, especially for gathering and managing establishment forms effectively.
