Model Calibration by Optimization: A Comprehensive Guide

Understanding model calibration

Model calibration is the process of adjusting a model's parameters so that its outputs agree as closely as possible with observed outcomes. For probabilistic models the term has a second, related meaning: predicted probabilities should match observed frequencies, so that, for example, about 70% of the cases assigned a 70% probability actually turn out positive. Accurate calibration makes models more trustworthy in applications ranging from machine learning to statistical analysis.

In the context of data science and statistics, model calibration plays a vital role as it enables professionals to fine-tune models based on empirical data. This process aids in reducing systematic biases that might skew predictions. Moreover, a well-calibrated model can significantly improve decision-making outcomes across sectors such as finance, healthcare, and engineering.

It enhances the predictive performance of models.

It aligns model outputs more closely with real-world data.

When checked against held-out data, it helps reveal and mitigate overfitting.

The role of optimization in calibration

Optimization techniques are foundational in the calibration process, as they systematically adjust model parameters to minimize the difference between predicted outcomes and actual values. By employing optimization, data scientists can identify the most effective settings for their models, thereby enhancing overall accuracy.
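Written out, one common choice of objective is the mean squared error between the model's predictions and the observed values, with calibration choosing the parameters that minimize it. The squared-error loss is an assumption here; the right loss depends on the problem.

```latex
\hat{\theta} = \arg\min_{\theta} L(\theta),
\qquad
L(\theta) = \frac{1}{n} \sum_{i=1}^{n} \bigl( y_i - f(x_i; \theta) \bigr)^2
```

Here f(x; θ) is the model with parameters θ, and (x_i, y_i) for i = 1 to n are the observed input/outcome pairs. Other losses, such as absolute error, or log loss for probabilistic models, fit the same pattern.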

Beyond reducing error on the calibration data, optimization paired with proper validation helps ensure that the calibrated model generalizes to unseen data. This makes it a powerful tool for improving predictive models, especially with complex models and datasets, where manual or trial-and-error calibration may fall short.

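Gradient descent: A widely used technique that repeatedly moves the parameters a small step in the direction that most decreases the error (the negative gradient). It requires a differentiable objective and may settle in a local minimum.

Genetic algorithms: Population-based heuristics that mimic natural selection (selection, crossover, and mutation) to search for good parameter values without needing gradients.

Bayesian optimization: Builds a probabilistic surrogate of the objective, often a Gaussian process, and uses it to decide which parameter values to evaluate next, keeping the number of costly evaluations small.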
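As a minimal sketch of the first technique, the Python below calibrates a two-parameter linear model, y ≈ a·x + b, by plain gradient descent on mean squared error. The function name, learning rate, and step count are illustrative assumptions, not tuned values or any library's API.

```python
def gradient_descent_calibrate(xs, ys, lr=0.05, steps=2000):
    """Calibrate y ≈ a*x + b by gradient descent on mean squared error.

    Illustrative sketch: the name and default settings are hypothetical.
    """
    a, b = 0.0, 0.0  # initial parameter guess
    n = len(xs)
    for _ in range(steps):
        # Residuals of the current model against the observed values
        residuals = [a * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(r**2) with respect to a and b
        grad_a = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
        grad_b = 2.0 / n * sum(residuals)
        # Step against the gradient
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b


a, b = gradient_descent_calibrate([0, 1, 2, 3, 4], [1, 3, 5, 7, 9])
print(round(a, 3), round(b, 3))  # prints 2.0 1.0
```

The learning rate is the main knob: too small and convergence is slow, too large and the updates overshoot and diverge.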

Step-by-step guide to model calibration by optimization

Preparation phase

The first step in effective model calibration is to identify the model to calibrate and gather the necessary datasets. Next, formulate clear calibration goals: benchmarks that define success (for example, a maximum acceptable error on held-out data) and the areas the optimization should focus on.
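One way to make such goals concrete is to write them down as explicit thresholds that a calibration run must meet. The sketch below is hypothetical: the metric names and threshold values are placeholders to be replaced with whatever benchmarks a project actually sets.

```python
# Hypothetical calibration goals: metric name -> (kind, threshold).
# "max" means the metric must not exceed the threshold; "min" means it must reach it.
GOALS = {
    "validation_mse": ("max", 0.05),
    "brier_score": ("max", 0.20),
    "r_squared": ("min", 0.80),
}


def check_goals(results, goals=GOALS):
    """Return the names of goals the results fail; a missing metric counts as a failure."""
    failed = []
    for name, (kind, threshold) in goals.items():
        value = results.get(name)
        if value is None:
            failed.append(name)
        elif kind == "max" and value > threshold:
            failed.append(name)
        elif kind == "min" and value < threshold:
            failed.append(name)
    return failed


print(check_goals({"validation_mse": 0.03, "brier_score": 0.25, "r_squared": 0.9}))
# prints ['brier_score']
```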

Data preparation

Data collection tools include web scraping, sensors, and database queries. Collecting diverse data can make a model more robust, helping it perform well across varying conditions. After collection, the data needs rigorous cleaning and preprocessing; outlier detection, normalization, and filling gaps are key steps in this phase.
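The three cleaning steps can be sketched for a single numeric column in plain Python. The choices here are assumptions for illustration: missing values are represented as None and filled with the median, outliers are clipped (not dropped) at a z-score limit, and the result is min-max scaled to [0, 1].

```python
import statistics


def prepare_column(values, z_limit=3.0):
    """Fill gaps, clip outliers, and min-max normalize one numeric column.

    Illustrative sketch; the name and the z_limit default are hypothetical.
    """
    observed = [v for v in values if v is not None]
    # 1. Fill gaps (None) with the median of the observed values
    median = statistics.median(observed)
    filled = [median if v is None else v for v in values]
    # 2. Clip outliers to mean +/- z_limit population standard deviations
    mean = statistics.fmean(filled)
    sd = statistics.pstdev(filled)
    lo, hi = mean - z_limit * sd, mean + z_limit * sd
    clipped = [min(max(v, lo), hi) for v in filled]
    # 3. Min-max normalize to [0, 1]; a constant column maps to all zeros
    vmin, vmax = min(clipped), max(clipped)
    span = vmax - vmin
    return [0.0 if span == 0 else (v - vmin) / span for v in clipped]


print(prepare_column([1, 2, None, 3, 4]))
```

In a real pipeline the median, mean, and standard deviation would be computed on training data only and then reused for new data.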

Choosing the right optimization technique

Selecting the right optimization method is crucial for effective calibration. Key considerations include the complexity of the model, the nature of the data, and the available computational resources. As a rough guide, simpler models with smooth, differentiable objectives often work well with gradient descent, while complex models whose objectives are non-differentiable, have many local minima, or are expensive to evaluate may call for genetic algorithms or Bayesian optimization.
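To illustrate the derivative-free end of that spectrum, here is a deliberately small genetic algorithm: truncation selection (the better half survives), uniform crossover, and Gaussian mutation clipped to parameter bounds. It is a teaching sketch with hypothetical names and untuned settings, not a reference implementation of any GA library. The example loss uses absolute errors, which has no gradient where a residual is zero; the GA only ever compares loss values, so that does not matter to it.

```python
import random


def genetic_calibrate(loss, bounds, pop_size=40, generations=100,
                      mutation_scale=0.05, seed=0):
    """Minimize loss(params) within box bounds using a simple genetic algorithm (sketch)."""
    rng = random.Random(seed)
    # Initial population: random parameter vectors inside the bounds
    pop = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: rank by loss and keep the better half as parents
        pop.sort(key=loss)
        parents = pop[: pop_size // 2]
        children = []
        while len(parents) + len(children) < pop_size:
            p1, p2 = rng.sample(parents, 2)
            # Uniform crossover: each gene comes from one parent at random
            child = [rng.choice(pair) for pair in zip(p1, p2)]
            # Gaussian mutation scaled to each range, then clipped to the bounds
            child = [min(max(g + rng.gauss(0, mutation_scale * (hi - lo)), lo), hi)
                     for g, (lo, hi) in zip(child, bounds)]
            children.append(child)
        pop = parents + children
    return min(pop, key=loss)


# Example: absolute-error loss for y ≈ a*x + b on data generated with a = 2, b = 1
data = [(x, 2 * x + 1) for x in range(5)]


def l1_loss(params):
    a, b = params
    return sum(abs(a * x + b - y) for x, y in data)


best = genetic_calibrate(l1_loss, bounds=[(-5.0, 5.0), (-5.0, 5.0)])
print(best)  # should land close to [2, 1]; exact digits depend on the seed
```

Keeping the parents unchanged in each new generation (elitism) guarantees the best loss found never gets worse.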

Implementing the optimization

Running an optimization algorithm well requires understanding its settings, such as the learning rate, population size, or iteration budget, and the objective it minimizes. Establish clear metrics to guide the search and improve the model iteratively. Monitoring progress, for example by tracking the loss at each iteration, reveals deviations from expected behavior such as a stalled or diverging loss, so that adjustments can be made in time.
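The monitoring advice can be made concrete with a small wrapper that records the loss at every iteration and stops with an explicit status. The sketch below uses one-parameter gradient descent for brevity; the function name, the convergence tolerance, and the rule that any increase in loss ends the run are illustrative assumptions (noisy optimizers such as stochastic gradient descent would need a more forgiving check).

```python
import math


def monitored_descent(loss, grad, theta0, lr=0.1, max_iter=1000, tol=1e-9):
    """One-parameter gradient descent that logs the loss and reports why it stopped.

    Illustrative sketch; the name, defaults, and stopping rules are hypothetical.
    """
    theta = theta0
    history = [loss(theta)]
    status = "stopped: max_iter reached"
    for _ in range(max_iter):
        theta -= lr * grad(theta)
        current = loss(theta)
        history.append(current)
        improvement = history[-2] - current
        # A rising or non-finite loss usually means the step size is too large
        if not math.isfinite(current) or improvement < 0:
            status = "stopped: loss increased, try a smaller learning rate"
            break
        # Progress has become negligible: treat the run as converged
        if improvement < tol:
            status = "converged"
            break
    return theta, history, status


theta, history, status = monitored_descent(
    loss=lambda t: (t - 3) ** 2, grad=lambda t: 2 * (t - 3), theta0=0.0)
print(status, round(theta, 3), len(history))
```

Returning the full loss history makes it easy to plot the run afterwards and spot slow convergence or oscillation.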

Evaluating the calibration results

Once optimization finishes, evaluate the calibration results. Common tools for assessing calibration quality include the Brier score and reliability diagrams (also called calibration plots), which compare predicted probabilities against observed frequencies. Identifying pitfalls such as overfitting or failing to account for noise in the data is equally important for refining the calibration process.
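These checks are short to compute by hand. The sketch below, for a binary classifier, computes the Brier score, the per-bin table behind a reliability diagram (equal-width bins, an assumption), and the expected calibration error (ECE), a common one-number summary of that diagram. The function names are hypothetical; scikit-learn users would typically reach for brier_score_loss and calibration_curve instead.

```python
def brier_score(probs, outcomes):
    """Mean squared difference between predicted probabilities and 0/1 outcomes."""
    return sum((p - y) ** 2 for p, y in zip(probs, outcomes)) / len(probs)


def reliability_table(probs, outcomes, n_bins=10):
    """(mean predicted probability, observed frequency, count) per non-empty equal-width bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes into the top bin
        bins[idx].append((p, y))
    return [
        (sum(p for p, _ in b) / len(b), sum(y for _, y in b) / len(b), len(b))
        for b in bins if b
    ]


def expected_calibration_error(probs, outcomes, n_bins=10):
    """Count-weighted mean gap between predicted probability and observed frequency."""
    n = len(probs)
    return sum(count / n * abs(mean_p - freq)
               for mean_p, freq, count in reliability_table(probs, outcomes, n_bins))


probs = [0.9, 0.9, 0.1, 0.1]
outcomes = [1, 1, 0, 0]
print(brier_score(probs, outcomes), expected_calibration_error(probs, outcomes))
```

Plotting the first two columns of reliability_table against each other, with the diagonal as reference, gives the reliability diagram itself.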

Tools for model calibration

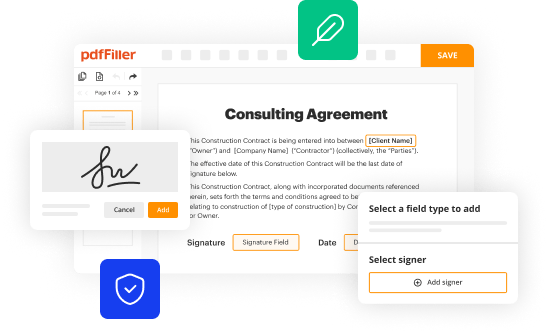

Various tools and software packages are available for model calibration, and popular packages combine accessible interfaces with robust functionality. For documenting the work, pdfFiller stands out by offering interactive tools that support collaboration throughout the calibration process; its cloud-based platform lets teams keep calibration-related documents together in one place.

For instance, pdfFiller's features aid in creating, editing, and managing essential documentation, ensuring that calibration steps are meticulously recorded. This contributes to transparency and eases the recalibration process as models evolve over time.

R: Offers multiple packages specifically designed for model calibration.

Python: Libraries such as scikit-learn (including its CalibratedClassifierCV class), SciPy (the scipy.optimize module), and TensorFlow provide extensive functionality.

MATLAB: Contains built-in functions for model calibration suitable for various applications.

Case studies in model calibration by optimization

Several real-world scenarios illustrate calibration through optimization. In finance, Bayesian optimization has been applied to refine models that predict stock movements, with the aim of strengthening risk management strategies.

Another notable case is weather forecasting, where calibrating predictive models with genetic algorithms has improved accuracy. These cases highlight critical factors such as data quality, iterative testing, and optimizing for the specific context, underscoring the practical value of calibration methods.

Best practices for maintaining calibration

To ensure ongoing accuracy, models must be regularly reviewed and recalibrated. This includes scheduling periodic assessments to identify shifts in data patterns and adjusting models accordingly. Additionally, documentation of the calibration processes is essential for transparency and reproducibility.
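One common way to schedule such assessments is to compare the distribution of an input (or of the model's scores) in recent data against a baseline, and to flag recalibration when it shifts. The sketch below computes the population stability index (PSI). The function names, bin edges, the small floor for empty bins, and the 0.25 trigger (a widely quoted rule of thumb, not a universal standard) are assumptions to adapt.

```python
import bisect
import math


def population_stability_index(baseline, recent, edges, floor=1e-6):
    """PSI = sum((r - b) * ln(r / b)) over bins, where b and r are bin shares."""
    n_bins = len(edges) - 1

    def shares(sample):
        counts = [0] * n_bins
        for v in sample:
            # Locate the bin; values outside the edges are assigned to the end bins
            idx = min(max(bisect.bisect_right(edges, v) - 1, 0), n_bins - 1)
            counts[idx] += 1
        # The floor keeps empty bins from producing log(0) or division by zero
        return [max(c / len(sample), floor) for c in counts]

    b, r = shares(baseline), shares(recent)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))


def needs_recalibration(baseline, recent, edges, threshold=0.25):
    """Flag recalibration when PSI exceeds an assumed rule-of-thumb threshold."""
    return population_stability_index(baseline, recent, edges) > threshold


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [0.1, 0.3, 0.4, 0.6, 0.7, 0.9]
recent = [0.6, 0.7, 0.8, 0.8, 0.9, 0.95]
print(needs_recalibration(baseline, recent, edges))  # prints True
```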

Tools such as those offered by pdfFiller can support this documentation by letting users annotate and organize calibration results. A consistent, collaborative approach to model maintenance strengthens calibration efforts over time.

Troubleshooting common issues in calibration

Common calibration issues include overfitting, data leakage, and failure to measure calibration quality adequately. Recognizing these challenges is the first step in addressing them. Overfitting, typically seen as high performance on training data but poor predictive power on test data, can be detected with cross-validation and reduced with techniques such as regularization or a simpler model.
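Cross-validation estimates how well calibrated parameters hold up on data they were not fitted to; a training error far below the cross-validated error is the classic sign of overfitting. Below is a minimal k-fold sketch for a straight-line model fitted by ordinary least squares. The helper names, k = 5, and the shuffling seed are illustrative choices.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b (closed form)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, my - a * mx


def k_fold_mse(xs, ys, k=5, seed=0):
    """Mean held-out squared error over k folds of a shuffled index list (needs k <= len(xs))."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    fold_mses = []
    for f in range(k):
        held_out = idx[f::k]  # every k-th shuffled index forms one fold
        held_set = set(held_out)
        train = [i for i in idx if i not in held_set]
        # Fit on the training folds only, then score on the held-out fold
        a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        errors = [(a * xs[i] + b - ys[i]) ** 2 for i in held_out]
        fold_mses.append(sum(errors) / len(errors))
    return sum(fold_mses) / k


xs = list(range(20))
ys = [2 * x + 1 + (0.5 if x % 2 else -0.5) for x in xs]
print(k_fold_mse(xs, ys))
```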

Data leakage, where information from the validation data influences model training, can be a critical flaw. The remedy is strict separation of training and validation data, including fitting preprocessing steps such as normalization on the training data alone. Regularly reviewing calibration practices and adjusting optimization strategies also helps overcome these hurdles.
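A frequent source of leakage is computing normalization statistics on the full dataset before splitting it. The sketch below does the split first and fits the scaler on the training part only. The function name and the 80/20 split are hypothetical, and the split keeps the original order, which suits chronological data.

```python
import statistics


def split_then_standardize(values, train_fraction=0.8):
    """Split first, then standardize both parts with statistics from the training part only."""
    cut = int(len(values) * train_fraction)
    train, valid = values[:cut], values[cut:]  # order kept, e.g. for time series
    # The scaler is fitted on training data only, so validation values never influence it
    mu = statistics.fmean(train)
    sd = statistics.pstdev(train) or 1.0  # guard against a constant column

    def scale(v):
        return (v - mu) / sd

    return [scale(v) for v in train], [scale(v) for v in valid], (mu, sd)


train_z, valid_z, (mu, sd) = split_then_standardize([1, 2, 3, 4, 100])
print(mu)  # prints 2.5: the extreme validation value 100 did not shift the mean
```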

The future of model calibration

Emerging trends in optimization and model calibration, particularly with the integration of artificial intelligence, are reshaping the landscape. AI-driven techniques enable adaptive calibration, where models continuously learn and adjust based on live data streams. These advancements promise heightened accuracy and efficiency.
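Production systems for adaptive calibration vary widely, but the core idea of adjusting parameters as each new observation arrives can be shown with a much simpler, non-AI stand-in: an online (stochastic gradient) update of a linear model. The class name, learning rate, and the simulated drift below are assumptions for illustration only.

```python
class OnlineLinearCalibrator:
    """Recalibrates y ≈ a*x + b incrementally, one observation at a time (hypothetical sketch)."""

    def __init__(self, lr=0.01):
        self.a, self.b, self.lr = 0.0, 0.0, lr

    def predict(self, x):
        return self.a * x + self.b

    def update(self, x, y):
        # One stochastic gradient step on the single-sample squared error
        err = self.predict(x) - y
        self.a -= self.lr * 2 * err * x
        self.b -= self.lr * 2 * err


model = OnlineLinearCalibrator()
for _ in range(3000):  # simulated stream generated with a = 2, b = 1
    for x in range(5):
        model.update(x, 2 * x + 1)
for _ in range(3000):  # simulated drift: the intercept shifts from 1 to 3
    for x in range(5):
        model.update(x, 2 * x + 3)
print(round(model.a, 3), round(model.b, 3))  # prints 2.0 3.0
```

A constant learning rate keeps the model responsive to drift; the trade-off is more sensitivity to noise in each new observation.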

Furthermore, innovations such as transfer learning are gaining traction. They allow models to generalize better across different domains, thus reducing the need for exhaustive recalibration processes. These trends highlight the exciting future for model calibration and its crucial role in data-driven decision-making.

Community insights

Engaging with the community opens channels for sharing best practices and learning collectively. Forums and social media groups can be invaluable for exchanging experience with specific calibration techniques and tools, and user contributions often lead to novel strategies that improve calibration methodology overall.

Collaborating with peers provides opportunities for peer review and constructive feedback, which can refine your model calibration processes. It fosters an environment where practitioners can share successes and challenges, creating a richer understanding of the intricacies involved in model calibration.

Frequently asked questions

Several questions come up often about model calibration by optimization. Users frequently ask which optimization technique suits a given type of data or model. Many are concerned about the risk of overfitting during calibration, while others want clarity on the metrics used to evaluate calibration success.

Addressing these questions typically involves providing well-documented resources and examples, ensuring practitioners have a solid foundation from which to operate. Expert insights into these queries help demystify the calibration process and guide users toward successful implementations.

Specialized resources

For advanced users, additional tools and resources enhance the calibration experience. Tutorials, webinars, and dedicated forums allow users to deepen their expertise. Engaging with these resources not only broadens knowledge but also establishes connections with industry experts and fellow practitioners.

Understanding nuanced techniques and exploring innovative methodologies can drastically improve model calibration efforts. Continuous learning opportunities empower users to remain competitive while optimizing their approaches to model calibration.

Feedback and user experiences

Many individuals and teams have unique journeys in model calibration. Sharing your calibration experiences can stimulate conversations and innovations, fostering a collaborative spirit among practitioners. This feedback loop encourages adaptations based on diverse experiences, allowing for continual refinement of techniques.

Moreover, discussing the optimization techniques that have worked well gives others insight into practical applications, adding to the collective knowledge of model calibration by optimization.