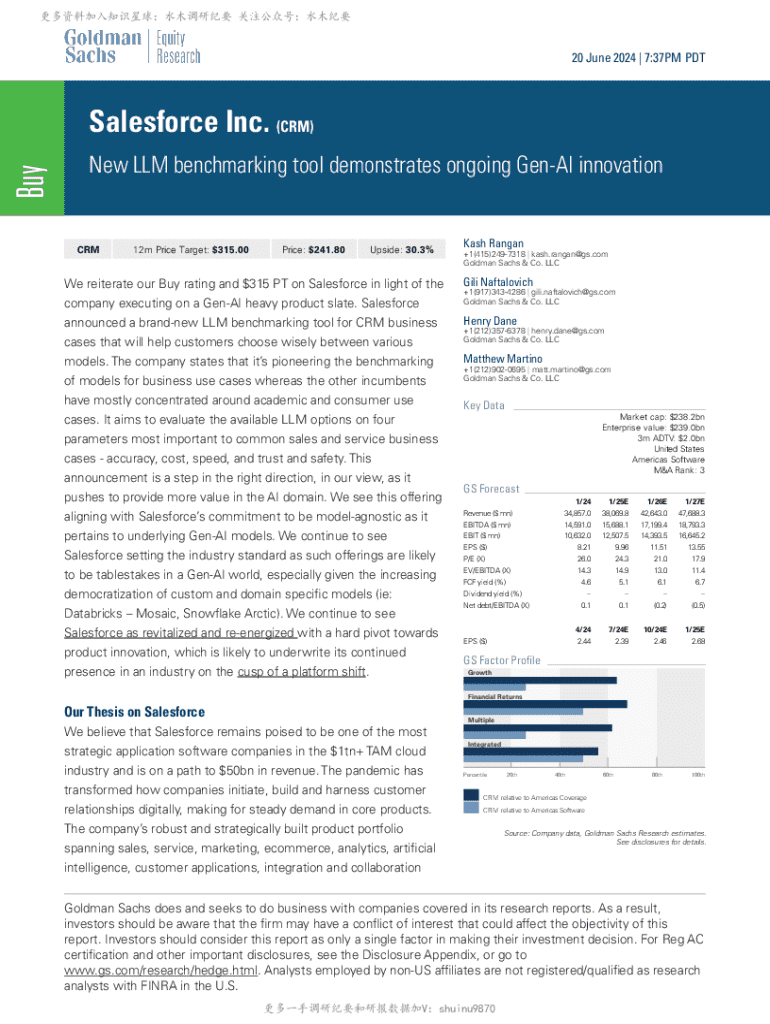

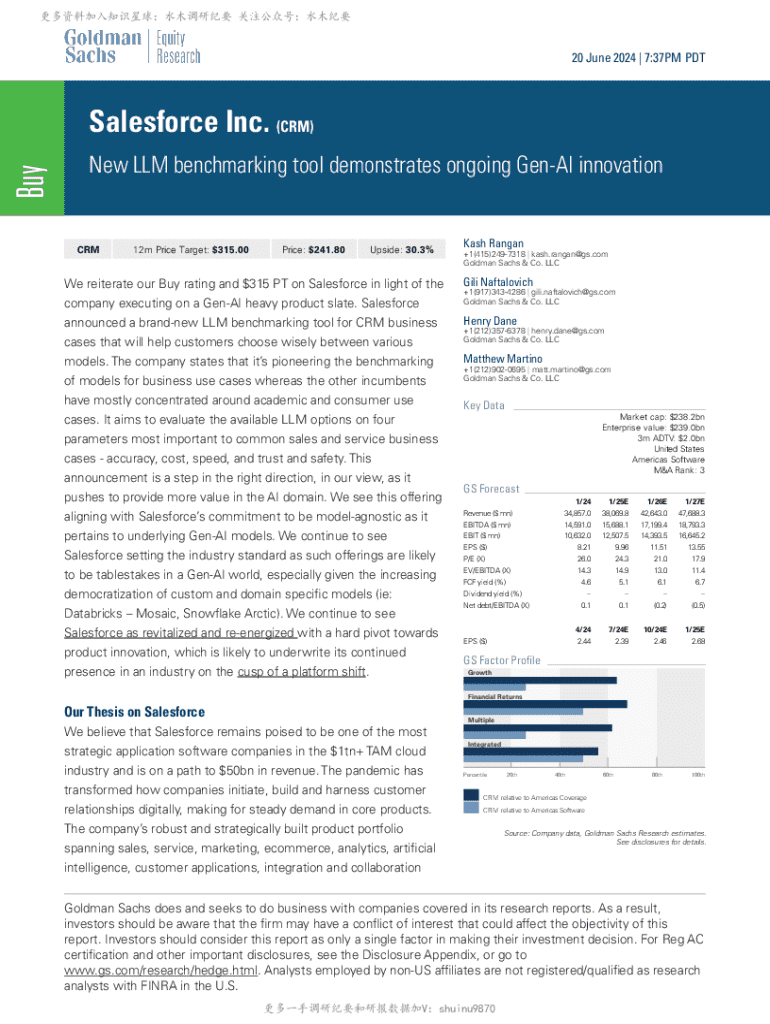

New LLM benchmarking tool demonstrates ongoing Gen-AI innovation

Get, Create, Make and Sign new llm benchmarking tool

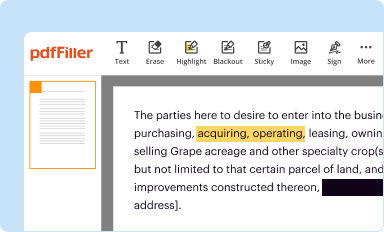

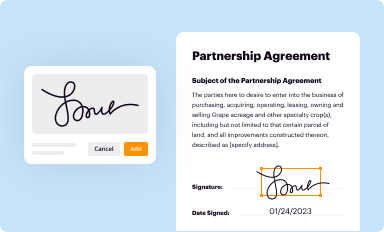

How to edit new llm benchmarking tool online

Uncompromising security for your PDF editing and eSignature needs

How to fill out new llm benchmarking tool

How to fill out new llm benchmarking tool

Who needs new llm benchmarking tool?

Introducing the New Benchmarking Tool Form

Understanding benchmarking

An LLM benchmarking tool is designed to evaluate the effectiveness and performance of large language models (LLMs) in various applications. This tool serves a critical role in the AI landscape by providing developers and researchers with the means to quantify and compare the capabilities of different models. Such comparisons help stakeholders make informed decisions regarding model adoption and deployment.

Benchmarking is essential in AI development, helping ensure that models not only perform well on paper but also in practical applications. By establishing a systematic approach to performance evaluation, developers can identify strengths and weaknesses in their models, fostering continuous improvement.

Key features of effective benchmarking tools

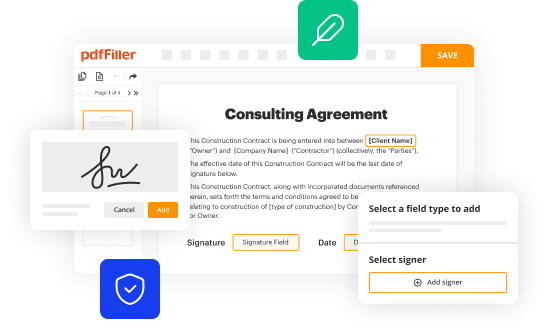

An effective LLM benchmarking tool should offer a user-friendly interface, enabling both technical and non-technical users to navigate easily. An intuitive design not only enhances user experience but also promotes broader accessibility across various devices, ensuring everyone can participate in the benchmarking process.

One of the core elements of any LLM benchmarking tool is its set of evaluation metrics. Metrics such as accuracy, precision, recall, and F1 score (the harmonic mean of precision and recall) give users a clear picture of model performance. Transparency in reporting results is crucial, as it allows users to trust the findings and make data-driven decisions.
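To make the metrics above concrete, here is a minimal sketch that computes them for a binary classification task. It assumes 0/1 labels; the names `BinaryMetrics` and `binary_metrics` and the sample data are illustrative and do not come from any particular benchmarking tool.

```python
from dataclasses import dataclass


@dataclass
class BinaryMetrics:
    accuracy: float
    precision: float
    recall: float
    f1: float


def binary_metrics(y_true: list[int], y_pred: list[int]) -> BinaryMetrics:
    """Compute accuracy, precision, recall, and F1 for 0/1 labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")

    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives

    accuracy = (tp + tn) / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return BinaryMetrics(accuracy, precision, recall, f1)


# Illustrative data only: 8 examples, 3 true positives, 1 false positive,
# 1 false negative, 3 true negatives.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Reporting the four values together, rather than accuracy alone, makes it harder for a class imbalance to hide a weak model.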

Integration capabilities are vital, allowing the benchmarking tool to interface seamlessly with existing systems and workflows. With cloud-based platforms like pdfFiller, users can easily access and manage benchmarking data from anywhere, enhancing collaboration and efficiency.

The benchmarking process explained

Benchmarking LLMs follows a systematic process starting with the selection of models to evaluate. Identifying the right candidates is crucial, as it directly influences the relevance of the results. Following this, setting up the benchmarking environment involves configuring hardware and software to ensure consistent and accurate performance assessments.

After executing benchmarking tests, the critical step is analyzing the results. It's important to understand what the scores mean and how they relate to model performance. By recognizing patterns and trends in the data, teams can determine which models best suit their specific applications, paving the way for informed decision-making.
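The process described above (select models, run them under identical conditions, then analyze the scores) can be sketched as a small harness. This is a sketch under stated assumptions: each model is represented by a hypothetical prompt-in, answer-out callable, scoring is case-insensitive exact match, and the toy models and examples are placeholders rather than real systems.

```python
from typing import Callable

# Hypothetical model interface: takes a prompt, returns an answer string.
Model = Callable[[str], str]


def run_benchmark(models: dict[str, Model],
                  examples: list[tuple[str, str]]) -> dict[str, float]:
    """Score every model on the same examples using exact-match accuracy."""
    if not examples:
        raise ValueError("at least one example is required")

    scores: dict[str, float] = {}
    for name, model in models.items():
        correct = sum(
            1
            for prompt, expected in examples
            if model(prompt).strip().lower() == expected.strip().lower()
        )
        scores[name] = correct / len(examples)
    return scores


def rank(scores: dict[str, float]) -> list[tuple[str, float]]:
    """Order models from highest to lowest score."""
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Toy stand-ins for real models, used only to exercise the harness.
def model_a(prompt: str) -> str:
    answers = {"2+2": "4", "capital of France": "paris",
               "opposite of hot": "warm"}
    return answers.get(prompt, "")


def model_b(prompt: str) -> str:
    answers = {"2+2": "4", "capital of France": "Paris",
               "opposite of hot": "cold"}
    return answers.get(prompt, "")


examples = [("2+2", "4"), ("capital of France", "Paris"),
            ("opposite of hot", "cold")]
scores = run_benchmark({"model_a": model_a, "model_b": model_b}, examples)
ranking = rank(scores)
print(ranking)
```

Running every model against the same example list is what makes the resulting scores comparable; changing the examples or the normalization between runs would undermine the comparison.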

Types of benchmarks to consider

When it comes to evaluating LLMs, there are two primary benchmarking approaches: standard benchmarks and customized benchmarks. Standard benchmarks provide a reliable framework based on established criteria, making them suitable for quick assessments. However, when specific needs arise, creating customized benchmarks that align with organizational goals can significantly enhance evaluation relevance and accuracy.
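As an illustration of the customized approach, the sketch below pairs each task with its own scoring function, so a generic exact-match check can sit alongside a domain-specific one. The `Task` structure, the `keyword_coverage` scorer, and the sample task are hypothetical examples, not part of any standard suite.

```python
from dataclasses import dataclass
from typing import Callable

# A scorer maps (model output, reference) to a score between 0 and 1.
Scorer = Callable[[str, str], float]


def exact_match(output: str, reference: str) -> float:
    """Generic scorer: 1.0 if the normalized strings are equal, else 0.0."""
    return float(output.strip().lower() == reference.strip().lower())


def keyword_coverage(output: str, reference: str) -> float:
    """Custom scorer: fraction of required terms found in the output.

    The reference is a comma-separated list of required terms.
    """
    terms = [t.strip().lower() for t in reference.split(",") if t.strip()]
    if not terms:
        return 0.0
    text = output.lower()
    return sum(1 for term in terms if term in text) / len(terms)


@dataclass
class Task:
    name: str
    prompt: str
    reference: str
    scorer: Scorer


def evaluate(tasks: list[Task], outputs: dict[str, str]) -> dict[str, float]:
    """Score each task's output with that task's own scorer."""
    return {t.name: t.scorer(outputs[t.name], t.reference) for t in tasks}


tasks = [
    Task("capital", "What is the capital of France?", "Paris", exact_match),
    Task("refund_policy", "Summarize our refund policy.",
         "refund, 30 days, receipt", keyword_coverage),
]
# Placeholder outputs standing in for real model responses.
outputs = {
    "capital": "paris ",
    "refund_policy": "Refunds are available within 30 days.",
}
results = evaluate(tasks, outputs)
print(results)
```

Keeping the scorer on the task itself means organization-specific checks can be added without touching the evaluation loop.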

Popular benchmark suites such as GLUE and SuperGLUE have gained prominence in the community. Each bundles a diverse set of natural language understanding tasks and datasets, so a single run gives a broad view of model capabilities.

Limitations and challenges of benchmarking

Despite their importance, LLM benchmarking tools come with inherent limitations and challenges. A common pitfall is overfitting to the benchmark, where models score well on benchmark test sets but fail to generalize to real-world applications. Similarly, biases in benchmark design can skew results, so evaluators need to approach benchmarking with a critical eye.

Mitigating these challenges requires strategies that improve evaluation accuracy, including using diverse evaluation data and employing several metrics to cover more aspects of model performance. By combining qualitative and quantitative assessments, teams can build a more complete understanding of their models.
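One simple way to cover more aspects of model performance is to report results per data slice as well as in aggregate, since a single overall score can hide a weak category. The sketch below assumes each evaluation record carries a slice label and a correct/incorrect flag; the slice names and records are made up for illustration.

```python
from collections import defaultdict


def accuracy_by_slice(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Return accuracy for each slice label found in the records."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for slice_name, correct in records:
        totals[slice_name] += 1
        hits[slice_name] += int(correct)
    return {name: hits[name] / totals[name] for name in totals}


def overall_accuracy(records: list[tuple[str, bool]]) -> float:
    """Return accuracy across all records, ignoring slice labels."""
    if not records:
        raise ValueError("at least one record is required")
    return sum(int(correct) for _, correct in records) / len(records)


# Illustrative records: (slice label, whether the model answered correctly).
records = [
    ("legal", True), ("legal", False), ("legal", False),
    ("medical", True), ("medical", True),
    ("casual", True), ("casual", True), ("casual", True),
]
per_slice = accuracy_by_slice(records)
overall = overall_accuracy(records)
print(overall, per_slice)
```

In this made-up data the overall accuracy is 0.75, while the "legal" slice sits at one in three, which is the kind of gap an aggregate number alone would not reveal.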

Advanced topics in benchmarking

The future of LLM evaluation techniques lies in the evolution of benchmarking methodologies as advancements in AI continue to unfold. Emerging tools and techniques are being developed, which leverage AI to streamline the benchmarking process and improve model evaluation accuracy. As artificial intelligence grows more capable, incorporating adaptive learning features into LLMs can further refine their responses based on benchmarking feedback.

By utilizing feedback from benchmarking processes, LLMs can continuously learn and adapt, ultimately resulting in improved performance over time. The interplay between model training and performance evaluation is crucial for developing robust, reliable language models.

Tools and resources for benchmarking

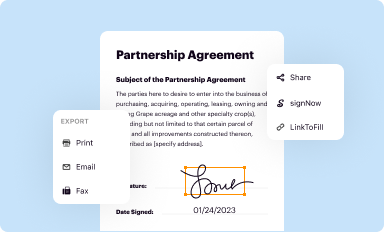

Integrating with pdfFiller's platform revolutionizes how users approach document management and LLM evaluation. By leveraging a cloud-based solution, pdfFiller users enjoy the benefits of seamless document editing, collaboration, and storage, all in one interactive environment. This integration allows teams to efficiently benchmark their models without the overhead associated with traditional evaluation methods.

For those looking to deepen their knowledge, numerous resources are available, including relevant articles, research papers, and online communities dedicated to LLM evaluation. Engaging with these resources can enhance understanding of best practices and new trends within the field.

Frequently asked questions (FAQs)

Choosing the right LLM benchmarking tool can significantly impact your evaluation process. Factors to consider include ease of use, compatibility with existing systems, and the robustness of evaluation metrics provided. It's essential to select a tool that aligns with your organization's objectives and specific use cases.

Real-time performance data from various models is often accessible through platforms like pdfFiller, allowing for immediate insights into benchmarking results. If your model underperforms, troubleshooting may involve revisiting your data quality, adjusting parameters, or exploring additional training datasets to improve overall performance.

Community insights and user experiences

Case studies highlighting successful use of LLM benchmarking tools illustrate the impact effective evaluation can have on AI projects. For instance, a tech startup may implement a new benchmarking process to gauge model performance, resulting in faster deployment and improved user satisfaction. By sharing such experiences, the community fosters a supportive environment where users can learn and grow together.

User reviews and feedback on popular tools reveal preferences related to ease of use, integration capabilities, and the depth of evaluation metrics. Understanding what other users value can guide your choice, ensuring you select the tool that best meets your benchmarking needs.
