How Does LLM Reasoning Form

Understanding LLM reasoning

Large Language Models (LLMs) are a significant class of artificial intelligence systems designed to understand and generate human-like text. Most current LLMs are transformer-based neural networks trained on vast amounts of text, typically by learning to predict the next token in a sequence. From this training they learn to process language, simulate conversations, and perform a wide range of language-related tasks.

Reasoning in LLMs is crucial as it allows them to go beyond mere text generation. Effective reasoning enhances their ability to comprehend context, draw conclusions, and provide insightful responses through logical deduction and induction.

Defining reasoning models

A reasoning model is an LLM with mechanisms, learned during training or elicited at inference time, that let it interpret information, make inferences, and solve multi-step problems. Key characteristics include processing inputs logically, keeping track of context, and deriving conclusions that align with human reasoning.

Reasoning itself is commonly divided into deductive and inductive forms. Deductive reasoning draws specific conclusions from general principles, while inductive reasoning extrapolates general rules from observed instances. Each has its own applications, from ethical decision-making to statistical analysis.
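The difference between the two forms can be made concrete with a toy sketch. This is not how an LLM represents reasoning internally; the functions `deduce` and `induce` are hypothetical illustrations, and `induce` only considers linear rules of the form y = a * x + b.

```python
from typing import Callable, List, Optional, Tuple

Rule = Callable[[float], float]


def deduce(rule: Rule, case: float) -> float:
    """Deduction: apply a known general rule to a specific case.

    If the rule is true, the conclusion is guaranteed to be true.
    """
    return rule(case)


def induce(examples: List[Tuple[float, float]]) -> Optional[Rule]:
    """Induction: infer a general rule from observed instances.

    Toy version: fit a line y = a * x + b through the first two examples and
    keep it only if every example agrees (exact comparison is fine for these
    integer examples). The result is a hypothesis that may fail on unseen cases.
    """
    if len(examples) < 2:
        return None
    (x0, y0), (x1, y1) = examples[0], examples[1]
    if x1 == x0:
        return None
    a = (y1 - y0) / (x1 - x0)
    b = y0 - a * x0
    if all(a * x + b == y for x, y in examples):
        return lambda x: a * x + b
    return None


# Deduction: the general rule "double the number" applied to 21.
print(deduce(lambda n: 2 * n, 21))  # 42

# Induction: infer the rule from examples, then apply it to an unseen input.
rule = induce([(1, 3), (2, 5), (3, 7)])
print(rule(10) if rule else "no consistent rule")  # 21.0
```

Deduction is only as reliable as its premise, while induction can generalize from data but offers no guarantee: `induce([(1, 1), (2, 4), (3, 9)])` returns `None` because no linear rule fits those squares.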

When to utilize reasoning models

Certain scenarios can greatly benefit from the reasoning capabilities of LLMs. For example, in business contexts, LLMs can streamline decision-making processes by analyzing data trends and providing actionable insights. In education, they can tailor learning experiences by assessing student queries and offering customized feedback.

However, LLM reasoning is not without its limitations. Challenges arise in scenarios requiring nuanced understanding of human emotions or when dealing with ambiguous queries where human context is vital.

The training pipeline for reasoning models

Training LLMs involves multiple stages: data gathering, pre-processing, architecture design, and model training (typically large-scale pretraining followed by fine-tuning). The initial phase focuses on assembling diverse text data so that the model develops a well-rounded understanding of language.

Data characteristics significantly impact the efficacy of reasoning capabilities. High-quality datasets with varied contexts help train models to recognize patterns, establish connections, and predict relevant outcomes effectively.
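A minimal sketch of the pre-processing stage follows, assuming three simple steps: whitespace normalization, a minimum-length filter, and exact deduplication. The function name `preprocess` and the `min_words` threshold are hypothetical; production pipelines typically add near-duplicate detection and learned quality filters on top of steps like these.

```python
import hashlib
from typing import Iterable, List


def preprocess(documents: Iterable[str], min_words: int = 5) -> List[str]:
    """Toy pre-processing pass over raw text documents.

    1. Collapse runs of whitespace.
    2. Drop documents shorter than `min_words` words (illustrative threshold).
    3. Remove exact duplicates, compared case-insensitively after normalization.
    """
    seen = set()
    kept = []
    for doc in documents:
        text = " ".join(doc.split())  # normalize whitespace
        if len(text.split()) < min_words:
            continue  # too short to be useful training text
        digest = hashlib.sha256(text.lower().encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate of a document already kept
        seen.add(digest)
        kept.append(text)
    return kept


docs = [
    "The cat sat on the mat today.",
    "the  cat sat on the mat   today.",  # duplicate once normalized
    "Too short.",
    "A completely different sentence with enough words.",
]
print(preprocess(docs))
# ['The cat sat on the mat today.', 'A completely different sentence with enough words.']
```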

Building and improving reasoning models

Several methods can be applied to improve reasoning performance in LLMs. Supervised fine-tuning (SFT) on labeled datasets, such as questions paired with worked solutions, is one effective strategy. It aligns a model more closely with task-specific requirements so that it generates more contextually relevant outputs.
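The quantity that supervised fine-tuning minimizes can be sketched as the average negative log-likelihood of the labeled answer tokens. The sketch assumes the model's probability for each correct token is already available, and it follows a common (but not universal) convention of masking out prompt tokens so only the answer is trained on; `sft_loss` is a hypothetical name.

```python
import math
from typing import List


def sft_loss(token_probs: List[float], is_target: List[bool]) -> float:
    """Average negative log-likelihood over target (answer) tokens only.

    token_probs[i]: probability the model assigned to the correct token at
    position i. is_target[i]: False for prompt tokens, which are masked out.
    """
    losses = [-math.log(p) for p, t in zip(token_probs, is_target) if t]
    if not losses:
        raise ValueError("no target tokens to train on")
    return sum(losses) / len(losses)


mask = [False, False, True, True]  # first two tokens are the prompt
print(round(sft_loss([0.9, 0.8, 0.5, 0.25], mask), 4))  # 1.0397
print(round(sft_loss([0.9, 0.8, 0.9, 0.9], mask), 4))   # 0.1054
```

Fine-tuning adjusts the weights so this loss falls, which means the model assigns higher probability to the labeled answers.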

Another approach is chain-of-thought (CoT) prompting. This technique encourages the model to lay out its intermediate reasoning steps explicitly before giving a final answer, which makes its reasoning more transparent and often improves accuracy on multi-step problems.
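A minimal sketch of few-shot chain-of-thought prompting follows: the worked example shows its intermediate steps, and the trailing cue asks the model to reason before answering. The prompt wording, the "The answer is N" convention, and the helper names `build_prompt` and `extract_answer` are illustrative assumptions, not a required template; the model call itself is left out.

```python
import re
from typing import Optional

FEW_SHOT_EXAMPLE = (
    "Q: A shop has 5 apples and buys 3 bags of 4 apples each. "
    "How many apples does it have now?\n"
    "A: It buys 3 * 4 = 12 apples. 5 + 12 = 17. The answer is 17.\n\n"
)


def build_prompt(question: str, chain_of_thought: bool) -> str:
    """Return a direct prompt or a few-shot chain-of-thought prompt."""
    if not chain_of_thought:
        return f"Q: {question}\nA:"
    # The worked example demonstrates step-by-step reasoning; the cue at the
    # end asks the model to produce its own steps before the final answer.
    return FEW_SHOT_EXAMPLE + f"Q: {question}\nA: Let's think step by step."


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final number out of a completion containing 'The answer is N'."""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None


print(build_prompt("Tom has 2 boxes of 6 pens and gives away 4. How many are left?", True))
print(extract_answer("2 * 6 = 12 pens. 12 - 4 = 8. The answer is 8."))  # 8
```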

Analyzing inference-time scaling

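Inference-time scaling means improving response quality by spending more computation when the model generates an answer, rather than during training. A well-defined prompt is the simplest lever and can significantly improve the relevance of responses. Beyond that, a model can be allowed to produce longer chains of thought, or several candidate answers can be generated and compared before one is returned. Attention mechanisms, which let a model focus on the most relevant parts of its input, also shape output quality, but they are part of the model architecture rather than something adjusted at inference time.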
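One common inference-time scaling technique, usually called self-consistency, samples several independent reasoning chains and keeps the final answer they agree on most often. The sketch below assumes a hypothetical `sample` callable that stands in for one stochastic model call (temperature above zero) and returns a parsed final answer, or an empty string when no answer could be extracted.

```python
from collections import Counter
from typing import Callable, Optional


def self_consistency(sample: Callable[[], str], n: int = 5) -> Optional[str]:
    """Draw n sampled answers and return the most frequent one (majority vote).

    A larger n spends more inference-time compute in exchange for a more
    reliable answer. Empty strings (unparseable samples) are ignored.
    """
    answers = [a for a in (sample() for _ in range(n)) if a]
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Deterministic stand-in for five model samples.
fake_outputs = iter(["17", "15", "17", "", "17"])
print(self_consistency(lambda: next(fake_outputs), n=5))  # 17
```

Majority voting works best when answers can be compared exactly, such as numbers or multiple-choice labels; free-form answers need some normalization first.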

The effective scaling of LLM reasoning capabilities has profound implications for real-world applications. Enhanced reasoning contributes to more precise interactions in customer service, improved advice in healthcare applications, and better content generation tasks.

Unique approaches to reasoning modelling

Pure supervised fine-tuning has clear benefits, notably a sharp focus on specific tasks, but it also carries pitfalls such as overfitting to the training examples. Using it well therefore requires balancing task specialization against robustness across varied applications.

Distillation also plays an important role in refining reasoning models. It transfers knowledge from a larger "teacher" model to a smaller "student" model, improving efficiency while preserving much of the teacher's reasoning capability.
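One widely used formulation of distillation trains the student to match the teacher's temperature-softened output distribution, measured by KL divergence and scaled by T squared. The sketch below computes that loss for a single position from raw logits; the function names and the default temperature of 2.0 are illustrative assumptions. For LLMs, another common variant simply fine-tunes the smaller model on text generated by the larger one.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Convert logits to probabilities; higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: List[float], teacher_logits: List[float], temperature: float = 2.0
) -> float:
    """KL(teacher || student) between softened distributions, scaled by T**2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


print(distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))  # 0.0
print(distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0]))  # positive: student disagrees
```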

Understanding the mechanism of reasoning

LLMs exhibit reasoning capabilities through a stack of neural network layers (in most current models, transformer blocks) that transform the input and generate responses. Each layer refines the representation produced by the layer before it, which helps capture context and construct coherent outputs.
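Within each layer of a transformer-based LLM, the operation that mixes information across positions in the input is self-attention. The sketch below is a single attention head with no learned projections and no causal mask (decoder LLMs add one so a token cannot attend to later tokens); queries, keys, and values are given directly as plain lists.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention for one head.

    Each output row is a weighted average of the value vectors, weighted by
    how well that position's query matches every key.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs


# Two keys match the query equally, so the output is the average of the values.
print(attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[0.0, 2.0], [4.0, 6.0]]))
# [[2.0, 4.0]]
```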

Learning is iterative: during training, the model's weights are repeatedly adjusted to reduce the error between its predictions and the actual training data. Through many such updates the model's reasoning capabilities improve; once training ends, the weights stay fixed unless the model is trained further.
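The core loop of "predict, measure the error, adjust the weights" can be sketched with gradient descent on a two-parameter model. This is a deliberately tiny stand-in: real LLM training uses cross-entropy loss over tokens, backpropagation through billions of parameters, and optimizers such as Adam. The function name `train_linear` and the learning rate and step count are illustrative choices.

```python
from typing import List, Tuple


def train_linear(
    data: List[Tuple[float, float]], lr: float = 0.05, steps: int = 1000
) -> Tuple[float, float]:
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Move each weight a small step against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = train_linear([(0, 1), (1, 3), (2, 5), (3, 7)])  # data follow y = 2x + 1
print(round(w, 3), round(b, 3))  # 2.0 1.0
```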

Expanding on AI thinking processes

Understanding the nuances between thinking and remembering is essential to grasp how LLMs function. While remembering involves retrieving stored information, thinking encompasses the ability to generate new ideas or conclusions based on that memory.

LLMs face a variety of reasoning problems, such as logical deductions within complex queries or the interpretation of intricate instructions. They must handle inherent uncertainty while still producing relevant responses, which makes accurate reasoning essential.

Practical examples and case studies

In real-world scenarios, LLM reasoning is useful in a range of applications. For instance, LLMs can support content recommendation, analyzing user data to suggest relevant articles. In legal tech, they help lawyers draft contracts, although their output still needs review to confirm that every legal term is represented accurately.

The lessons learned from these applications highlight the importance of context and understanding user intent in refining and adapting reasoning models for better performance.

Future of LLM reasoning

Emerging trends in reasoning models will likely see advancements fueled by continual improvements in computational power and the availability of more diverse datasets. Innovations such as hybrid models combining symbolic reasoning with neural networks can enhance LLMs’ ability to carry out complex reasoning tasks.
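One simple way such a hybrid can work is for the neural model to propose an answer and a symbolic component to check it. The sketch below is a hypothetical verifier for arithmetic claims: it parses the expression with Python's `ast` module and evaluates only `+`, `-`, `*`, `/`, and unary minus, rejecting anything else instead of calling `eval()`. The names `evaluate` and `verify_claim` are illustrative, and the model call that produces the claim is assumed.

```python
import ast
import operator

# Only these binary operators are allowed in checked expressions.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def evaluate(expr: str) -> float:
    """Evaluate a basic arithmetic expression by walking its syntax tree."""
    def walk(node: ast.AST) -> float:
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError(f"unsupported expression: {expr!r}")

    return walk(ast.parse(expr, mode="eval"))


def verify_claim(expr: str, claimed: float, tol: float = 1e-9) -> bool:
    """Accept a model's claimed result only if the symbolic check agrees."""
    return abs(evaluate(expr) - claimed) <= tol


print(verify_claim("5 + 3 * 4", 17))  # True
print(verify_claim("5 + 3 * 4", 32))  # False: the claim ignores operator precedence
```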

As we advance, challenges such as biases in datasets and the need for ethical guidelines in AI use will shape the landscape of LLM reasoning. These considerations will provide organizations with opportunities to develop responsible AI systems that augment decision-making processes.

Engagement and interactivity tools

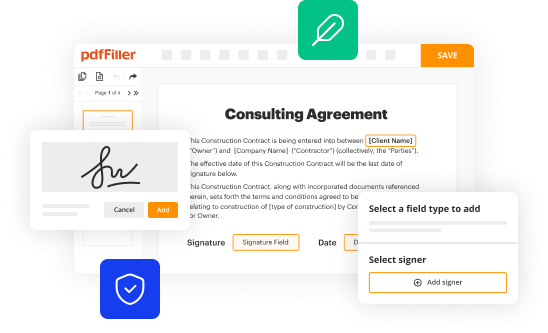

Leveraging interactive tools like those offered by pdfFiller can significantly enhance document management for individuals and teams. With features enabling seamless editing, e-signing, and collaboration, users can interactively engage with their documents.

Tools provided within pdfFiller facilitate centralized collaboration, allowing teams to manage and share documents effectively. This streamlining enhances overall productivity and communication among team members.

FAQs about LLM reasoning

Common questions about how LLM reasoning forms often revolve around its effectiveness in various domains. Users may wonder how to leverage reasoning capabilities to maximize productivity or whether specific applications are suitable for LLMs.

A recurring clarification is that LLMs, while powerful, require careful oversight, particularly in sensitive applications where human judgment is critical.