Get the free meg/fineweb-bias-man-sentencesDatasets at Hugging ...

Get, Create, Make and Sign megfineweb-bias-man-sentencesdatasets at hugging

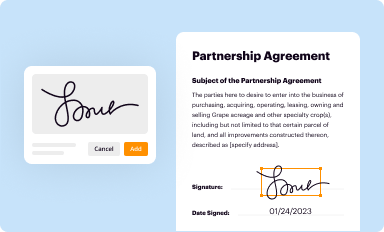

How to edit megfineweb-bias-man-sentencesdatasets at hugging online

Uncompromising security for your PDF editing and eSignature needs

Understanding the meg/fineweb-bias-man-sentences dataset at Hugging Face

Understanding bias in sentences: An overview

Bias in natural language processing (NLP) refers to systematic favoritism or prejudice in the way language models interpret or generate text. These biases often stem from the datasets on which the models are trained, which reflect historical and social inequalities. The issue matters because language models are increasingly embedded in applications that shape decisions and perceptions across diverse user groups.

Addressing bias in language models is imperative for ethical AI deployment. Without diligent scrutiny, biased outputs can perpetuate stereotypes or propagate misinformation. For instance, a biased sentence generator might provide skewed representations of gender or cultural identities, which can, in turn, affect how individuals perceive those groups. It's vital for developers and researchers to prioritize unbiased data creation and curation.

The role of datasets in mitigating bias

Datasets play a fundamental role in analyzing and mitigating biases in NLP. They serve as the foundational training material for language models, shaping their understanding and capability to generate sentences. Two main types of datasets are prominent in this field: structured datasets, such as those organized in tables and databases, and unstructured datasets, like text documents or social media posts.

Another vital factor is diversity in data representation. It’s essential to include a wide array of perspectives and linguistic styles to create a balanced model. High-quality datasets must not only illuminate biases but also present diverse examples and counter-narratives, allowing models to learn from a multitude of viewpoints. For instance, datasets that include various gender identities and ethnic backgrounds lead to more robust models capable of generating inclusive language.

Overview of the meg/fineweb-bias-man-sentences dataset

The 'meg/fineweb-bias-man-sentences' dataset is a compilation designed for analyzing sentence-level bias. It is sourced from diverse internet texts and is intended to capture a wide spectrum of human language while highlighting specific instances of bias related to gender and social roles.

This dataset comprises hundreds of thousands of sentences, categorized by form of bias, such as stereotypes, gender roles, and racial bias. Its structured format gives researchers easy access to the data. Each entry is tagged with the kind of bias it exemplifies, which supports targeted analysis and lets users find relevant examples for their studies or applications.

Techniques for analyzing bias in sentences

Analyzing bias within sentences requires robust methodologies capable of exposing underlying prejudices. Statistical methods such as frequency analysis can reveal how often certain biased phrases occur compared to neutral expressions. This quantitative approach helps establish patterns and prevalence of bias across a dataset.
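As a rough illustration of frequency analysis, the sketch below counts word tokens in a handful of sentences and reports what share of all tokens falls into each of two term groups. The term lists, function names, and sample sentences are hypothetical and chosen only for this example; a real study would use a vetted lexicon and the dataset's actual text field.

```python
import re
from collections import Counter

# Hypothetical term lists for illustration; not taken from the dataset.
MALE_TERMS = {"man", "men", "he", "him", "his"}
FEMALE_TERMS = {"woman", "women", "she", "her", "hers"}

def term_frequencies(sentences):
    """Count lowercase word tokens across all sentences."""
    counts = Counter()
    for sentence in sentences:
        counts.update(re.findall(r"[a-z']+", sentence.lower()))
    return counts

def group_rate(counts, terms):
    """Share of all tokens that belong to the given term set."""
    total = sum(counts.values())
    return sum(counts[t] for t in terms) / total if total else 0.0

# Made-up sample sentences, used only to exercise the functions.
sample = [
    "The man fixed the engine while he talked.",
    "A man should lead, and his team should follow.",
    "The woman presented her results to the board.",
]
counts = term_frequencies(sample)
male_rate = group_rate(counts, MALE_TERMS)
female_rate = group_rate(counts, FEMALE_TERMS)
```

Comparing the two rates gives a first, coarse signal of imbalance; it says nothing about context, so it is best treated as a starting point for closer reading.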

Comparative analysis is another effective technique. By juxtaposing biased sentences against their neutral counterparts, researchers can better understand how bias alters meaning or sentiment. Machine learning models, including supervised and unsupervised algorithms, also lend great assistance in bias detection; these frameworks can learn from the labeled data to identify and help eliminate bias in word choice or sentiment generation.
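One way to build a comparison sentence is counterfactual term swapping: replace each gendered term with its counterpart and score both versions with the same function. This is a swapped-in technique for illustration, not a method the dataset is known to prescribe. The swap table, the function names, and `toy_score` (a deliberately skewed stand-in for a real model) are all assumptions.

```python
import re

# Hypothetical swap table; deliberately tiny and blind to ambiguity
# (for example, "her" can correspond to either "him" or "his").
SWAPS = {"man": "woman", "woman": "man", "men": "women", "women": "men",
         "he": "she", "she": "he", "his": "her", "her": "his"}

def counterfactual(sentence):
    """Return the sentence with each listed term swapped for its counterpart."""
    def swap(match):
        word = match.group(0)
        repl = SWAPS.get(word.lower())
        if repl is None:
            return word
        # Preserve an initial capital so sentence-initial words stay well formed.
        return repl.capitalize() if word[0].isupper() else repl
    return re.sub(r"[A-Za-z]+", swap, sentence)

def compare(sentence, score):
    """Score a sentence and its counterfactual; return the pair sentence and the gap."""
    other = counterfactual(sentence)
    return other, score(sentence) - score(other)

def toy_score(sentence):
    """Stand-in for a real model score, skewed toward 'he' on purpose."""
    tokens = re.findall(r"[a-z]+", sentence.lower())
    return 1.0 if "he" in tokens else 0.5

pair, gap = compare("He should lead the team.", toy_score)
```

A nonzero gap means the scorer treats the two versions differently even though only the gendered term changed. With a real model in place of `toy_score`, averaging the gap over many sentences gives a simple comparative measure.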

Practical applications of the meg/fineweb-bias-man-sentences dataset

The 'meg/fineweb-bias-man-sentences' dataset can serve as a resource across several NLP applications. One primary use case is training bias-aware language models. By integrating this dataset, developers can build models that both recognize biased sentences and reduce how often they generate them, a step toward ethical AI practices.

The dataset can also support sentiment analysis tools by helping them separate sentiment that is actually expressed in a sentence from sentiment that a model infers because of bias. When combined with frameworks like Hugging Face Transformers, developers can apply prebuilt models to these analyses.

Step-by-step guide to using the dataset

To use the 'meg/fineweb-bias-man-sentences' dataset, start by opening its page in the Hugging Face repository. Select the version suited to your use case, then download it.
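For programmatic access, a minimal sketch using the Hugging Face `datasets` library might look like this. The repository id is taken from the page title; the split name `"train"` and the helper name `preview` are assumptions, and the dataset's column names are not documented here, so the sketch returns whole records rather than a specific field.

```python
from itertools import islice

# Repository id as it appears in the page title.
REPO_ID = "meg/fineweb-bias-man-sentences"

def preview(repo_id=REPO_ID, split="train", n=5):
    """Stream the first n records without downloading the whole dataset.

    The split name "train" is an assumption; check the dataset page.
    """
    # Imported lazily so the module loads even where `datasets` is not installed.
    from datasets import load_dataset

    stream = load_dataset(repo_id, split=split, streaming=True)
    return list(islice(stream, n))

# Example use (requires network access and `pip install datasets`):
#     for record in preview():
#         print(record)
```

Streaming avoids a full download, which is convenient for a first look at the record structure before committing to a local copy.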

After downloading, prepare the data with basic cleaning: remove noise, normalize the text, and drop duplicates. Developers should also consider anonymizing the data to meet privacy standards under applicable regulations. The next step is to implement sentence bias detection using statistical or machine learning frameworks.
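The preparation step can be sketched with the standard library alone. The example below normalizes Unicode and whitespace, masks email addresses as one crude form of anonymization, and removes exact duplicates. The function names and the `[EMAIL]` placeholder are assumptions, and the email pattern is intentionally simple; real anonymization needs a broader, reviewed approach.

```python
import re
import unicodedata

# Crude email pattern for illustration; it will miss or over-match edge cases.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def clean(sentence):
    """Normalize Unicode and whitespace, and mask email addresses."""
    text = unicodedata.normalize("NFKC", sentence)  # e.g. no-break space -> space
    text = EMAIL.sub("[EMAIL]", text)
    return re.sub(r"\s+", " ", text).strip()

def prepare(sentences):
    """Clean each sentence, drop empty results, and remove exact duplicates in order."""
    seen, out = set(), []
    for sentence in sentences:
        cleaned = clean(sentence)
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            out.append(cleaned)
    return out

raw = [
    "  A man\u00a0 wrote to bob@example.com ",
    "A man wrote to bob@example.com",
    "   ",
]
prepared = prepare(raw)
```

Deduplicating after cleaning, rather than before, catches sentences that differ only in spacing or character encoding.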

Collaborative efforts in bias reduction

Addressing bias in NLP is a collaborative effort requiring engagement between researchers, developers, and practitioners. Sharing insights and findings is paramount to building a repository of knowledge that can inform future datasets. Open-source contributions foster an inclusive landscape, encouraging diverse perspectives to enhance dataset integrity.

Consistency across various datasets is essential for achieving reliable bias detection and mitigation. Engaging in collaborative projects can help unify efforts, combining different datasets and methodologies to create a comprehensive resource for researchers tackling biases in language models.

Future trends in sentence bias mitigation

As the field of NLP continues to evolve, new tools and techniques for handling bias are emerging. Work in AI ethics and model training points toward systems that learn from user interactions and so improve their handling of bias over time.

Moreover, community involvement is a key factor in enhancing datasets. Encouraging input from diverse user groups ensures that the development of future datasets aligns with a broader spectrum of human experiences. This will ultimately lead to language models that are more equitable and representative.

Conclusion on the necessity of bias-aware data practices

In summary, addressing bias in language models is urgent. Datasets like 'meg/fineweb-bias-man-sentences' help expose biases within language and give researchers concrete material for improvement. Developers and researchers must prioritize collecting and using bias-aware datasets to build models that reflect a more inclusive society.

Continuous learning and adaptation are vital in this ever-evolving field. By remaining open to new methodologies and embracing collaborative efforts, the NLP community can advance toward reducing biases in language generation and interpretation.
