Open Source Web Crawler - FTP Directory Listing - Auburn University - ftp eng auburn


Open Source Web Crawler: A Distributed Network-Bound Data-Intensive Application
Van A. Norris, Department of Computer Science and Software Engineering, Auburn University
Technical Report CSSE04-04, April

We are not affiliated with any brand or entity on this form

Get, Create, Make and Sign open source web crawler

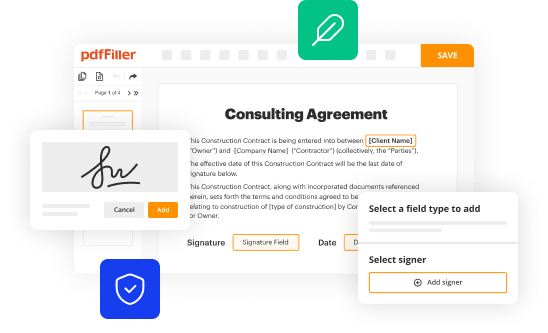




How to set up an open source web crawler:

01

Install the open source web crawler software on your computer or server. This can be done by downloading the software from the official website or using package managers if available.

02

Configure the crawler by specifying its starting point: the initial (seed) URL or URLs from which it begins crawling. This can be a single website, a list of URLs, or a sitemap.

03

Set the crawling rules and parameters to suit your requirements: how deep to crawl, how to handle duplicate content, how often to crawl, and any filters or constraints on which URLs the crawler may visit.

04

Customize the output settings by specifying how the crawled data should be stored or processed, for example exported to a database, saved to a file, or passed to other software or APIs.

05

Test and validate the configuration by running the crawler on a small subset of URLs before scaling up to a larger crawl. Monitor the crawl and make any necessary adjustments to tune performance and confirm that it produces the results you expect.
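
The configuration steps above can be sketched in Python. This is a minimal, hypothetical example and not the API of any particular crawler. `CrawlConfig`, `crawl`, and `to_jsonl` are names invented here. Page fetching is left to a caller-supplied `get_links` function, so the crawl logic (seed URLs, depth limit, duplicate handling, host filter, output) can be tried on canned data first.

```python
import json
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Iterable
from urllib.parse import urldefrag, urljoin, urlparse


@dataclass
class CrawlConfig:
    """Hypothetical settings mirroring steps 02-04 (field names are illustrative)."""
    seeds: list[str]                 # step 02: starting URL(s)
    max_depth: int = 2               # step 03: link hops allowed from a seed
    max_pages: int = 50              # step 03: small cap suited to a trial run (step 05)
    allowed_hosts: set[str] = field(default_factory=set)  # step 03: empty set = any host


def normalize(url: str) -> str:
    """Drop the #fragment so /page and /page#top count as the same URL."""
    return urldefrag(url)[0]


def crawl(config: CrawlConfig,
          get_links: Callable[[str], Iterable[str]]) -> list[tuple[str, int]]:
    """Breadth-first crawl returning (url, depth) pairs in visit order.

    get_links(url) is supplied by the caller: it should fetch the page and
    return the href values found on it (relative or absolute).
    """
    frontier: deque = deque()
    seen: set[str] = set()
    for seed in config.seeds:
        url = normalize(seed)
        if url not in seen:
            seen.add(url)
            frontier.append((url, 0))

    visited: list[tuple[str, int]] = []
    while frontier and len(visited) < config.max_pages:
        url, depth = frontier.popleft()
        visited.append((url, depth))
        if depth >= config.max_depth:
            continue  # keep the page, but do not follow its links
        for href in get_links(url):
            link = normalize(urljoin(url, href))
            if config.allowed_hosts and urlparse(link).hostname not in config.allowed_hosts:
                continue  # outside the configured scope
            if link not in seen:  # duplicate handling
                seen.add(link)
                frontier.append((link, depth + 1))
    return visited


def to_jsonl(visited: list[tuple[str, int]]) -> str:
    """Step 04: one JSON object per line, ready to be written to a file."""
    return "".join(json.dumps({"url": u, "depth": d}) + "\n" for u, d in visited)
```

In a real crawl, `get_links` would combine an HTTP fetch with an HTML link parser. Keeping it injectable means the small trial in step 05 can run against a dictionary of fake pages before any network traffic is generated.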

Who needs an open-source web crawler:

01

Researchers and academics: Open-source web crawlers can be useful for conducting research, collecting data, or studying web-related phenomena. They provide a scalable and customizable solution for gathering web content.

02

SEO professionals: Web crawlers can help SEO practitioners gather data on competitor websites, analyze page rankings, identify broken links, and perform various SEO audits to optimize websites for search engines.

03

Data scientists and analysts: Open-source web crawlers enable data scientists and analysts to collect and process large volumes of web data for analysis, trend identification, or building machine learning models.

04

Web developers and testers: Web crawlers can be used by developers and testers to validate website functionality, identify broken links, check for accessibility issues, and test user experience on different devices.

Overall, open-source web crawlers have a wide range of applications and can be beneficial for anyone who needs to gather, analyze, or process data from the web.
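
Several of the uses above, such as finding broken links during an SEO audit or a site test, come down to requesting each URL and checking the HTTP status code. The following minimal sketch uses Python's standard `urllib.request`. It assumes a HEAD request is acceptable to the target server; some servers answer HEAD with 405, and those would need a GET fallback. The function names and the user-agent string are hypothetical. The status lookup is passed in as a parameter so the logic can be checked offline.

```python
from typing import Callable, Dict, Iterable, Optional
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def http_status(url: str, timeout: float = 10.0) -> Optional[int]:
    """Return the HTTP status code for url, or None if no response was received."""
    request = Request(url, method="HEAD",
                      headers={"User-Agent": "example-link-checker/0.1"})  # hypothetical UA
    try:
        with urlopen(request, timeout=timeout) as response:
            return response.status  # urlopen follows redirects, so this is the final status
    except HTTPError as err:
        return err.code             # 4xx/5xx responses are raised as HTTPError
    except OSError:
        return None                 # e.g. DNS failure, refused connection, timeout


def find_broken_links(urls: Iterable[str],
                      status: Callable[[str], Optional[int]] = http_status
                      ) -> Dict[str, Optional[int]]:
    """Map each broken URL to its status code (None means unreachable)."""
    broken = {}
    for url in urls:
        code = status(url)
        if code is None or code >= 400:
            broken[url] = code
    return broken
```

Treating any status of 400 or above as broken is a simple rule. A real audit might also flag long redirect chains or report 5xx errors separately, since those are often temporary.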


Frequently asked questions


What is an open source web crawler?

An open source web crawler is a program, with publicly available source code, that automatically navigates websites and gathers data from them. It visits web pages, typically by following the links it finds on each page, and extracts information such as links, images, text, and other relevant data.
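
The following minimal sketch shows the extraction step concretely, using Python's standard-library `html.parser`. `LinkExtractor` is a hypothetical name. It only parses HTML that has already been downloaded. A production crawler would also need fetching, character-encoding detection, and more robust handling of unusual markup.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects link URLs, image URLs and text fragments from one HTML page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url  # used to resolve relative URLs
        self.links = []
        self.images = []
        self.text = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a" and attributes.get("href"):
            self.links.append(urljoin(self.base_url, attributes["href"]))
        elif tag == "img" and attributes.get("src"):
            self.images.append(urljoin(self.base_url, attributes["src"]))

    def handle_data(self, data):
        # Note: this also captures text inside <script> and <style>;
        # a fuller version would skip those elements.
        if data.strip():
            self.text.append(data.strip())


if __name__ == "__main__":
    page = LinkExtractor("https://example.com/docs/")
    page.feed('<p>Hello <a href="intro.html">intro</a> <img src="/logo.png"></p>')
    page.close()
    print(page.links)   # ['https://example.com/docs/intro.html']
    print(page.images)  # ['https://example.com/logo.png']
    print(page.text)    # ['Hello', 'intro']
```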

Are there legal requirements for using an open source web crawler?

There is no filing requirement for open source web crawlers; they are typically free for anyone to use. However, if you use a crawler for commercial purposes or to collect personal data, you may need to comply with applicable legal and privacy regulations, which can include informing people about the data you collect.
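
Separately from any legal obligations, crawlers conventionally honor a site's `robots.txt` file, which follows the Robots Exclusion Protocol published as RFC 9309. Following it is a courtesy convention and does not by itself satisfy privacy or data-protection law. Python's standard library provides `urllib.robotparser` for reading these files. The sketch below parses an invented sample file offline; against a live site you would call `set_url()` and `read()` instead. The sample rules and the `example-bot` user agent are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. A live crawler would instead call
# parser.set_url("https://<site>/robots.txt") followed by parser.read().
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def load_policy(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without touching the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    policy = load_policy(SAMPLE_ROBOTS_TXT)
    print(policy.can_fetch("example-bot", "https://example.com/private/report"))  # False
    print(policy.can_fetch("example-bot", "https://example.com/docs/"))           # True
    print(policy.crawl_delay("example-bot"))                                      # 5
```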

How do I set up an open source web crawler?

Unlike a form, an open source web crawler is not filled out; it is set up and configured. You typically specify the websites or pages the crawler should visit, define the data to extract, and set any additional parameters or filters, usually through command-line arguments or by editing the crawler's configuration files.
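
Here is a minimal sketch of combining arguments with a configuration file, using Python's `argparse` and `json` modules. Built-in defaults come first, then an optional JSON file, and any command-line flag overrides both. The flag names (`--config`, `--seed`, `--max-depth`, `--include`, `--output`) and the default values are hypothetical and are not taken from any particular crawler.

```python
import argparse
import json
from pathlib import Path
from typing import List, Optional


def parse_settings(argv: Optional[List[str]] = None) -> dict:
    parser = argparse.ArgumentParser(description="Hypothetical crawler settings")
    parser.add_argument("--config", type=Path, help="JSON file with default settings")
    parser.add_argument("--seed", action="append", help="starting URL (repeatable)")
    parser.add_argument("--max-depth", type=int, help="link hops to follow from a seed")
    parser.add_argument("--include", action="append",
                        help="substring a URL must contain to be crawled (repeatable)")
    parser.add_argument("--output", help="file to write results to")
    args = parser.parse_args(argv)

    # Built-in defaults, then the config file, then explicit flags (highest priority).
    settings = {"seeds": [], "max_depth": 2, "include": [], "output": "crawl.jsonl"}
    if args.config:
        settings.update(json.loads(args.config.read_text(encoding="utf-8")))
    overrides = {"seeds": args.seed, "max_depth": args.max_depth,
                 "include": args.include, "output": args.output}
    settings.update({k: v for k, v in overrides.items() if v is not None})

    if not settings["seeds"]:
        parser.error("no seed URL given (use --seed or a config file)")
    return settings
```

For example, `--config site.json --max-depth 1` would take every setting from `site.json` (a hypothetical file) except the depth.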

What is the purpose of an open source web crawler?

The purpose of an open source web crawler varies with the needs of the user. Common purposes include data gathering, web indexing, content analysis, website monitoring, search engine optimization, and research. A crawler lets users automate the process of exploring, retrieving, and analyzing web content on a large scale.

Is there a reporting requirement for open source web crawlers?

There is typically no formal reporting requirement for using an open source web crawler for data gathering and analysis. However, if you collect data that is subject to legal regulations or privacy restrictions, you may need to ensure compliance with those rules. This may include obtaining consent from website owners or users, anonymizing or encrypting the collected data, and otherwise protecting user privacy.
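
One way to act on the anonymization advice for crawled text containing email addresses is to replace each address with a keyed hash before storage. This is pseudonymization, which is weaker than true anonymization, because anyone holding the key can test candidate addresses. The sketch below works under that assumption. The regular expression, the token format, and the `pseudonymize_emails` name are illustrative choices, not a compliance recipe.

```python
import hashlib
import hmac
import re

# Deliberately simple pattern; it will miss some valid addresses.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize_emails(text: str, key: bytes) -> str:
    """Replace each email address in text with a keyed-hash token.

    The same address (compared case-insensitively) always maps to the same
    token, so records can still be linked, but the stored text no longer
    contains the address itself.
    """
    def token(match):
        digest = hmac.new(key, match.group(0).lower().encode("utf-8"), hashlib.sha256)
        return "<email:" + digest.hexdigest()[:12] + ">"

    return EMAIL_RE.sub(token, text)
```

The key itself is an assumption of this sketch. It should be kept out of the crawled dataset, for example loaded from a secrets store, or the pseudonymization offers little protection.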

