Get the free Inter-rater Reliability for Student Teaching Performance Assessment - niagara

This document outlines the procedure and assessments for evaluating the reliability of raters in assessing student teaching performance within an educational context. It details the participating

We are not affiliated with any brand or entity on this form

Get, Create, Make and Sign inter-rater reliability for student

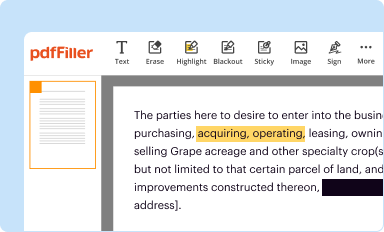

Edit your inter-rater reliability for student form online

Type text, complete fillable fields, insert images, highlight or blackout data for discretion, add comments, and more.

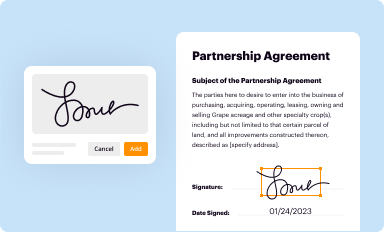

Add your legally-binding signature

Draw or type your signature, upload a signature image, or capture it with your digital camera.

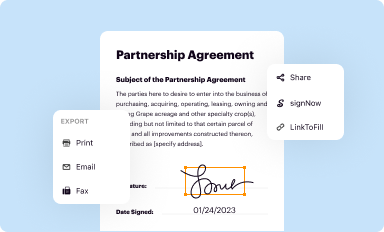

Share your form instantly

Email, fax, or share your inter-rater reliability for student form via URL. You can also download, print, or export forms to your preferred cloud storage service.

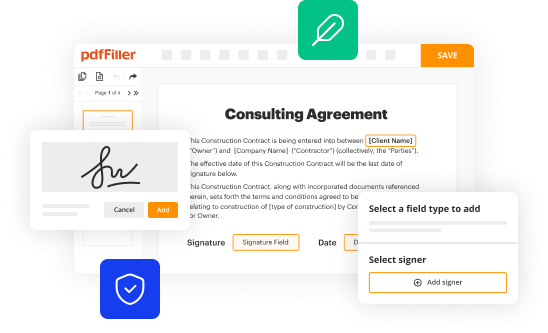

Editing inter-rater reliability for student online

To use the professional PDF editor, follow the steps below:

1. Create an account. Begin by choosing Start Free Trial and, if you are a new user, set up a profile.

2. Upload a document. Select Add New on your Dashboard and add a file in one of the following ways: upload it from your device, or import it from the cloud, the web, or internal mail. Then click Start editing.

3. Edit inter-rater reliability for student. Rearrange and rotate pages, add and edit text, and use additional tools. To save changes and return to your Dashboard, click Done. The Documents tab lets you merge, split, lock, or unlock files.

4. Get your file. Select your file from the documents list and choose an export method. You can save it as a PDF, email it, or upload it to the cloud.

With pdfFiller, it's always easy to work with documents.

Uncompromising security for your PDF editing and eSignature needs

Your private information is safe with pdfFiller. We employ end-to-end encryption, secure cloud storage, and advanced access control to protect your documents and maintain regulatory compliance.

How to fill out Inter-rater Reliability for Student Teaching Performance Assessment

01. Identify the assessment criteria for student teaching performance.
02. Select the raters who will evaluate the student teachers.
03. Provide training for all raters to ensure a consistent understanding of the evaluation criteria.
04. Have raters independently assess the same sample of student teachers using the established criteria.
05. Collect and compile the ratings from each rater.
06. Calculate inter-rater reliability using an appropriate statistical method, such as Cohen's kappa or the intraclass correlation coefficient (ICC).
07. Analyze the results to determine the level of agreement among raters.
08. Discuss any discrepancies in ratings and refine the assessment process as needed.
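Step 06 can be illustrated with a minimal Python sketch of Cohen's kappa for two raters who assign categorical rubric levels to the same student teachers. Kappa compares the observed agreement with the agreement expected by chance from each rater's score distribution. The function name `cohens_kappa` and the sample scores are hypothetical, chosen only for illustration. As written it applies to exactly two raters; designs with more raters would need a different statistic, such as Fleiss' kappa or an ICC.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same items on a categorical scale."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    n = len(rater_a)

    # Observed agreement: share of items that received the same score.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: for each category, the product of the two raters'
    # marginal proportions, summed over categories (missing keys count as 0).
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)

    if p_chance == 1:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical rubric levels (1-4) for ten student teachers.
rater_a = [3, 4, 2, 3, 3, 4, 1, 2, 3, 4]
rater_b = [3, 4, 2, 2, 3, 4, 1, 3, 3, 4]
print(round(cohens_kappa(rater_a, rater_b), 3))  # → 0.714
```

On this sample the raters agree on 8 of 10 student teachers (80%), but kappa is about 0.714 because part of that agreement would be expected by chance alone.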

Who needs Inter-rater Reliability for Student Teaching Performance Assessment?

01. Educational institutions that run student teaching programs.
02. Teacher education faculty who need to ensure fair and consistent evaluation of student teachers.
03. Administrators overseeing teacher training programs.
04. Accrediting bodies that require evidence of reliable assessment methods.
05. Policy makers interested in the effectiveness of teacher training programs.

People Also Ask

What is interrater reliability in assessment?

Inter-rater reliability (IRR) is a measure of how consistently different raters score the same individuals using an assessment instrument.

What is inter-rater reliability in teaching?

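In teaching, inter-rater reliability refers to how consistently different teachers or evaluators score the same work or performance. Some providers also offer it as a certification process: Teaching Strategies, for example, runs an online interrater reliability certification in which teachers evaluate sample child portfolios and compare their ratings with those of Teaching Strategies' master raters.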

What is an example of inter-rater reliability in assessment?

The simplest example is percentage agreement, which calculates the proportion of instances in which raters agree and so directly reflects how often their judgments align. For example, if two raters agree in their judgments 85% of the time, their percentage agreement is 85%.
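Percentage agreement can be sketched in a few lines of Python, assuming two raters score the same items in the same order. The function name `percent_agreement` and the 20-item sample data are hypothetical; the sample is constructed so the raters agree on 17 of 20 items, reproducing the 85% figure.

```python
def percent_agreement(rater_a, rater_b):
    """Proportion of items on which two raters assign exactly the same score."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


# Hypothetical ratings for 20 items: the raters agree on the first 17
# and disagree on the last 3.
rater_a = ["meets"] * 17 + ["exceeds", "meets", "below"]
rater_b = ["meets"] * 17 + ["meets", "exceeds", "meets"]
print(percent_agreement(rater_a, rater_b))  # → 0.85
```

Percentage agreement does not account for agreement that would occur by chance, which is why chance-corrected statistics such as Cohen's kappa are often reported alongside it.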

What is inter-rater reliability in language testing?

Inter-rater reliability is a measure of the extent to which different judges or raters agree in their assessment decisions. It matters in language testing because two teachers will not necessarily interpret the same answers in the same way.

Are the scores of students using performance based assessment always reliable?

Not necessarily. The reliability and validity of scores from a performance assessment are limited by the quality of the scoring key. Scoring keys are tools that researchers develop for scoring students' products or performances.

What is an example of inter-rater reliability?

Inter-rater reliability is a measure of the level of agreement between judges. For example, a job candidate should have the same chance of moving forward in the hiring process no matter when or by whom they are considered, and inter-rater reliability helps show whether that is the case.

pdfFiller's FAQs

Below is a list of the most common customer questions. If you can’t find an answer to your question, please don’t hesitate to reach out to us.

What is Inter-rater Reliability for Student Teaching Performance Assessment?

Inter-rater Reliability for Student Teaching Performance Assessment is a measure of the degree to which different evaluators or raters agree in their assessments of the performance of student teachers. It ensures that the evaluation process is consistent and that assessments are not significantly influenced by individual raters' biases.

Who is required to file Inter-rater Reliability for Student Teaching Performance Assessment?

Educational institutions and teacher preparation programs that conduct student teaching assessments are typically required to file Inter-rater Reliability reports. This includes the faculty and staff involved in evaluating student teaching performance.

How to fill out Inter-rater Reliability for Student Teaching Performance Assessment?

To fill out the Inter-rater Reliability for Student Teaching Performance Assessment, evaluators must independently assess the same student teaching performance using a standardized rubric or criteria. After scoring, a statistical analysis is conducted to calculate the level of agreement among the raters, which is then documented in the report.
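For numeric rubric scores, the statistical analysis can be sketched with an intraclass correlation coefficient. The Python sketch below computes ICC(2,1) in Shrout and Fleiss notation (two-way random effects, absolute agreement, single rater), assuming every rater scores every student teacher. This form is an illustrative choice; a program may need a different ICC form depending on its rating design. The function name `icc_2_1` is hypothetical. The sample table is the six-subject, four-judge example from Shrout and Fleiss (1979), for which ICC(2,1) is about 0.29.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` is a list of rows, one per student teacher, each holding one
    numeric score per rater (every rater scores every student teacher).
    """
    n = len(ratings)      # number of subjects (student teachers)
    k = len(ratings[0])   # number of raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need a complete n x k table with n >= 2 and k >= 2")

    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Two-way ANOVA sums of squares.
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)  # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)  # between raters
    ss_error = ss_total - ss_rows - ss_cols                 # residual

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Six subjects rated by four judges (Shrout and Fleiss, 1979).
table = [
    [9, 2, 5, 8],
    [6, 1, 3, 2],
    [8, 4, 6, 8],
    [7, 1, 2, 6],
    [10, 5, 6, 9],
    [6, 2, 4, 7],
]
print(round(icc_2_1(table), 2))  # → 0.29
```

The low value reflects that the judges rank the subjects fairly consistently but differ systematically in how harshly they score; absolute-agreement forms such as ICC(2,1) penalize those rater mean differences.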

What is the purpose of Inter-rater Reliability for Student Teaching Performance Assessment?

The purpose of Inter-rater Reliability for Student Teaching Performance Assessment is to ensure that the evaluation process is reliable, which in turn supports the validity of the resulting scores. It helps identify inconsistencies in assessments, improves rater training, and enhances the overall quality of the teacher preparation program.

What information must be reported on Inter-rater Reliability for Student Teaching Performance Assessment?

The report must include the number of raters, the assessment scores assigned by each rater, the calculation of reliability coefficients (such as Cohen's Kappa or Intraclass Correlation Coefficient), and any observed discrepancies or suggestions for improving rater agreement.
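The reporting items listed above can be gathered with a short Python sketch. It assumes scores are stored as a mapping from each rater's name to a list of numeric rubric scores in a shared student-teacher order; the function name `summarize_ratings`, the `max_spread` tolerance, and the sample data are hypothetical. It reports the number of raters, the scores, pairwise exact agreement, and the items whose scores differ by more than the tolerance. Reliability coefficients such as Cohen's kappa or an ICC would be computed separately and added to the report.

```python
from itertools import combinations


def summarize_ratings(scores, max_spread=1):
    """Collect report fields from a table of ratings.

    `scores` maps each rater's name to a list of scores, one per student
    teacher, in the same order for every rater. `max_spread` is a
    hypothetical tolerance: items whose highest and lowest scores differ
    by more than this are flagged as discrepancies for discussion.
    """
    raters = sorted(scores)
    n_items = len(scores[raters[0]])
    if any(len(scores[r]) != n_items for r in raters):
        raise ValueError("every rater must score the same items")

    # Exact agreement for each pair of raters.
    pairwise = {}
    for a, b in combinations(raters, 2):
        matches = sum(x == y for x, y in zip(scores[a], scores[b]))
        pairwise[(a, b)] = matches / n_items

    # Indices of items whose score range across raters exceeds the tolerance.
    discrepant = []
    for i in range(n_items):
        item_scores = [scores[r][i] for r in raters]
        if max(item_scores) - min(item_scores) > max_spread:
            discrepant.append(i)

    return {
        "number_of_raters": len(raters),
        "scores": scores,
        "pairwise_agreement": pairwise,
        "discrepant_items": discrepant,
    }


# Hypothetical rubric scores (1-4) from three raters for five student teachers.
summary = summarize_ratings({
    "Rater A": [3, 4, 2, 3, 1],
    "Rater B": [3, 4, 2, 2, 3],
    "Rater C": [3, 3, 2, 3, 1],
})
print(summary["discrepant_items"])  # → [4]
```

Flagging by score range keeps the rule easy to explain in a rater calibration meeting; a program might instead flag any disagreement, depending on how strict its rubric is.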

This form may include fields for payment information. Data entered in these fields is not covered by PCI DSS compliance.