Get the free Map/Reduce Tutorial - lamsade dauphine

This document comprehensively describes all user-facing facets of the Hadoop Map/Reduce framework and serves as a tutorial.

We are not affiliated with any brand or entity on this form

Get, Create, Make and Sign mapreduce tutorial - lamsade

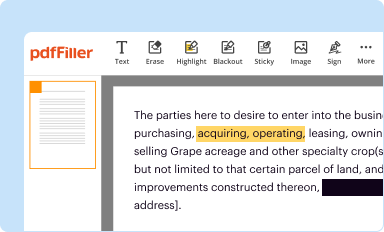

Edit your mapreduce tutorial - lamsade form online

Type text, complete fillable fields, insert images, highlight or blackout data for discretion, add comments, and more.

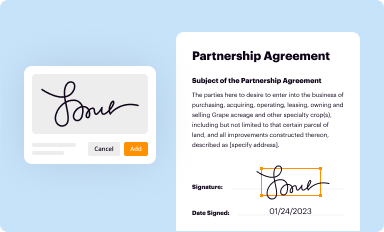

Add your legally-binding signature

Draw or type your signature, upload a signature image, or capture it with your digital camera.

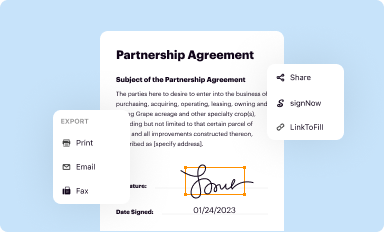

Share your form instantly

Email, fax, or share your mapreduce tutorial - lamsade form via URL. You can also download, print, or export forms to your preferred cloud storage service.

Editing mapreduce tutorial - lamsade online

Use the instructions below to start using our professional PDF editor:

1

Register the account. Begin by clicking Start Free Trial and create a profile if you are a new user.

2

Simply add a document. Select Add New from your Dashboard and import a file into the system by uploading it from your device or importing it via the cloud, online, or internal mail. Then click Begin editing.

3

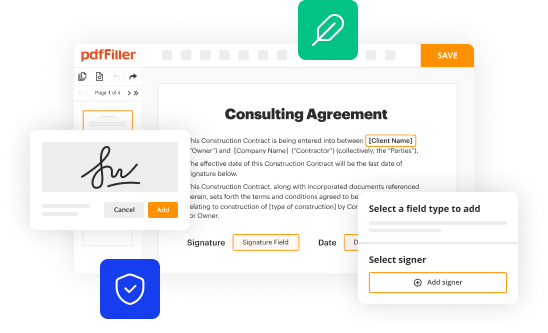

Edit mapreduce tutorial - lamsade. Rearrange and rotate pages, insert new and alter existing texts, add new objects, and take advantage of other helpful tools. Click Done to apply changes and return to your Dashboard. Go to the Documents tab to access merging, splitting, locking, or unlocking functions.

4

Save your file. Select it from your list of records. Then, move your cursor to the right toolbar and choose one of the exporting options. You can save it in multiple formats, download it as a PDF, send it by email, or store it in the cloud, among other things.

pdfFiller makes dealing with documents a breeze. Create an account to find out!

Uncompromising security for your PDF editing and eSignature needs

Your private information is safe with pdfFiller. We employ end-to-end encryption, secure cloud storage, and advanced access control to protect your documents and maintain regulatory compliance.

How to fill out mapreduce tutorial - lamsade

How to work through the Map/Reduce Tutorial

1. Begin by understanding the basics of Map/Reduce and its core concepts.
2. Set up your development environment with the necessary libraries and frameworks.
3. Follow the tutorial step-by-step, starting with the Map function and its input-output process.
4. Implement the Reduce function, ensuring it correctly aggregates the results from the Map function.
5. Test the Map/Reduce job with sample data to validate its functionality.
6. Optimize code and performance as needed based on the results.
7. Review any advanced topics or best practices mentioned in the tutorial.
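
As a rough illustration of the map, reduce, and test steps in this list, here is a minimal in-memory word-count sketch in plain Python. It does not use Hadoop; the names map_fn, reduce_fn, and run_job are hypothetical helpers chosen for this example, and the sample lines are made up.

```python
from collections import defaultdict


def map_fn(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def reduce_fn(word, counts):
    """Reduce: aggregate all values that were emitted for one key."""
    return word, sum(counts)


def run_job(lines):
    """Hypothetical driver: map every line, group values by key, reduce each group."""
    grouped = defaultdict(list)
    for line in lines:
        for key, value in map_fn(line):
            grouped[key].append(value)
    # Keys are processed in sorted order, similar to what a reducer would see.
    return dict(reduce_fn(key, values) for key, values in sorted(grouped.items()))


# Sample data used to validate the job (invented for this sketch).
sample = ["the quick brown fox", "the lazy dog", "The fox"]
result = run_job(sample)
print(result)
```

Checking the result against a count done by hand on the sample lines is a quick way to validate both functions before running on real data.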

Who needs Map/Reduce Tutorial?

1. Data engineers looking to process large datasets efficiently.
2. Developers wanting to understand distributed computing concepts.
3. Analysts who need to analyze big data applications.
4. Students or professionals learning about big data technologies.

People Also Ask about

Is MapReduce deprecated?

At Google, the MapReduce model is now officially obsolete; the newer data processing models in use there are called Flume (for the processing pipeline definition) and MillWheel (for the real-time dataflow orchestration).

Is MapReduce outdated?

That's not because MapReduce itself is outdated, but rather because the problems that it solves are situations we now try to avoid. Hadoop is great when you want to do all your processing on one big cluster, and the data is already there.

Why did Google stop using MapReduce?

The main reason is that MapReduce was hindering Google's ability to provide near-real-time updates to its index, so the company migrated its Search infrastructure to a Bigtable distributed database.

What are the steps involved in MapReduce?

The input data is first split into smaller blocks, and the map step processes each block. After all the mappers complete processing, the framework shuffles and sorts the results before passing them on to the reducers, which run the reduce step. Combine and partition steps sit between the two, so a full job runs in the order map, combine, partition, reduce.
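
The stages named in this answer can be sketched in a few lines of plain Python. This is only an in-memory illustration under stated assumptions: the function names, the two input splits, and the byte-sum partitioner are all hypothetical and are not how Hadoop implements these stages.

```python
from collections import defaultdict
from itertools import groupby


def map_phase(split):
    """Map: emit (word, 1) for each word in one input split."""
    return [(word, 1) for line in split for word in line.split()]


def combine(pairs):
    """Combine: pre-aggregate one mapper's output to cut shuffle traffic."""
    local = defaultdict(int)
    for key, value in pairs:
        local[key] += value
    return list(local.items())


def partition(key, num_reducers):
    """Partition: pick a reducer for a key (toy deterministic byte sum)."""
    return sum(key.encode("utf-8")) % num_reducers


def shuffle_and_sort(mapper_outputs, num_reducers):
    """Route every pair to its reducer's bucket, then sort each bucket by key."""
    buckets = [[] for _ in range(num_reducers)]
    for pairs in mapper_outputs:
        for key, value in pairs:
            buckets[partition(key, num_reducers)].append((key, value))
    return [sorted(bucket) for bucket in buckets]


def reduce_phase(bucket):
    """Reduce: sum the values of each run of equal keys in a sorted bucket."""
    return [
        (key, sum(value for _, value in group))
        for key, group in groupby(bucket, key=lambda pair: pair[0])
    ]


# Two made-up input splits, each handled by its own mapper.
splits = [["a b a", "c a"], ["b b c"]]
mapper_outputs = [combine(map_phase(split)) for split in splits]
buckets = shuffle_and_sort(mapper_outputs, num_reducers=2)
output = [reduce_phase(bucket) for bucket in buckets]
print(output)
```

The combiner here reuses the same summing logic as the reducer, which only works because addition is associative and commutative; an aggregation without those properties would need a different combiner or none at all.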

What is MapReduce explained simply?

This is a fairly simple concept to understand. There is a map function that transforms the data into key-value pairs. The key-value pairs are shuffled around and reorganized. They are then...

What has replaced MapReduce?

Spark was originally built around the primitives of MapReduce, and you can still see that in the description of its operations (exchange, collect). However, Spark and the other modern frameworks realized that users did not care about mapping and reducing; they wanted higher-level primitives (filtering, joins, and so on).
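
To see why higher-level primitives are attractive, consider what a join looks like when written directly as map and reduce functions. The sketch below is a plain-Python, in-memory version of a reduce-side join; the datasets and every function name are invented for illustration and do not come from Spark or Hadoop.

```python
from collections import defaultdict

# Made-up input datasets: (user_id, name) and (user_id, item).
users = [(1, "ada"), (2, "grace"), (3, "linus")]
orders = [(1, "book"), (2, "laptop"), (1, "pen"), (4, "desk")]


def map_users(record):
    """Map: key each user by id and tag the value with its source."""
    user_id, name = record
    return user_id, ("user", name)


def map_orders(record):
    """Map: key each order by user id and tag the value with its source."""
    user_id, item = record
    return user_id, ("order", item)


def reduce_join(user_id, tagged_values):
    """Reduce: pair every user name with every order sharing the same key."""
    names = [value for tag, value in tagged_values if tag == "user"]
    items = [value for tag, value in tagged_values if tag == "order"]
    return [(user_id, name, item) for name in names for item in items]


def join(user_records, order_records):
    """Hypothetical driver: map both inputs, group by key, reduce each group."""
    grouped = defaultdict(list)
    mapped = [map_users(u) for u in user_records] + [map_orders(o) for o in order_records]
    for key, value in mapped:
        grouped[key].append(value)
    rows = []
    for key in sorted(grouped):
        rows.extend(reduce_join(key, grouped[key]))
    return rows


joined = join(users, orders)
# A filter, by contrast, needs only a map-side predicate.
laptops = [row for row in joined if row[2] == "laptop"]
print(joined, laptops)
```

Tagging values by source and untangling them again in the reducer is exactly the kind of bookkeeping that a single join operator in a higher-level framework hides from the user.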

Is MapReduce still used?

At Google, the MapReduce model is now officially obsolete; the newer data processing models in use there are called Flume (for the processing pipeline definition) and MillWheel (for the real-time dataflow orchestration). They are known externally as Cloud Dataflow / Apache Beam.

How do I run a MapReduce job?

You can run a MapReduce job with a single method call: submit() on a Job object (you can also call waitForCompletion(), which submits the job if it hasn't been submitted already, then waits for it to finish). This method call conceals a great deal of processing behind the scenes.
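
The relationship between the two calls can be illustrated with a toy Python class. This is not Hadoop's Job API: ToyJob, its method names, and its return value are assumptions made purely to mirror the "submit if needed, then wait" behavior described above.

```python
from concurrent.futures import ThreadPoolExecutor


class ToyJob:
    """Hypothetical stand-in for a job object; not the Hadoop Job class."""

    def __init__(self, work):
        self._work = work  # callable holding the job's processing
        self._executor = ThreadPoolExecutor(max_workers=1)
        self._future = None  # set once the job has been submitted

    def submit(self):
        """Start the job in the background and return immediately."""
        if self._future is None:  # toy choice: a repeated submit is a no-op
            self._future = self._executor.submit(self._work)

    def wait_for_completion(self):
        """Submit the job if that has not happened yet, then block until done."""
        if self._future is None:
            self.submit()
        try:
            self._future.result()  # blocks until the work finishes
            return True
        except Exception:
            return False
        finally:
            self._executor.shutdown()


results = []
job = ToyJob(lambda: results.append(sum(range(10))))
succeeded = job.wait_for_completion()  # no explicit submit() needed
print(succeeded, results)
```

Calling submit() alone would let the caller continue with other work while the job runs, whereas wait_for_completion() suits a driver script that should not exit until the job is finished.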

What is Map/Reduce Tutorial?

Map/Reduce Tutorial is a guide that explains the principles and techniques of the MapReduce programming model, which is used for processing large data sets by dividing tasks into manageable pieces that can be executed in parallel.

Who should use the Map/Reduce Tutorial?

Typically, individuals and organizations that intend to use the MapReduce programming model for data processing and analysis, particularly in environments like Hadoop, are encouraged to reference the Map/Reduce Tutorial.

How do you work through the Map/Reduce Tutorial?

To work through the Map/Reduce Tutorial, follow the structured steps it outlines: understand the Map and Reduce functions, implement them in code, and test the solution with sample data sets.

What is the purpose of Map/Reduce Tutorial?

The purpose of the Map/Reduce Tutorial is to educate users on how to efficiently use the MapReduce framework for distributed data processing, helping them to address complex data analysis challenges.

What information does the Map/Reduce Tutorial cover?

The Map/Reduce Tutorial covers information such as the data input format, the mapping and reducing functions, performance metrics, and the expected output format after processing.
