The Ravens are heading back to Ocean City, MD, for the 5th annual Beach Bash presented by Miller Lite, from Thursday, June 2nd, to Saturday, June 4th. Back by popular demand is our Beach Bash Bar Crawl.

We are not affiliated with any brand or entity on this form.

Get, Create, Make and Sign crawl

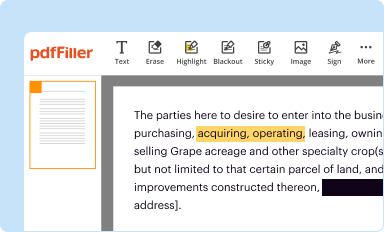

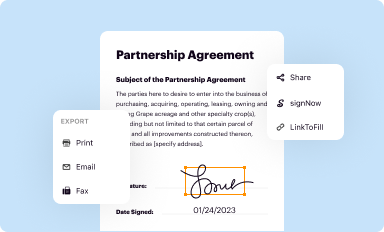

Edit your crawl form online

Type text, complete fillable fields, insert images, highlight or blackout data for discretion, add comments, and more.

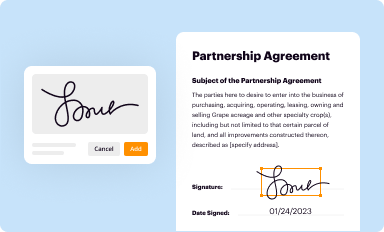

Add your legally binding signature

Draw or type your signature, upload a signature image, or capture it with your digital camera.

Share your form instantly

Email, fax, or share your crawl form via URL. You can also download, print, or export forms to your preferred cloud storage service.

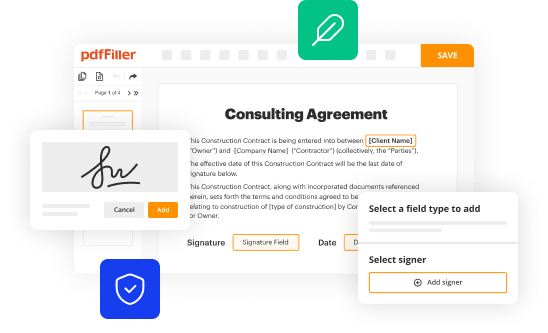

Editing crawl online

Use the instructions below to start using our professional PDF editor:

1. Create an account. Begin by choosing Start Free Trial and, if you are a new user, establish a profile.
2. Upload a file. Select Add New on your Dashboard and upload a file from your device, or import it from the cloud, online, or internal mail. Then click Edit.
3. Edit crawl. Rearrange and rotate pages, add and edit text, and use additional tools. To save changes and return to your Dashboard, click Done. The Documents tab lets you merge, divide, lock, or unlock files.
4. Get your file. Select your file from the documents list and pick your export method. You can save it as a PDF, email it, or upload it to the cloud.

Dealing with documents is always simple with pdfFiller.

Uncompromising security for your PDF editing and eSignature needs

Your private information is safe with pdfFiller. We employ end-to-end encryption, secure cloud storage, and advanced access control to protect your documents and maintain regulatory compliance.

How to fill out crawl

1. Identify the purpose of the crawl: determine the specific information or data you want to gather.
2. Choose a tool or library that suits your requirements. Common options include Scrapy (a crawling framework), Beautiful Soup (an HTML parsing library, usually paired with an HTTP client), and Selenium (browser automation, useful for pages that render their content with JavaScript).
3. Define the scope of the crawl: decide which websites or web pages you want to crawl and extract data from.
4. Configure the crawl, including the maximum crawl depth (how many links away from the starting page to follow) and the request rate (how long to wait between requests).
5. Write the crawl script or program with the chosen tool. It should specify the crawling logic, the data extraction methods, and how to handle errors such as timeouts, redirects, and missing pages.
6. Run the crawl and monitor its progress, watching for errors or issues that arise along the way.
7. Extract and analyze the crawled data, processing it according to your specific needs and objectives.
8. Store or present the data in a suitable format, such as a database, a CSV file, or a visualization, depending on its intended use.
9. Re-run and maintain the crawl regularly so the extracted data stays accurate and current.
10. Continuously improve the process by optimizing the script, refining the extraction methods, and adjusting the crawl configuration as needed.
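The workflow above (scope, depth and rate limits, error handling, monitoring) can be illustrated with a small breadth-first crawler built only on the Python standard library. This is a minimal sketch under stated assumptions, not how Scrapy or any other named tool works internally: `crawl`, `LinkParser`, and `fetch_url` are hypothetical names, the crawl is assumed to stay on the start URL's host, and a fixed delay stands in for a real rate limiter.

```python
import time
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collects the href values of <a> tags on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch_url(url: str) -> str:
    """Hypothetical default fetcher: downloads a page with urllib."""
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def crawl(
    start_url: str,
    max_depth: int = 2,          # step 4: how many links away from the start page to follow
    delay_seconds: float = 1.0,  # step 4: pause between requests (assumed fixed delay)
    fetch: Callable[[str], str] = fetch_url,
) -> Dict[str, List[str]]:
    """Breadth-first crawl restricted to the start URL's host (step 3).

    Returns a mapping from each successfully fetched URL to the links found on it.
    """
    allowed_host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([(start_url, 0)])
    results: Dict[str, List[str]] = {}

    while queue:
        url, depth = queue.popleft()
        try:
            html = fetch(url)
        except Exception as exc:  # step 6: report the failure and keep crawling
            print(f"skipping {url}: {exc}")
            continue

        parser = LinkParser()
        parser.feed(html)
        links = []
        for href in parser.links:
            # Resolve relative links and drop #fragments so duplicates are detected.
            absolute, _ = urldefrag(urljoin(url, href))
            links.append(absolute)
            same_host = urlparse(absolute).netloc == allowed_host
            if depth < max_depth and same_host and absolute not in seen:
                seen.add(absolute)
                queue.append((absolute, depth + 1))
        results[url] = links

        if queue and delay_seconds > 0:
            time.sleep(delay_seconds)  # throttle the request rate
    return results


if __name__ == "__main__":
    # Offline demo: a fake fetcher serving in-memory pages instead of the network.
    pages = {
        "https://example.com/": '<a href="/a">A</a> <a href="https://other.org/">x</a>',
        "https://example.com/a": '<a href="/b#top">B</a>',
        "https://example.com/b": "",
    }
    for page, found in crawl("https://example.com/", delay_seconds=0, fetch=pages.__getitem__).items():
        print(page, "->", found)
```

Passing a `fetch` function keeps the crawler testable offline, as the demo does with an in-memory dictionary. Before crawling real sites, it is also worth checking each site's robots.txt; the standard library's `urllib.robotparser` module can parse it.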

Who needs crawl?

1. Researchers: Researchers often crawl websites to collect data for academic studies or market research.
2. Web developers: Web developers may use crawling to scrape data for their applications or to analyze competitor websites.
3. SEO specialists: SEO specialists rely on crawling to gather information about search rankings, backlinks, and keywords.
4. E-commerce businesses: E-commerce companies may crawl competitor websites to collect pricing information, product details, or customer reviews.
5. Social media marketers: Social media marketers can use crawling to extract data for engagement tracking, sentiment analysis, or competitor insights.
6. Content aggregators: Content aggregators use crawling to gather articles, blog posts, or news from various sources for their platforms.
7. Data analysts: Data analysts use crawling to gather large datasets for analysis, visualization, or machine learning.
8. Law enforcement: Law enforcement agencies may use crawling to collect evidence, track illegal activities, or monitor online content.

pdfFiller FAQs

Below is a list of the most common customer questions. If you can’t find an answer to your question, please don’t hesitate to reach out to us.

How can I modify crawl without leaving Google Drive?

People who need to keep track of documents and fill out forms quickly can connect pdfFiller to their Google Drive account. This lets them create, edit, and sign documents right from Google Drive. With this add-on, you can turn your crawl into a fillable form that you can manage and sign from any internet-connected device.

Can I create an electronic signature for the crawl in Chrome?

pdfFiller is a feature-rich PDF editor and form builder that also includes a powerful eSignature tool you can add to your Chrome browser. With the extension, you can type or draw your signature, or take a picture of it with your webcam, to create a legally binding eSignature. Choose how you want to sign your crawl, and you'll be done in minutes.

How do I complete crawl on an iOS device?

Download and install the pdfFiller iOS app. Then open the app and log in, or create an account, to use all of its editing tools. To open your crawl, upload it from your device or cloud storage, or type the document's URL into the box on the right. Once you have filled in all the required fields and eSigned the document (if required), you can save it or share it with others.

What is crawl?

Crawling is the process of systematically browsing websites and indexing their content, for example for search engines.

Who is required to file crawl?

Crawl filing requirements vary depending on the jurisdiction and applicable laws and regulations.

How to fill out crawl?

Crawl can be filled out manually or through automated web crawling tools.

What is the purpose of crawl?

The purpose of crawl is to gather information from websites and make it searchable for users.

What information must be reported on crawl?

Information such as website URLs, page titles, meta descriptions, keywords, and content may need to be reported on crawl.
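Fields like those listed above (URL, page title, meta description, keywords) can be pulled out of each fetched page with the standard library's `html.parser`, and the resulting records saved as CSV, as step 8 of the crawl workflow suggests. This is a hedged sketch: `MetadataParser`, `extract_record`, and `records_to_csv` are hypothetical helpers, and only `<title>`, `<meta name="description">`, and `<meta name="keywords">` are read; other metadata, such as Open Graph tags, would need extra handling.

```python
import csv
import io
from html.parser import HTMLParser
from typing import Dict, List, Optional

FIELDS = ["url", "title", "description", "keywords"]


class MetadataParser(HTMLParser):
    """Collects the <title> text and selected <meta name=...> values (assumed field set)."""

    WANTED = {"description", "keywords"}

    def __init__(self) -> None:
        super().__init__()
        self.title: Optional[str] = None
        self.meta: Dict[str, str] = {}
        self._in_title = False
        self._title_parts: List[str] = []

    def handle_starttag(self, tag, attrs):
        values = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta":
            # Meta names are matched case-insensitively ("Description" == "description").
            name = (values.get("name") or "").lower()
            if name in self.WANTED and values.get("content") is not None:
                self.meta[name] = values["content"].strip()

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self.title = "".join(self._title_parts).strip()

    def handle_data(self, data):
        if self._in_title:
            self._title_parts.append(data)


def extract_record(url: str, html: str) -> Dict[str, str]:
    """Builds one row of crawl output; missing fields become empty strings."""
    parser = MetadataParser()
    parser.feed(html)
    parser.close()
    return {
        "url": url,
        "title": parser.title or "",
        "description": parser.meta.get("description", ""),
        "keywords": parser.meta.get("keywords", ""),
    }


def records_to_csv(records: List[Dict[str, str]]) -> str:
    """Serializes records to CSV text (a file or database would work equally well)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()
```

Returning CSV as a string keeps the sketch free of file-system side effects; writing it to disk is a matter of passing an open file to `csv.DictWriter` instead of `io.StringIO`.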

Fill out your crawl online with pdfFiller!

pdfFiller is an end-to-end solution for managing, creating, and editing documents and forms in the cloud. Save time and hassle by preparing your tax forms online.

This form may include fields for payment information. Data entered in these fields is not covered by PCI DSS compliance.