Get the free #robots.txt for https://www.sxu.edu Sitemap: https://www.sxu.edu User ...


World Language Assessment Handbook

September 2017 edTPA×LA×06 edTPA World Language Assessment Handbook. edTPA stems from a twenty-five-year history of developing performance-based assessments of teaching

We are not affiliated with any brand or entity on this form

Get, Create, Make and Sign robots.txt for https://www.sxu.edu sitemap

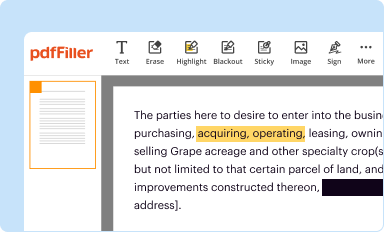

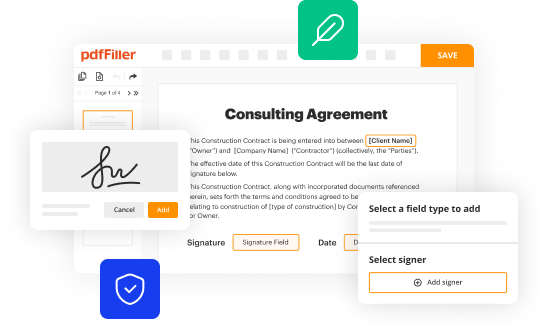

Edit your robots.txt for https://www.sxu.edu sitemap form online

Type text, complete fillable fields, insert images, highlight or blackout data for discretion, add comments, and more.

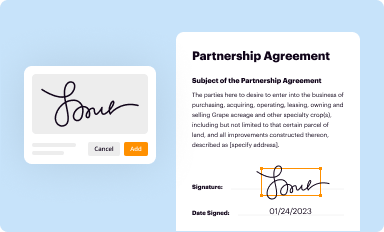

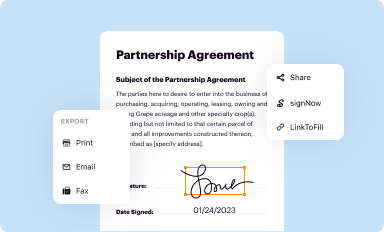

Add your legally-binding signature

Draw or type your signature, upload a signature image, or capture it with your digital camera.

Share your form instantly

Email, fax, or share your robots.txt for https://www.sxu.edu sitemap form via URL. You can also download, print, or export forms to your preferred cloud storage service.

Editing robots.txt for https://www.sxu.edu sitemap online

To use the professional PDF editor, follow these steps:

1. Register the account. Begin by clicking Start Free Trial and create a profile if you are a new user.
2. Upload a file. Select Add New on your Dashboard and upload a file from your device or import it from the cloud, online, or internal mail. Then click Edit.
3. Edit robots.txt for https://www.sxu.edu sitemap. Replace text, add objects, rearrange pages, and more. Then select the Documents tab to combine, divide, lock, or unlock the file.
4. Save your file. Choose it from the list of records. Then move the pointer to the right toolbar and select one of the exporting methods: save it in multiple formats, download it as a PDF, email it, or save it to the cloud.

pdfFiller makes dealing with documents a breeze. Create an account to find out!

Uncompromising security for your PDF editing and eSignature needs

Your private information is safe with pdfFiller. We employ end-to-end encryption, secure cloud storage, and advanced access control to protect your documents and maintain regulatory compliance.

How to fill out robots.txt for https://www.sxu.edu sitemap


To fill out the robots.txt file for https://www.sxu.edu, follow these steps:

1. Open a text editor or any plain-text editing platform.
2. Start with a User-agent line, which names the search engine bot that the following rules apply to; use * to address all bots.
3. Add a separate User-agent group for each bot that needs its own rules.
4. Within each group, use Disallow lines to list the paths or directories that the bot should not crawl. Disallow governs crawling only; a blocked URL can still be indexed if other pages link to it.
5. Add a Sitemap line with the full URL of your sitemap file so that bots can locate it.
6. Save the file as 'robots.txt'; the name must be exactly that, in lowercase.
7. Upload the 'robots.txt' file to the root directory of your website (https://www.sxu.edu), so that it is served at https://www.sxu.edu/robots.txt.
8. Test the file with a robots.txt tester tool.
9. Make any necessary adjustments and re-upload the file if needed.
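The steps above can be sketched in Python. This is only an illustration, not the actual robots.txt of www.sxu.edu: the helper name `build_robots_txt`, the disallowed paths (`/admin/`, `/search`), and the sitemap location (`https://www.sxu.edu/sitemap.xml`) are assumptions made for the example. The sketch builds the file text, saves it under the required name, and runs a local check with the standard library's `urllib.robotparser` before any upload.

```python
from pathlib import Path
from urllib.robotparser import RobotFileParser


def build_robots_txt(groups, sitemap_url):
    """Render robots.txt text from {user_agent: [disallowed paths]}."""
    lines = []
    for user_agent, disallowed in groups.items():
        lines.append(f"User-agent: {user_agent}")
        for path in disallowed:
            lines.append(f"Disallow: {path}")
        lines.append("")  # blank line separates groups
    lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"


# Hypothetical rules; the real site's paths are not given in this document.
robots_text = build_robots_txt(
    {"*": ["/admin/", "/search"]},
    "https://www.sxu.edu/sitemap.xml",
)

# The file name must be exactly "robots.txt".
Path("robots.txt").write_text(robots_text, encoding="utf-8")

# Local check before uploading: parse the text and query a few URLs.
parser = RobotFileParser()
parser.parse(robots_text.splitlines())
admin_ok = parser.can_fetch("*", "https://www.sxu.edu/admin/login")
academics_ok = parser.can_fetch("*", "https://www.sxu.edu/academics/")
print(admin_ok, academics_ok)
```

A local check like this does not replace a robots.txt tester tool, since individual search engines may interpret some rules (such as wildcards) differently from the standard library parser.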

Who needs robots.txt for https://www.sxu.edu sitemap?

Any website owner or administrator who wants to control how search engine bots crawl their website needs a robots.txt file. It lets them tell bots which pages or directories may be crawled and which should be skipped. Used well, a robots.txt file supports a website's search engine optimization (SEO) by keeping bots away from low-value or private areas, although compliance is voluntary on the bot's part, so it does not secure sensitive content. It also gives bots the location of the website's sitemap, which helps them index the site's content efficiently and comprehensively.
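To illustrate how a well-behaved bot might consume these instructions, the sketch below parses a hypothetical robots.txt with `urllib.robotparser` and asks which URLs two different bots may crawl. The file content, the bot names `ExampleBot` and `OtherBot`, the helper `crawl_plan`, and the URLs are all assumptions made for the example; `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file content; not the real robots.txt of www.sxu.edu.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /private/

Sitemap: https://www.sxu.edu/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def crawl_plan(user_agent, urls):
    """Return the subset of urls that user_agent may crawl."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


urls = [
    "https://www.sxu.edu/",
    "https://www.sxu.edu/private/report.html",
]

print(crawl_plan("ExampleBot", urls))  # ExampleBot has its own group
print(crawl_plan("OtherBot", urls))    # OtherBot falls back to the "*" group
print(parser.site_maps())              # sitemap URLs listed in the file
```

Here `ExampleBot` is excluded from the whole site, while any other bot is only kept out of `/private/`.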


For pdfFiller’s FAQs

Below is a list of the most common customer questions. If you can’t find an answer to your question, please don’t hesitate to reach out to us.

How do I complete robots.txt for https://www.sxu.edu sitemap online?

pdfFiller has made filling out and eSigning robots.txt for https://www.sxu.edu sitemap easy. The solution is equipped with a set of features that enable you to edit and rearrange PDF content, add fillable fields, and eSign the document. Start a free trial to explore all the capabilities of pdfFiller, the ultimate document editing solution.

How do I fill out robots.txt for https://www.sxu.edu sitemap using my mobile device?

Use the pdfFiller mobile app to fill out and sign robots.txt for https://www.sxu.edu sitemap. Visit our website (https://edit-pdf-ios-android.pdffiller.com/) to learn more about our mobile applications, their features, and how to get started.

How do I complete robots.txt for https://www.sxu.edu sitemap on an Android device?

Use the pdfFiller mobile app to complete your robots.txt for https://www.sxu.edu sitemap and other documents on your Android device. The app provides all essential document management features, such as editing content, eSigning, annotating, and sharing files. You will have access to your documents at any time, as long as there is an internet connection.

What is robots.txt for https://www.sxu.edu sitemap?

The robots.txt file for https://www.sxu.edu is a plain-text file that tells search engine crawlers which of the website's pages they may or may not crawl and where to find its sitemap.

Who is required to file robots.txt for https://www.sxu.edu sitemap?

Website owners or administrators are responsible for creating and maintaining the robots.txt file for their website. The file is optional; when it is absent, crawlers treat the whole site as open to crawling.

How to fill out robots.txt for https://www.sxu.edu sitemap?

To fill out the robots.txt for https://www.sxu.edu, write one group of rules per user-agent, each listing the pages or directories that the crawler is disallowed from crawling, and add a Sitemap line pointing to the sitemap.

What is the purpose of robots.txt for https://www.sxu.edu sitemap?

The purpose of the robots.txt file for https://www.sxu.edu is to control which parts of the website search engine crawlers access, which in turn influences what content can appear in search results.

What information must be reported on robots.txt for https://www.sxu.edu sitemap?

The robots.txt file for https://www.sxu.edu must group its rules under User-agent lines, with 'Disallow' and 'Allow' rules naming the pages or directories that each crawler may or may not crawl. A Sitemap line giving the sitemap's URL is optional.
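These structural requirements can be checked mechanically. The following is a minimal sketch of such a check, not an official validator: the function name `lint_robots_txt` and the sample input are hypothetical, and the check only recognizes the four fields named on this page (User-agent, Allow, Disallow, Sitemap), so other fields that some crawlers accept would be reported as unrecognized.

```python
from urllib.parse import urlparse

KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}


def lint_robots_txt(text):
    """Return a list of (line_number, message) problems found in text."""
    problems = []
    in_group = False  # True once a User-agent line has opened a group
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        field, sep, value = line.partition(":")
        field, value = field.strip().lower(), value.strip()
        if not sep or field not in KNOWN_FIELDS:
            problems.append((number, f"unrecognized line: {raw.strip()!r}"))
        elif field == "user-agent":
            in_group = True
        elif field in ("allow", "disallow"):
            if not in_group:
                problems.append((number, f"{field} before any user-agent"))
            elif value and not value.startswith("/"):
                problems.append((number, "path should start with '/'"))
        elif field == "sitemap" and not urlparse(value).scheme:
            problems.append((number, "sitemap should be an absolute URL"))
    return problems


# Hypothetical input with three deliberate mistakes.
sample = """\
Disallow: /tmp/
User-agent: *
Disallow: private/
Sitemap: /sitemap.xml
"""
for problem in lint_robots_txt(sample):
    print(problem)
```

An empty Disallow value is accepted on purpose, since it conventionally means that nothing is disallowed for that group.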

Fill out your robots.txt for https://www.sxu.edu sitemap online with pdfFiller!

pdfFiller is an end-to-end solution for managing, creating, and editing documents and forms in the cloud. Save time and hassle by preparing your tax forms online.



If you believe that this page should be taken down, please follow our DMCA takedown process.

This form may include fields for payment information. Data entered in these fields is not covered by PCI DSS compliance.