Get the free Web crawler - Wikipedia

Show details

The City of San DiegoReport to the Historical Resources Board DATE ISSUED:August 11, 2022REPORT NO. HRB22028HEARING DATE:August 25, 2022SUBJECT:ITEM #02 John and Zelda Schelling/ William Weinberger

We are not affiliated with any brand or entity on this form

Get, Create, Make and Sign web crawler - wikipedia

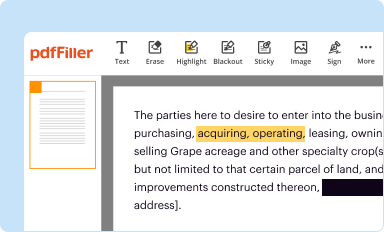

Edit your web crawler - wikipedia form online

Type text, complete fillable fields, insert images, highlight or blackout data for discretion, add comments, and more.

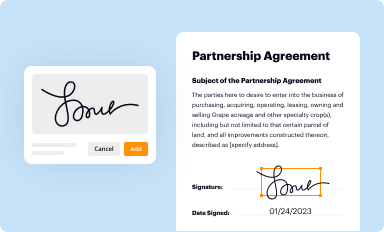

Add your legally-binding signature

Draw or type your signature, upload a signature image, or capture it with your digital camera.

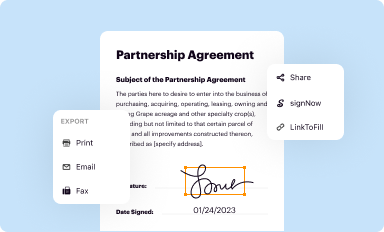

Share your form instantly

Email, fax, or share your web crawler - wikipedia form via URL. You can also download, print, or export forms to your preferred cloud storage service.

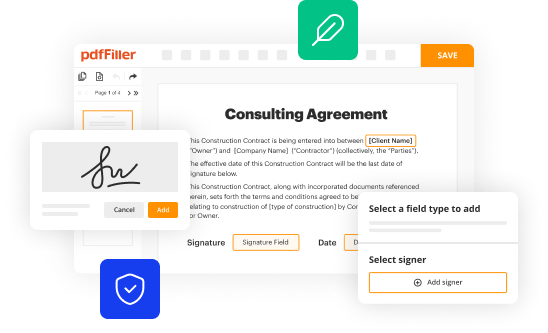

Editing web crawler - wikipedia online

Here are the steps you need to follow to get started with our professional PDF editor:

1

Log in. Click Start Free Trial and create a profile if necessary.

2

Upload a document. Select Add New on your Dashboard and transfer a file into the system in one of the following ways: by uploading it from your device or importing from the cloud, web, or internal mail. Then, click Start editing.

3

Edit web crawler - wikipedia. Rearrange and rotate pages, add new and changed texts, add new objects, and use other useful tools. When you're done, click Done. You can use the Documents tab to merge, split, lock, or unlock your files.

4

Save your file. Select it from your list of records. Then, move your cursor to the right toolbar and choose one of the exporting options. You can save it in multiple formats, download it as a PDF, send it by email, or store it in the cloud, among other things.

pdfFiller makes working with documents easier than you could ever imagine. Register for an account and see for yourself!

Uncompromising security for your PDF editing and eSignature needs

Your private information is safe with pdfFiller. We employ end-to-end encryption, secure cloud storage, and advanced access control to protect your documents and maintain regulatory compliance.

How to fill out web crawler - wikipedia

How to fill out web crawler - wikipedia

01

Start by identifying the information you want to extract from Wikipedia using the web crawler. This could be specific data from a particular page or a collection of information from multiple pages.

02

Choose a programming language or a web scraping tool to build your web crawler. Python is commonly used for web scraping and has libraries like Beautiful Soup and Scrapy that can make the process easier.

03

Create a code or script that sends requests to Wikipedia's server and retrieves the web pages you want to crawl. Make sure to set up appropriate headers and follow ethical scraping practices to avoid any legal issues.

04

Use HTML parsing techniques to extract the desired data from the retrieved web pages. This could involve searching for specific HTML elements, using regular expressions, or employing advanced techniques like XPath or CSS selectors.

05

Store the extracted data in a structured format like JSON, CSV, or a database. This will make it easier to analyze and work with the collected information.

06

Implement error handling and robustness in your web crawler code. This could involve handling timeouts, connection errors, or handling CAPTCHA challenges.

07

Continuously test and validate your web crawler to ensure it is functioning correctly. Monitor any changes in Wikipedia's layout or structure that may affect the crawling process and make necessary adjustments to your code.

08

Consider adding additional features to your web crawler, such as handling pagination, limiting the crawling speed, or implementing parallel processing to enhance performance.

09

Documentation and maintenance are crucial for the long-term viability of your web crawler. Keep track of any modifications, updates, or improvements made to the codebase and document the purpose and usage of the crawler.

10

Respect website owner's terms and conditions while using a web crawler. Avoid overloading the server with excessive requests and consider using techniques like caching or request throttling to minimize the impact on the website's performance.

Who needs web crawler - wikipedia?

01

Web crawlers - Wikipedia can be useful for a variety of individuals and businesses:

02

- Researchers or data analysts who require large amounts of data from Wikipedia for analysis or academic purposes.

03

- Content aggregators or news portals that need to gather information from Wikipedia to supplement their articles and provide additional context.

04

- SEO professionals who utilize web crawlers to analyze Wikipedia's content and structure for search engine optimization purposes.

05

- Developers or programmers who want to extract specific information from Wikipedia to build applications, create databases, or perform text mining tasks.

06

- Entrepreneurs or businesses looking to gather market intelligence, monitor competition, or track changes and updates on Wikipedia related to their industry.

07

Overall, anyone who needs a vast amount of structured or unstructured data from Wikipedia can benefit from using a web crawler.

Fill

form

: Try Risk Free

For pdfFiller’s FAQs

Below is a list of the most common customer questions. If you can’t find an answer to your question, please don’t hesitate to reach out to us.

Where do I find web crawler - wikipedia?

It's simple with pdfFiller, a full online document management tool. Access our huge online form collection (over 25M fillable forms are accessible) and find the web crawler - wikipedia in seconds. Open it immediately and begin modifying it with powerful editing options.

Can I create an electronic signature for signing my web crawler - wikipedia in Gmail?

It's easy to make your eSignature with pdfFiller, and then you can sign your web crawler - wikipedia right from your Gmail inbox with the help of pdfFiller's add-on for Gmail. This is a very important point: You must sign up for an account so that you can save your signatures and signed documents.

How do I complete web crawler - wikipedia on an Android device?

Complete your web crawler - wikipedia and other papers on your Android device by using the pdfFiller mobile app. The program includes all of the necessary document management tools, such as editing content, eSigning, annotating, sharing files, and so on. You will be able to view your papers at any time as long as you have an internet connection.

What is web crawler - wikipedia?

A web crawler, sometimes called a spider or spiderbot and often shortened to crawler, is an internet bot that systematically browses the World Wide Web, typically for the purpose of web indexing.

Who is required to file web crawler - wikipedia?

There is no specific requirement for individuals or entities to file a web crawler - wikipedia as it is a program used to index information on websites.

How to fill out web crawler - wikipedia?

Web crawlers are automated programs that follow links to discover and index web content, there is no need to fill out any forms to use a web crawler - wikipedia.

What is the purpose of web crawler - wikipedia?

The purpose of a web crawler - wikipedia is to index content on websites and make it searchable for users.

What information must be reported on web crawler - wikipedia?

No specific information needs to be reported on a web crawler - wikipedia as it is a tool used for indexing web content.

Fill out your web crawler - wikipedia online with pdfFiller!

pdfFiller is an end-to-end solution for managing, creating, and editing documents and forms in the cloud. Save time and hassle by preparing your tax forms online.

Web Crawler - Wikipedia is not the form you're looking for?Search for another form here.

Relevant keywords

Related Forms

If you believe that this page should be taken down, please follow our DMCA take down process

here

.

This form may include fields for payment information. Data entered in these fields is not covered by PCI DSS compliance.