Design and Evolution of the Apache Hadoop File System (HDFS) - SNIA


This document details the architecture, design goals, and operational principles of the Hadoop Distributed File System (HDFS), addressing its scalability, fault tolerance, and performance capabilities.

We are not affiliated with any brand or entity on this form

Get, Create, Make and Sign design and evolution of

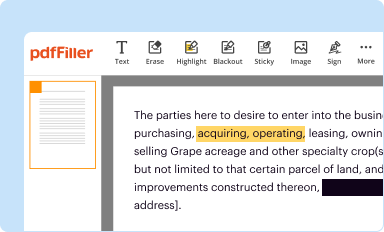

Edit your design and evolution of form online

Type text, complete fillable fields, insert images, highlight or blackout data for discretion, add comments, and more.

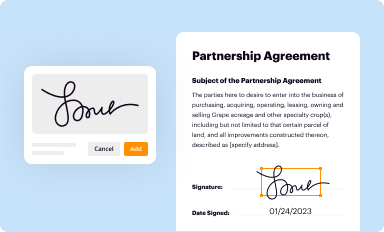

Add your legally-binding signature

Draw or type your signature, upload a signature image, or capture it with your digital camera.

Share your form instantly

Email, fax, or share your design and evolution of form via URL. You can also download, print, or export forms to your preferred cloud storage service.

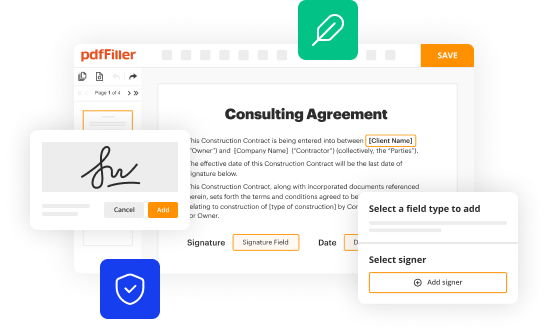

Editing design and evolution of online

Follow the guidelines below to take advantage of the professional PDF editor:

1

Register the account. Begin by clicking Start Free Trial and create a profile if you are a new user.

2

Prepare a file. Use the Add New button to start a new project. Then, using your device, upload your file to the system by importing it from internal mail, the cloud, or adding its URL.

3

Edit design and evolution of. Rearrange and rotate pages, insert new and alter existing texts, add new objects, and take advantage of other helpful tools. Click Done to apply changes and return to your Dashboard. Go to the Documents tab to access merging, splitting, locking, or unlocking functions.

4

Save your file. Select it from your list of records. Then, move your cursor to the right toolbar and choose one of the exporting options. You can save it in multiple formats, download it as a PDF, send it by email, or store it in the cloud, among other things.

With pdfFiller, it's always easy to work with documents. Try it!

Uncompromising security for your PDF editing and eSignature needs

Your private information is safe with pdfFiller. We employ end-to-end encryption, secure cloud storage, and advanced access control to protect your documents and maintain regulatory compliance.

How to fill out design and evolution of

How to fill out Design and Evolution of the Apache Hadoop File System (HDFS)

1. Start with a clear understanding of HDFS architecture.
2. Outline the key components of HDFS, such as NameNode, DataNode, and Secondary NameNode.
3. Document the design choices made during the development of HDFS.
4. Highlight the challenges faced in the evolution of HDFS and how they were addressed.
5. Include diagrams to illustrate HDFS operations and data flow.
6. Discuss performance improvements and scalability features added over time.
7. Conclude with future directions for HDFS development.

Who needs Design and Evolution of the Apache Hadoop File System (HDFS)?

1. Software engineers and developers working with big data technologies.
2. Data scientists looking to leverage Hadoop for large-scale data processing.
3. System architects involved in designing distributed storage solutions.
4. Researchers studying file systems and distributed computing.
5. Organizations planning to implement or migrate to Hadoop-based systems.

People Also Ask about

What is the evolution of Hadoop?

Developed by Doug Cutting and Mike Cafarella, Hadoop was inspired by Google's papers on the Google File System and MapReduce. Since its inception in 2006, Hadoop has undergone significant evolution, becoming a powerful tool for processing and analyzing massive amounts of data.

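What is HDFS, and how is it designed?

The Hadoop Distributed File System (HDFS) is a file system that runs on commodity hardware and manages large data sets. It is Hadoop's primary storage layer and can scale a single Apache Hadoop cluster to hundreds or even thousands of nodes. Its core design splits each file into large blocks (128 MB by default in Hadoop 2 and later). It stores several copies of each block (three by default) on different machines, so the failure of a single machine does not lose data.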
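To make the block-and-replica arithmetic concrete, here is a minimal sketch. It assumes the defaults of a 128 MiB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`); real clusters and individual files may override both. The function `block_layout` is hypothetical, written only for illustration, and is not part of any Hadoop API.

```python
# Assumed defaults (dfs.blocksize = 128 MiB, dfs.replication = 3); real clusters
# and individual files may override both.
DEFAULT_BLOCK_SIZE = 128 * 1024 * 1024
DEFAULT_REPLICATION = 3


def block_layout(file_size: int,
                 block_size: int = DEFAULT_BLOCK_SIZE,
                 replication: int = DEFAULT_REPLICATION) -> tuple[int, int, int]:
    """Return (number of blocks, bytes in the last block, raw bytes stored cluster-wide)."""
    if file_size < 0 or block_size <= 0 or replication <= 0:
        raise ValueError("file_size must be >= 0; block_size and replication must be > 0")
    # Integer ceiling division: a partial final block still counts as a block.
    num_blocks = -(-file_size // block_size)
    # The last block holds only the leftover bytes; it is not padded to block_size.
    last_block = file_size - (num_blocks - 1) * block_size if num_blocks else 0
    # Every byte is stored once per replica.
    raw_bytes = file_size * replication
    return num_blocks, last_block, raw_bytes


if __name__ == "__main__":
    MiB = 1024 * 1024
    print(block_layout(300 * MiB))  # (3, 46137344, 943718400): last block 44 MiB, 900 MiB raw
```

A 300 MiB file therefore occupies three blocks, and with three replicas it consumes about 900 MiB of raw disk across the cluster.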

What is the data architecture of Hadoop?

HDFS is a distributed file system designed to store large datasets across multiple nodes in a cluster. It follows a master-slave architecture consisting of one NameNode and multiple DataNodes. The NameNode is the master node. It manages the file system namespace (the directory tree and the mapping of files to blocks) and regulates client access to files. The DataNodes store the blocks themselves and serve read and write requests from clients.
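The division of labor between the NameNode and the DataNodes can be sketched as a toy, in-memory model. Everything here is hypothetical: `ToyNameNode` and its methods are invented for illustration and do not mirror the real HDFS classes or RPCs. The model follows one documented HDFS behavior: the NameNode does not persist block locations but learns them from DataNode block reports.

```python
from dataclasses import dataclass, field


@dataclass
class ToyNameNode:
    """Hypothetical model of NameNode metadata (not the real HDFS API)."""
    # Namespace: file path -> ordered list of block IDs.
    files: dict[str, list[int]] = field(default_factory=dict)
    # Block map: block ID -> DataNodes that reported holding a replica.
    block_locations: dict[int, set[str]] = field(default_factory=dict)
    next_block_id: int = 1

    def create(self, path: str, num_blocks: int) -> list[int]:
        """Register a new file and allocate IDs for its blocks."""
        if path in self.files:
            raise FileExistsError(path)
        ids = list(range(self.next_block_id, self.next_block_id + num_blocks))
        self.next_block_id += num_blocks
        self.files[path] = ids
        for block_id in ids:
            self.block_locations[block_id] = set()
        return ids

    def block_received(self, block_id: int, datanode: str) -> None:
        """Record a DataNode's report that it stores a replica of block_id."""
        self.block_locations[block_id].add(datanode)

    def get_block_locations(self, path: str) -> list[tuple[int, list[str]]]:
        """What a client asks for before reading: blocks in order, with replica hosts."""
        return [(b, sorted(self.block_locations[b])) for b in self.files[path]]
```

A client first asks the NameNode where the blocks are, then reads the data directly from the DataNodes. File contents never pass through the NameNode.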

What is Apache Hadoop HDFS?

The Hadoop Distributed File System (HDFS) is a distributed file system that runs on standard or low-end hardware. It is optimized for high-throughput, streaming access to large files rather than low-latency access to small ones. It also provides high fault tolerance and native support for large datasets.
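Large blocks keep reads long and sequential: a client turns a byte range of a file into a few whole-block or partial-block reads. The following is a minimal sketch of that mapping. The helper `blocks_for_range` is hypothetical, and the sketch assumes every block except the last has the same fixed size.

```python
def blocks_for_range(offset: int, length: int, block_size: int) -> list[tuple[int, int, int]]:
    """Map a byte range of a file to (block index, offset within block, bytes to read)."""
    if offset < 0 or length < 0 or block_size <= 0:
        raise ValueError("offset and length must be >= 0; block_size must be > 0")
    pieces = []
    pos, end = offset, offset + length
    while pos < end:
        index = pos // block_size          # which block this byte falls in
        within = pos % block_size          # position inside that block
        n = min(block_size - within, end - pos)  # read to block end or range end
        pieces.append((index, within, n))
        pos += n
    return pieces


if __name__ == "__main__":
    print(blocks_for_range(100, 250, 128))  # [(0, 100, 28), (1, 0, 128), (2, 0, 94)]
```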

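What is the difference between Hadoop and HDFS?

Hadoop is the overall framework for distributed storage and processing. Its core modules include Hadoop Common, HDFS, YARN, and MapReduce. HDFS is the storage layer within Hadoop. It follows a master/slave architecture with the NameNode as master and the DataNodes as slaves, and each cluster has one master node and many slave nodes. Internally, each file is divided into one or more blocks. Each block is replicated onto several DataNodes, and the replication factor sets the number of copies.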
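Which DataNodes receive a block's copies is decided by a placement policy. The HDFS Architecture guide describes the default for a replication factor of three. The first replica goes on the writer's own node if the writer is a DataNode. The second goes on a node in a different rack. The third goes on a different node in that same remote rack. The sketch below follows those rules under simplifying assumptions: it ignores free space and load, and it picks a random node when the writer is not a DataNode. The function `place_replicas` and its `topology` argument (node name to rack name) are hypothetical.

```python
import random
from typing import Optional


def place_replicas(topology: dict[str, str], writer: Optional[str],
                   rng: random.Random) -> list[str]:
    """Choose 3 DataNodes for a block following a simplified rack-aware policy."""
    nodes = sorted(topology)
    # 1st replica: the writer's node if it is a DataNode, otherwise a random node
    # (simplification: the documented policy prefers the writer's rack).
    first = writer if writer in topology else rng.choice(nodes)
    # 2nd replica: any node on a different rack, to survive a whole-rack failure.
    remote = [n for n in nodes if topology[n] != topology[first]]
    if not remote:
        raise ValueError("need at least two racks")
    second = rng.choice(remote)
    # 3rd replica: another node on the second replica's rack, limiting cross-rack traffic.
    same_rack = [n for n in nodes if topology[n] == topology[second] and n != second]
    if not same_rack:
        raise ValueError("the remote rack needs at least two nodes")
    third = rng.choice(same_rack)
    return [first, second, third]


if __name__ == "__main__":
    racks = {"dn1": "/r1", "dn2": "/r1", "dn3": "/r2", "dn4": "/r2"}
    print(place_replicas(racks, "dn1", random.Random(42)))
```

Spreading copies over two racks rather than three is a trade-off. The block still survives the loss of a whole rack, while only one copy has to cross the network between racks.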

What is the architecture of HDFS?

The Hadoop Distributed File System is implemented using a master-worker architecture, in which each cluster has one master node and many worker nodes. Files are internally divided into blocks, and each block is stored on several worker machines; the replication factor determines how many. If a worker fails, the master re-creates the lost block copies on other workers.
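HDFS detects worker failures through heartbeats. DataNodes report to the NameNode periodically, and a node that stays silent past a timeout is treated as dead. Blocks whose count of live replicas drops below the replication factor are then scheduled for re-replication. Here is a minimal sketch of that bookkeeping. The functions `live_datanodes` and `under_replicated` and the timeout value are hypothetical and chosen for illustration, not taken from HDFS configuration.

```python
def live_datanodes(last_heartbeat: dict[str, float], now: float, timeout: float) -> set[str]:
    """DataNodes whose most recent heartbeat is no older than `timeout` seconds."""
    return {dn for dn, seen in last_heartbeat.items() if now - seen <= timeout}


def under_replicated(block_locations: dict[int, set[str]],
                     live: set[str],
                     replication: int = 3) -> dict[int, int]:
    """Map each block with too few live replicas to how many new copies it needs."""
    needed = {}
    for block_id, holders in block_locations.items():
        live_copies = len(holders & live)  # replicas on dead nodes no longer count
        if live_copies < replication:
            needed[block_id] = replication - live_copies
    return needed


if __name__ == "__main__":
    heartbeats = {"dn1": 100.0, "dn2": 100.0, "dn3": 10.0}
    live = live_datanodes(heartbeats, now=110.0, timeout=60.0)  # dn3 is considered dead
    blocks = {1: {"dn1", "dn2", "dn3"}, 2: {"dn1", "dn2"}, 3: {"dn3"}}
    print(under_replicated(blocks, live))  # {1: 1, 2: 1, 3: 3}
```

Block 3 in the example has no live replica left. No surviving copy exists to re-replicate from, so such a block is lost rather than repaired. This is why copies are spread across several nodes in the first place.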

What is Design and Evolution of the Apache Hadoop File System (HDFS)?

The Design and Evolution of the Apache Hadoop File System (HDFS) refers to the architectural principles and development over time that have shaped HDFS into a reliable, scalable, and distributed file system. It is designed to store large files across multiple machines, ensuring data replication and fault tolerance while supporting large-scale data processing.

Who is required to file Design and Evolution of the Apache Hadoop File System (HDFS)?

There is no formal filing requirement for the Design and Evolution of the Apache Hadoop File System (HDFS). However, contributors to the project, including software engineers, data architects, and organizations using HDFS, may document their insights or changes to the system as part of their contribution to the Apache Hadoop community.

How to fill out Design and Evolution of the Apache Hadoop File System (HDFS)?

To fill out the Design and Evolution of the Apache Hadoop File System (HDFS), one should first provide a detailed description of the design principles, technical specifications, and evolution of the file system. This includes the historical context, major updates, and how it meets the requirements of large-scale data storage and processing.

What is the purpose of Design and Evolution of the Apache Hadoop File System (HDFS)?

The purpose of documenting the Design and Evolution of the Apache Hadoop File System (HDFS) is to provide transparency in its architecture, facilitate understanding among developers and users, and serve as a reference for future enhancements and maintenance. It helps in sharing knowledge around the operational and design decisions made over time.

What information must be reported on Design and Evolution of the Apache Hadoop File System (HDFS)?

Information that must be reported on the Design and Evolution of the Apache Hadoop File System (HDFS) includes its architectural design, key components, data replication strategies, performance optimizations, scalability features, and significant changes made during its development. Additionally, it may contain insights into user feedback and adaptation to emerging technology trends.
