Get the free Neural Network Compression in the Context of Federated Learning and Edge Devices

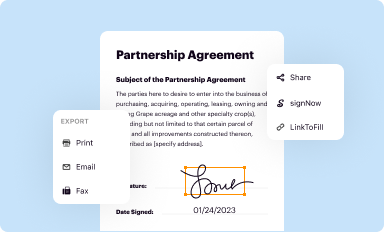

Get, Create, Make and Sign neural network compression in

How to edit neural network compression in online

Uncompromising security for your PDF editing and eSignature needs

How to fill out neural network compression in

How to fill out neural network compression in

Who needs neural network compression in?

Neural Network Compression in Form - How-to Guide Long-Read

Understanding neural network compression

Neural network compression refers to techniques used to reduce the size and complexity of neural networks while maintaining their predictive performance. This facet of machine learning is crucial for optimizing models for deployment on resource-constrained devices without significant loss in accuracy.

The significance of neural network compression arises from the increasing use of deep learning models in applications where computational resources are limited, such as mobile devices, embedded systems, and edge computing. This drive for efficiency is not just about speed; it's also about enabling machine learning implementations across diverse environments.

The need for compression in neural networks

As neural networks grow in size and complexity, deploying them becomes harder. Large networks often require substantial memory and compute, which can be prohibitive for real-time applications. For instance, deploying a heavy neural network in a mobile application can increase energy consumption, lengthen loading times, and, on devices with limited processing power or memory, prevent the model from running at all.

The benefits of compression are manifold. Compressed models are smaller, which directly reduces storage requirements and transmission costs. They also typically run faster at inference time, although the speed-up depends on whether the target hardware and runtime can exploit the compressed format (for example, sparse or low-precision arithmetic). Faster inference means quicker responses in applications such as image recognition, speech processing, and real-time data analysis, and lower latency improves the user experience and eases integration into existing workflows.
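As a rough illustration of the storage savings, the size of the raw weights is simply the parameter count times the bits per parameter. The 25-million-parameter model below is hypothetical, and the calculation ignores file-format overhead and per-tensor metadata.

```python
def weight_storage_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store the raw weights (ignores file-format overhead and metadata)."""
    return num_params * bits_per_param // 8


# Hypothetical model with 25 million parameters.
params = 25_000_000
fp32_bytes = weight_storage_bytes(params, 32)  # 32-bit floating point
int8_bytes = weight_storage_bytes(params, 8)   # 8-bit integers after quantization

print(f"fp32: {fp32_bytes / 1e6:.0f} MB")
print(f"int8: {int8_bytes / 1e6:.0f} MB")
print(f"reduction: {fp32_bytes // int8_bytes}x")
```

Going from 32 to 8 bits per weight shrinks the stored weights by a factor of four, regardless of the parameter count.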

Key techniques for implementing neural network compression

Pruning techniques

Pruning is a powerful compression technique that removes less important weights or entire neurons, reducing the model's complexity. The two most common variants are weight pruning and neuron pruning. Weight pruning zeroes out individual weights, typically those with the smallest magnitudes, which leaves the layer shapes unchanged but makes the weight matrices sparse. Neuron pruning removes entire neurons whose removal has little impact on the network's overall performance, which actually shrinks the layers.
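The difference between the two variants can be seen on a single layer's weight matrix. This pure-Python sketch uses weight magnitude as the weight-pruning criterion and the L2 norm of each neuron's incoming weights as the neuron-pruning criterion; both are common choices but are assumptions here, and the function names and the 3x3 matrix are hypothetical.

```python
import math


def magnitude_prune(weights, sparsity):
    """Weight pruning: zero out the fraction `sparsity` of weights with the smallest |w|."""
    entries = sorted(
        (abs(w), i, j) for i, row in enumerate(weights) for j, w in enumerate(row)
    )
    k = int(sparsity * len(entries))  # number of weights to remove
    pruned = [row[:] for row in weights]  # copy; the layer shape is unchanged
    for _, i, j in entries[:k]:
        pruned[i][j] = 0.0
    return pruned


def neuron_prune(weights, num_to_remove):
    """Neuron pruning: drop whole output neurons (rows) with the smallest L2 norm."""
    norms = sorted((math.sqrt(sum(w * w for w in row)), i) for i, row in enumerate(weights))
    dropped = {i for _, i in norms[:num_to_remove]}
    kept = [i for i in range(len(weights)) if i not in dropped]
    # The layer shrinks; the next layer's matching input columns must be removed too.
    return kept, [weights[i] for i in kept]


# Hypothetical 3x3 layer: row i holds the incoming weights of output neuron i.
W = [
    [0.9, -0.05, 0.4],
    [0.01, 0.02, -0.03],
    [-0.7, 0.3, 0.0],
]
print(magnitude_prune(W, sparsity=0.5))
print(neuron_prune(W, num_to_remove=1))
```

Weight pruning here zeroes the four smallest entries but keeps the 3x3 shape, while neuron pruning removes the weak middle row outright.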

To prune a neural network, follow these steps: first, train the model normally until it converges; then identify the weights or neurons to prune using an importance criterion such as weight magnitude; finally, fine-tune the remaining weights to recover lost accuracy. Done carefully, pruning can substantially reduce model size while retaining most of the original accuracy, which makes it a popular choice among practitioners.
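The three steps can be sketched end to end on a hypothetical toy problem: a linear regression model in pure Python, trained by gradient descent, pruned by weight magnitude, then fine-tuned with the pruned weights held at zero. The task, learning rate, and helper names are assumptions for illustration; a real network would use a framework's training loop and often prunes in several rounds.

```python
import random


def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def mse(w, X, y):
    return sum((predict(w, x) - t) ** 2 for x, t in zip(X, y)) / len(X)


def train(w, X, y, mask, lr=0.1, epochs=200):
    """Full-batch gradient descent on MSE; weights where mask == 0 are held at zero."""
    w = [wi * m for wi, m in zip(w, mask)]
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, t in zip(X, y):
            err = predict(w, x) - t
            for j, xj in enumerate(x):
                grad[j] += 2 * err * xj / len(X)
        w = [(wi - lr * g) * m for wi, g, m in zip(w, grad, mask)]
    return w


def magnitude_mask(w, sparsity):
    """Mask out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(sparsity * len(w))
    smallest = sorted(range(len(w)), key=lambda j: abs(w[j]))[:k]
    return [0.0 if j in smallest else 1.0 for j in range(len(w))]


# Hypothetical toy task: only features 0 and 1 matter; features 2 and 3 are noise.
rng = random.Random(0)
X = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(200)]
y = [3 * x[0] - 2 * x[1] for x in X]

dense = train([0.0] * 4, X, y, mask=[1.0] * 4)     # 1. train normally
mask = magnitude_mask(dense, sparsity=0.5)         # 2. choose weights to prune
pruned = train(dense, X, y, mask=mask, epochs=50)  # 3. fine-tune the survivors
print("mask:", mask)
print("weights:", [round(v, 3) for v in pruned], "MSE:", mse(pruned, X, y))
```

Because the irrelevant weights end up near zero after training, magnitude pruning removes exactly those, and fine-tuning keeps the error close to zero with half the weights gone.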

Quantization

Quantization reduces the number of bits used to represent the weights and activations of a neural network. By converting 32-bit floating-point values into lower-precision formats, such as 8-bit integers (which cuts weight storage by a factor of four), quantization can significantly decrease model size and improve computational efficiency. There are two primary forms: post-training quantization, applied after the model has been fully trained, and quantization-aware training, in which the model learns to accommodate lower precision during training.
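A minimal post-training sketch, assuming per-tensor affine (asymmetric) quantization to 8 bits: each float is mapped to an integer code through a scale and a zero point, and mapped back when needed. Real toolkits add calibration data, per-channel scales, and integer kernels; the function names and weight values here are hypothetical.

```python
def affine_params(values, num_bits=8):
    """Scale and zero point mapping [min, max] of `values` onto unsigned ints [0, 2^bits - 1]."""
    qmax = 2 ** num_bits - 1
    lo = min(min(values), 0.0)  # widen the range to include 0 so it is exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / qmax if hi > lo else 1.0  # guard against an all-zero tensor
    zero_point = round(-lo / scale)
    return scale, zero_point


def quantize(values, scale, zero_point, num_bits=8):
    qmax = 2 ** num_bits - 1
    return [min(max(round(v / scale) + zero_point, 0), qmax) for v in values]


def dequantize(q, scale, zero_point):
    return [(qi - zero_point) * scale for qi in q]


# Hypothetical float32 weights of one layer.
weights = [-0.62, -0.1, 0.0, 0.25, 0.97]
scale, zero_point = affine_params(weights)
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)

print("8-bit codes:", q)
print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
```

Each weight now needs 1 byte instead of 4 (plus one scale and zero point per tensor), and for values inside the range the round-trip error is at most half a quantization step.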

To implement quantization, start by choosing the approach that best fits your needs. Post-training quantization is applied to an already trained model and requires no retraining, so it is the quicker option; quantization-aware training modifies the training pipeline to simulate low-precision arithmetic during training, which usually preserves more accuracy at low bit widths. Either way, a well-chosen method yields a smaller model whose performance stays close to the original.
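Quantization-aware training is commonly implemented with fake quantization: the forward pass rounds the weights to the low-precision grid, while gradients flow to full-precision copies through a straight-through estimator. The sketch below shows only these mechanics on a hypothetical toy problem, using 4 bits and a hand-picked fixed scale (assumptions; real toolkits calibrate or learn scales and also quantize activations).

```python
def fake_quant(w, scale, num_bits=4):
    """Quantize to a symmetric signed integer grid and immediately map back to float."""
    qmax = 2 ** (num_bits - 1) - 1
    q = max(-qmax, min(qmax, round(w / scale)))
    return q * scale


def qat_train(X, y, scale, lr=0.1, epochs=300, num_bits=4):
    """Train a linear model whose forward pass always uses fake-quantized weights."""
    w = [0.0] * len(X[0])  # full-precision "shadow" weights that receive the updates
    for _ in range(epochs):
        wq = [fake_quant(wi, scale, num_bits) for wi in w]
        grad = [0.0] * len(w)
        for x, t in zip(X, y):
            err = sum(a * b for a, b in zip(wq, x)) - t  # loss uses the quantized weights
            for j, xj in enumerate(x):
                grad[j] += 2 * err * xj / len(X)
        # Straight-through estimator: treat the rounding step as the identity when
        # back-propagating, so the gradient w.r.t. wq updates the float weights directly.
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return [fake_quant(wi, scale, num_bits) for wi in w]


# Hypothetical toy task with orthogonal inputs and targets that lie on the 4-bit grid.
X = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]]
y = [0.5 * a - 0.25 * b for a, b in X]
print(qat_train(X, y, scale=0.125))
```

The toy data is chosen so the quantized weights can recover the targets exactly and the result is easy to check; on real networks, the payoff of training with quantization in the loop is higher accuracy at low bit widths than rounding after the fact.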

Knowledge distillation

Knowledge distillation is a technique in which a smaller model, the student, learns from a larger, pre-trained model, the teacher. This allows the student to capture the teacher's knowledge and generalization ability without replicating its size and complexity. Choosing an appropriate teacher is critical: it should be well trained and highly accurate on the target task.

Once a suitable teacher is chosen, the student is trained to match the teacher's outputs, typically its softened class probabilities, usually in combination with the ordinary ground-truth labels. This can significantly improve the smaller model's performance, often making it a viable alternative for tasks that would otherwise require a larger network, with comparable results at much lower resource cost.
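One widely used form of the distillation objective, the temperature-scaled soft-target loss popularized by Hinton et al., can be sketched in pure Python. It mixes a KL-divergence term between softened teacher and student distributions with ordinary cross-entropy on the true label. The temperature, the weighting `alpha`, and the logits below are hypothetical, and only the loss is shown; a training loop would minimize it with respect to the student's parameters.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """alpha * soft-target KL term + (1 - alpha) * cross-entropy on the true label."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions; the T^2 factor keeps its
    # gradient scale comparable to the hard-label term.
    soft = temperature ** 2 * sum(t * math.log(t / s) for t, s in zip(p_t, p_s) if t > 0)
    hard = -math.log(softmax(student_logits)[label])
    return alpha * soft + (1 - alpha) * hard


# Hypothetical logits for a 3-class problem whose true class is 0.
teacher = [4.0, 1.5, 0.2]
close_student = [3.8, 1.6, 0.1]
far_student = [0.1, 3.0, 2.0]
print(distillation_loss(close_student, teacher, label=0))
print(distillation_loss(far_student, teacher, label=0))
```

A student whose outputs resemble the teacher's gets a much lower loss than one that disagrees, which is the signal that pulls the student toward the teacher during training.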

Practical applications of neural network compression

Compressed neural networks are widely used in industries where the balance between performance and efficiency is paramount. In image recognition, for instance, organizations deploy compressed models that run efficiently on mobile devices or other low-power hardware with little loss of accuracy. This has proven valuable for applications such as facial recognition, object detection, and augmented reality.

Natural language processing (NLP) also benefits greatly from neural network compression. Compressed models make it possible to build fast, responsive chatbots, voice assistants, and translation services, so businesses can offer a better user experience while keeping operational costs in check. The growing industrial use of compressed networks underscores their value as tools that both save resources and improve service delivery.

Tools and libraries for neural network compression

A range of tools and libraries support neural network compression. The TensorFlow Model Optimization Toolkit is a popular option that provides pruning, quantization, and weight clustering for TensorFlow and Keras models. PyTorch likewise includes built-in utilities, such as the torch.nn.utils.prune module and its quantization APIs, for applying pruning and quantization within a PyTorch workflow.

To use these tools effectively, start by reading their documentation to understand the features they provide. With the TensorFlow toolkit, for instance, you can apply optimization techniques one after another in a structured way. In PyTorch, familiarizing yourself with the modules that match your compression needs will streamline your workflow. Used well, these tools make model optimization considerably more effective.

Evaluating the performance of compressed models

When evaluating compressed models, use metrics that reflect both sides of the trade-off. Key metrics include accuracy (or another task-specific quality measure), which shows how well the compressed model performs on the target task; inference latency, which measures how quickly the model processes inputs; and model size. Together, these metrics determine whether the trade-off between size and performance is acceptable for a specific application.

Additionally, compare compressed and uncompressed models directly. Benchmark both under the same conditions (same hardware, data, and batch size), measuring key indicators such as accuracy and speed. Plotting the results makes the differences easy to see and supports a more informed decision about which model to deploy in production.
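A minimal benchmarking harness, assuming each model is exposed as a plain predict function: it measures accuracy and median per-input latency for both variants on identical inputs. The two toy classifiers are hypothetical, labels are taken from the dense model so the comparison isolates what pruning changes, and pure-Python timings only illustrate the procedure; real measurements should run on the target hardware and runtime.

```python
import random
import statistics
import time


def benchmark(predict, inputs, labels, repeats=5):
    """Return (accuracy, median seconds per input) for a predict function."""
    accuracy = sum(predict(x) == y for x, y in zip(inputs, labels)) / len(labels)
    per_input = []
    for _ in range(repeats):  # repeat and take the median to reduce timing noise
        start = time.perf_counter()
        for x in inputs:
            predict(x)
        per_input.append((time.perf_counter() - start) / len(inputs))
    return accuracy, statistics.median(per_input)


# Hypothetical linear classifier and a pruned copy that keeps only its two largest weights.
dense_w = [2.0, -1.0, 0.01, -0.02]
sparse_w = {0: 2.0, 1: -1.0}  # index -> weight; pruned entries are simply not stored


def predict_dense(x):
    return int(sum(w * xi for w, xi in zip(dense_w, x)) > 0)


def predict_sparse(x):
    return int(sum(w * x[i] for i, w in sparse_w.items()) > 0)


rng = random.Random(1)
inputs = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(500)]
labels = [predict_dense(x) for x in inputs]  # reference labels from the dense model

for name, fn in [("dense", predict_dense), ("pruned", predict_sparse)]:
    acc, latency = benchmark(fn, inputs, labels)
    print(f"{name}: accuracy={acc:.3f}, latency={latency * 1e6:.2f} us/input")
```

Recording both numbers per variant in one loop keeps the comparison fair and produces exactly the pairs of values you would later plot.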

Challenges and limitations of neural network compression

Despite the advantages, there are notable challenges and limitations associated with neural network compression. One primary concern is the trade-off between compression and accuracy; aggressively pruning or quantizing a model might lead to reduced performance if not executed carefully. This necessitates finding an optimal balance that maintains model efficacy while achieving desired reductions in size.
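The trade-off can be made explicit by sweeping the compression level and keeping the most aggressive setting whose accuracy drop stays within a tolerance. This sketch assumes a hypothetical linear classifier, magnitude pruning, and a 2-percentage-point tolerance; in practice, evaluate on a held-out validation set and fine-tune after each pruning step before measuring.

```python
import random


def prune_smallest(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(sparsity * len(weights))
    drop = set(sorted(range(len(weights)), key=lambda j: abs(weights[j]))[:k])
    return [0.0 if j in drop else w for j, w in enumerate(weights)]


def accuracy(weights, inputs, labels):
    preds = [int(sum(w * xi for w, xi in zip(weights, x)) > 0) for x in inputs]
    return sum(p == t for p, t in zip(preds, labels)) / len(labels)


def choose_sparsity(evaluate, sparsities, baseline, max_drop):
    """Largest sparsity whose accuracy stays within `max_drop` of the baseline (0.0 if none)."""
    chosen = 0.0
    for s in sorted(sparsities):
        # Accuracy need not fall monotonically, so every level is checked.
        if baseline - evaluate(s) <= max_drop:
            chosen = s
    return chosen


# Hypothetical linear classifier; labels come from its own dense weights.
rng = random.Random(2)
dense = [3.0, -2.0, 1.0, 0.5, -0.1, 0.05, 0.02, -0.01]
inputs = [[rng.uniform(-1, 1) for _ in dense] for _ in range(1000)]
labels = [int(sum(w * xi for w, xi in zip(dense, x)) > 0) for x in inputs]


def evaluate(sparsity):
    return accuracy(prune_smallest(dense, sparsity), inputs, labels)


baseline = evaluate(0.0)  # 1.0 by construction
sweep = [0.25, 0.5, 0.75, 0.875]
for s in sweep:
    print(f"sparsity {s:.3f}: accuracy {evaluate(s):.3f}")
print("chosen:", choose_sparsity(evaluate, sweep, baseline, max_drop=0.02))
```

Light pruning removes only negligible weights, while the most aggressive levels discard weights that matter; the tolerance turns that curve into a concrete choice.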

A common issue during compression is poor recovery: after pruning or quantization, fine-tuning may struggle to converge back to the original accuracy. Future research may focus on more sophisticated algorithms that mitigate these problems and further improve the efficacy of compressed models.

Interactive tools for managing compressed models

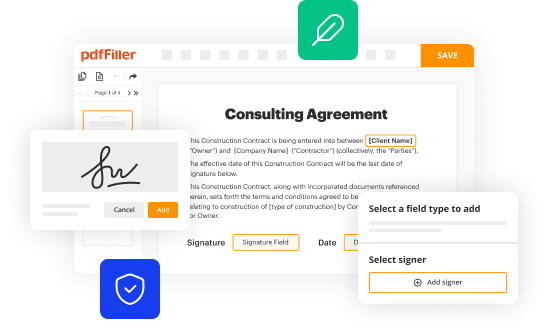

An often-overlooked aspect of neural network compression is the documentation and management of compressed models. Tools like pdfFiller enhance the process by enabling users to efficiently edit, sign, and collaborate on documents related to model development. This adds an essential layer of organization and professionalism to any machine learning project.

Using pdfFiller's document creation features, you can generate customized PDFs that outline your models' compression processes, methodologies, and results. The ability to create templates ensures that your documentation remains consistent and professional, which is vital for sharing insights with team members or stakeholders, or even for educational purposes.

Best practices for documenting neural network compression processes

Clear and comprehensive documentation is vital in the field of machine learning and neural network compression. Effective documentation practices can significantly enhance project communications and ensure that all team members are aligned with the latest updates and methodologies. Structuring your files logically based on project phases facilitates easy access and understanding.

Moreover, incorporating visual aids and diagrams can play an instrumental role in conveying complex ideas succinctly. This not only aids comprehension but also fosters collaboration among team members. Reviewing examples of well-documented projects can inspire your documentation style, ensuring that it meets high standards of clarity and professionalism.

Future trends in neural network compression

The field of neural network compression is evolving rapidly, with emerging techniques such as dynamic sparsity and hardware-aware training gaining traction. These approaches aim to achieve high compression rates while preserving model performance. As demand for efficient models grows, more refined techniques are likely to emerge, helping machine learning remain impactful across many sectors.

Additionally, the role of cloud-based solutions in compression strategies will likely expand. Leveraging cloud computing can facilitate more complex compression techniques without being constrained by local resource limitations. As organizations seek to optimize their models for a range of environments, keeping an eye on these trends will ensure you stay ahead of the curve in the field of neural network compression.
