Object Detection via a Multi-region & Semantic Segmentation-aware CNN Model

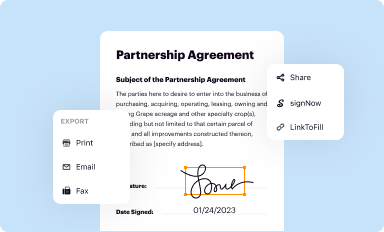

Get, Create, Make and Sign object detection via a

How to edit object detection via a online

Uncompromising security for your PDF editing and eSignature needs

How to fill out object detection via a

How to fill out object detection via a

Who needs object detection via a?

Object Detection via a Form: A Comprehensive Guide

Understanding Object Detection

Object detection is a computer vision task that involves identifying and locating objects within images or videos. While image classification simply categorizes images into predefined classes, object detection goes a step further by providing detailed bounding boxes around the identified objects, as well as class labels for each one. This capability is critical in a variety of applications, from self-driving cars that must recognize pedestrians and traffic signs to security systems that differentiate between authorized personnel and intruders.

Object detection plays a central role in safety, automation, and efficiency across many industries. In healthcare, for instance, it can support medical imaging analysis by helping to locate tumors or other abnormalities. In retail, it enables smart shopping features in which customers point their mobile devices at products to obtain information.

Historical Context of Object Detection

Object detection has evolved significantly over the last few decades. Early systems relied on hand-crafted features and simple classifiers; Haar cascades, for example, were widely used for face detection. Deep learning later made it possible to learn features directly from data, allowing for more robust detection systems.

Key milestones include the release of the R-CNN architecture in 2014, which combined region proposals with convolutional networks and substantially improved detection accuracy over earlier methods. It laid the groundwork for a series of advancements, including Fast R-CNN and Faster R-CNN, while single-stage frameworks such as YOLO later streamlined the detection process further.

The Object Detection Landscape

Object detection techniques can be broadly classified into traditional (non-neural) methods and neural network-based methods. Traditional pipelines pair hand-crafted feature descriptors, such as the Scale-Invariant Feature Transform (SIFT) or the Histogram of Oriented Gradients (HOG), with a separate classifier. Searching an image for objects this way can be computationally expensive, and these methods are generally less accurate than modern approaches.

Neural network-based methods have revolutionized object detection and now deliver state-of-the-art performance. Convolutional Neural Networks (CNNs) form the backbone of many detection systems because they learn spatial hierarchies of features directly from images. The YOLO (You Only Look Once) family, for example, is designed for real-time inference while keeping accuracy competitive, which has made it a common choice in scenarios demanding immediate results.

Comparative overview of popular object detection algorithms

Among specific algorithms, YOLO stands out because it predicts bounding boxes and class probabilities directly from the full image in a single network evaluation. R-CNN and its variants, such as Faster R-CNN and Mask R-CNN (which also predicts a segmentation mask for each object), instead work in two stages: they first propose candidate regions and then classify and refine each one. This two-stage design often yields higher accuracy at the cost of slower processing.
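
In the original YOLO (v1), the input image (448 × 448 pixels) is divided into an S × S grid with S = 7, and the grid cell that contains an object's center is responsible for predicting that object. The minimal sketch below shows only this assignment step; the (x_min, y_min, x_max, y_max) pixel box convention and the function name are assumptions made for illustration.

```python
def responsible_cell(box, image_size, grid_size):
    """Return (row, col) of the grid cell that contains the box center.

    box: (x_min, y_min, x_max, y_max) in pixels (assumed convention).
    image_size: (width, height) in pixels.
    grid_size: S, for an S x S grid (YOLOv1 used S = 7).
    """
    x_min, y_min, x_max, y_max = box
    width, height = image_size
    # Normalized center coordinates in the range 0..1.
    cx = (x_min + x_max) / 2 / width
    cy = (y_min + y_max) / 2 / height
    # min() keeps a center lying exactly on the right/bottom edge inside the grid.
    col = min(int(cx * grid_size), grid_size - 1)
    row = min(int(cy * grid_size), grid_size - 1)
    return row, col


# A 448x448 image split into a 7x7 grid: each cell covers 64x64 pixels.
print(responsible_cell((100, 200, 180, 300), (448, 448), 7))  # (3, 2)
```

Each cell in YOLOv1 then predicts a fixed number of boxes plus class probabilities, so a single forward pass covers the whole image.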

Other noteworthy algorithms include the Single Shot Detector (SSD) and RetinaNet, which strike a balance between detection accuracy and processing speed. EfficientDet is designed for efficiency: it uses a compound scaling method that jointly scales model depth, width, and input resolution, making it a popular choice in resource-constrained environments.

Understanding the YOLO architecture

The YOLO architecture starts with a backbone network, usually a CNN, whose convolutional layers extract essential features from the input image. A detection head then predicts bounding boxes from these features. From YOLOv2 onward, the head predicts boxes relative to anchor boxes: predefined boxes of various sizes and aspect ratios that serve as starting shapes, so the model only has to learn offsets from them.
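
YOLOv2 and YOLOv3 decode each raw prediction (t_x, t_y, t_w, t_h) against an anchor of size (p_w, p_h) in grid cell (c_x, c_y) as b_x = σ(t_x) + c_x, b_y = σ(t_y) + c_y, b_w = p_w·e^(t_w), b_h = p_h·e^(t_h). The sketch below applies that rule for a single prediction and converts to pixels by multiplying the center by the stride; the anchor size in the usage line is illustrative, and the function names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_box(t, cell, anchor, stride):
    """Decode raw outputs (tx, ty, tw, th) for one anchor in one grid cell.

    cell: (col, row) index of the grid cell.
    anchor: (anchor_w, anchor_h) prior size in input-image pixels.
    stride: input-image pixels per grid cell at this output scale.
    Returns (cx, cy, w, h) in input-image pixels.
    """
    tx, ty, tw, th = t
    col, row = cell
    anchor_w, anchor_h = anchor
    # The sigmoid keeps the predicted center inside its own cell.
    cx = (sigmoid(tx) + col) * stride
    cy = (sigmoid(ty) + row) * stride
    # exp() rescales the anchor and can never produce a negative size.
    w = anchor_w * math.exp(tw)
    h = anchor_h * math.exp(th)
    return cx, cy, w, h


# With all-zero outputs the box is the anchor itself, centered in its cell.
print(decode_box((0.0, 0.0, 0.0, 0.0), cell=(4, 6), anchor=(116, 90), stride=32))
# (144.0, 208.0, 116.0, 90.0)
```

Learning offsets from sensible priors, rather than raw box sizes, is what makes anchors helpful for training stability.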

Another critical aspect, introduced in YOLOv3, is multi-scale detection: predictions are made from feature maps at several resolutions within the network. Coarse feature maps suit large objects, while finer ones help detect small objects, which makes the model more robust to variation in object size.
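
YOLOv3, for example, predicts at three strides (8, 16, and 32 pixels per grid cell) with three anchors per scale. The small sketch below computes the grid size and the number of box predictions at each scale for a given square input; the function name is hypothetical.

```python
def prediction_counts(input_size, strides, anchors_per_scale):
    """Grid size and number of box predictions at each output scale."""
    counts = {}
    for stride in strides:
        grid = input_size // stride  # cells per side at this scale
        counts[stride] = (grid, grid * grid * anchors_per_scale)
    return counts


counts = prediction_counts(416, strides=(8, 16, 32), anchors_per_scale=3)
for stride, (grid, n) in counts.items():
    print(f"stride {stride:2d}: {grid}x{grid} grid, {n} boxes")
print("total:", sum(n for _, n in counts.values()))  # total: 10647
```

For a 416 × 416 input this gives 52 × 52, 26 × 26, and 13 × 13 grids. The finest grid (stride 8) has the most cells and handles small objects, while the coarsest covers large ones.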

The loss function is another integral component. It combines several terms: a localization loss for the box coordinates, a confidence (objectness) loss for whether a box contains an object, and a classification loss for the object's class. Training minimizes their weighted sum, tuning both where boxes are placed and how they are labeled.
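
As a minimal sketch, assuming the sum-squared formulation of the original YOLO (v1) paper with its weights λ_coord = 5 and λ_noobj = 0.5, the three terms can be combined as follows. This is simplified: each entry pairs one predictor with its target, the confidence target is 1 rather than the IoU that YOLOv1 uses, and the class term is computed per predictor instead of per grid cell. The dictionary layout is a hypothetical convention. Later YOLO versions replace some squared-error terms with cross-entropy and IoU-based losses.

```python
import math

LAMBDA_COORD = 5.0  # YOLOv1 weight: emphasize box-coordinate errors
LAMBDA_NOOBJ = 0.5  # YOLOv1 weight: down-weight the many empty predictors


def detection_loss(predictions, targets):
    """Simplified YOLOv1-style sum-squared detection loss.

    predictions: dicts with 'box' (x, y, w, h normalized to 0..1),
                 'conf' (objectness in 0..1) and 'probs' (class probabilities).
    targets: dicts with 'obj' (True if an object is assigned) and, when it is,
             'box' and one-hot 'probs'.
    """
    loc = conf_obj = conf_noobj = cls = 0.0
    for p, t in zip(predictions, targets):
        if t["obj"]:
            (px, py, pw, ph), (tx, ty, tw, th) = p["box"], t["box"]
            # Square roots make the same size error cost more on small boxes.
            loc += ((px - tx) ** 2 + (py - ty) ** 2
                    + (math.sqrt(pw) - math.sqrt(tw)) ** 2
                    + (math.sqrt(ph) - math.sqrt(th)) ** 2)
            # Simplification: confidence target is 1 instead of the IoU.
            conf_obj += (p["conf"] - 1.0) ** 2
            cls += sum((pc - tc) ** 2 for pc, tc in zip(p["probs"], t["probs"]))
        else:
            # Push predictors with no assigned object toward zero confidence.
            conf_noobj += p["conf"] ** 2
    return LAMBDA_COORD * loc + conf_obj + LAMBDA_NOOBJ * conf_noobj + cls


perfect = {"box": (0.5, 0.5, 0.25, 0.25), "conf": 1.0, "probs": [1.0, 0.0]}
truth = {"obj": True, "box": (0.5, 0.5, 0.25, 0.25), "probs": [1.0, 0.0]}
print(detection_loss([perfect], [truth]))  # 0.0
```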

Setting up for object detection

Before developing a custom object detection model, users must set up their environment. This involves installing dependencies such as TensorFlow or PyTorch, libraries that facilitate the implementation of deep learning models. Utilizing package management tools like pip or conda simplifies this process, allowing users to specify the required packages and their versions.

Once the environment is ready, import the necessary libraries into your code. These typically include OpenCV (imported as cv2) for image loading and processing, NumPy for numerical operations, and Matplotlib for visualizing output. A structured file organization also streamlines the development workflow as the implementation grows.
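
Before writing any model code, it can help to confirm that these packages are installed. The sketch below uses only the standard library's importlib.util.find_spec, which locates a module without importing it. The module lists are assumptions based on the libraries named above; note that pip package names can differ from import names (the opencv-python package provides cv2).

```python
import importlib.util

# Import names of the libraries discussed above (assumed list).
REQUIRED = ["cv2", "numpy", "matplotlib"]
# At least one deep learning framework is needed (import names).
FRAMEWORKS = ["torch", "tensorflow"]


def check_environment(required=REQUIRED, frameworks=FRAMEWORKS):
    """Report which modules can be found, without importing them."""
    status = {name: importlib.util.find_spec(name) is not None
              for name in list(required) + list(frameworks)}
    missing = [name for name in required if not status[name]]
    has_framework = any(status[name] for name in frameworks)
    return status, missing, has_framework


if __name__ == "__main__":
    status, missing, has_framework = check_environment()
    for name, ok in status.items():
        print(f"{name:12s} {'ok' if ok else 'MISSING'}")
    if missing or not has_framework:
        print("Install the missing packages with pip or conda before continuing.")
```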

Training a custom object detection model

Selecting an appropriate dataset is crucial for the success of training an object detection model. Popular datasets like COCO or Pascal VOC provide a rich array of annotated images that can be leveraged, or one might choose to create a custom dataset tailored to specific needs.

Annotating this data is also critical; tools like LabelImg and VoTT streamline the process by letting annotators draw a bounding box around each object and assign it a class label. Training the model then requires choosing hyperparameters such as learning rate, batch size, and number of epochs, each of which can significantly affect the model's performance.
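
LabelImg can save annotations either as Pascal VOC XML (corner coordinates in pixels) or in YOLO text format (class index plus normalized center, width, and height). Converting between the two is a common chore, sketched below for a single VOC file using the standard library's ElementTree. The function name and the class list in the example are hypothetical, and an unknown class name raises a ValueError from list.index.

```python
import xml.etree.ElementTree as ET


def voc_to_yolo(xml_text, class_names):
    """Convert one Pascal VOC annotation (XML string) to YOLO text lines.

    Each output line is "class_id x_center y_center width height",
    with coordinates normalized by the image width and height.
    """
    root = ET.fromstring(xml_text)
    img_w = float(root.find("size/width").text)
    img_h = float(root.find("size/height").text)
    lines = []
    for obj in root.iter("object"):
        class_id = class_names.index(obj.find("name").text)
        bb = obj.find("bndbox")
        x_min, y_min, x_max, y_max = (float(bb.find(tag).text)
                                      for tag in ("xmin", "ymin", "xmax", "ymax"))
        xc = (x_min + x_max) / 2 / img_w
        yc = (y_min + y_max) / 2 / img_h
        w = (x_max - x_min) / img_w
        h = (y_max - y_min) / img_h
        lines.append(f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}")
    return lines


example = """<annotation>
  <size><width>640</width><height>480</height><depth>3</depth></size>
  <object><name>dog</name>
    <bndbox><xmin>100</xmin><ymin>120</ymin><xmax>300</xmax><ymax>360</ymax></bndbox>
  </object>
</annotation>"""
print(voc_to_yolo(example, ["cat", "dog"]))
# ['1 0.312500 0.500000 0.312500 0.500000']
```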

Tracking evaluation metrics during and after training helps verify that the model meets the accuracy requirements of real-world use. Deployment targets range from web apps that serve real-time predictions to mobile applications where immediate, on-device detection is essential.

Using object detection in real scenarios

Object detection finds utility across a plethora of industries, each reaping its distinct benefits. In automated surveillance, systems utilize object detection algorithms to identify and track subjects, enhancing security protocols and response times. For quality control in manufacturing, object detection technology is employed to ensure products meet standards through defect detection during the production phase.

Another exciting application lies in augmented reality (AR), where object detection lets virtual elements respond to real objects in the user's physical surroundings. This creates more immersive experiences that appeal to tech-savvy users and to industries seeking new ways to engage customers.

Tools and platforms for object detection

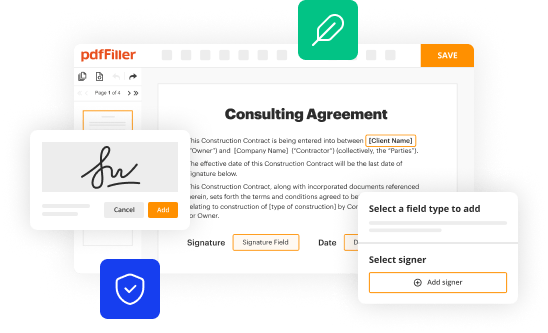

As technologies advance, numerous cloud-based solutions have emerged for document management and object detection integration. These platforms enable users to leverage powerful algorithms without the need for extensive local computing power. One such platform is pdfFiller, where users can access integrated object detection functionality within document workflows, enhancing productivity.

With pdfFiller, users can not only manage documents but also augment their forms with object detection capabilities, aiding in data collection and analysis. This combination proves invaluable for teams aiming to streamline their processes and improve accuracy in data handling.

Key performance metrics

Evaluating the effectiveness of object detection models involves several key performance metrics. Intersection over Union (IoU) quantifies the overlap between a predicted bounding box and the ground-truth box: the area of their intersection divided by the area of their union, ranging from 0 (no overlap) to 1 (a perfect match). A detection is usually counted as correct only if its IoU with a ground-truth box exceeds a threshold, commonly 0.5.
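
IoU can be computed directly from box corners. The minimal sketch below assumes boxes given as (x_min, y_min, x_max, y_max) in continuous coordinates; the function name is hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes give an empty intersection.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 10, 10), (5, 5, 15, 15)))  # 25 / 175, about 0.143
```

Two 10 × 10 boxes offset by half their width overlap in a 5 × 5 square, so even this visibly overlapping pair falls well below a 0.5 threshold.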

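Mean Average Precision (mAP) gives a more comprehensive measure of model performance. Detections are ranked by confidence to trace a precision-recall curve; average precision (AP) summarizes the area under that curve for one class, and mAP averages AP over all classes (and, in the COCO benchmark, also over IoU thresholds from 0.5 to 0.95). Tracking both detection accuracy and efficiency helps refine the model and ensure it meets end-user requirements.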
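
The sketch below computes AP for one class with all-point interpolation, as used by Pascal VOC since 2010 (COCO instead samples 101 recall points). It assumes the matching step has already been done: each detection arrives as a (score, is_true_positive) pair, where a true positive matched a previously unmatched ground-truth box at the chosen IoU threshold. Function names and the input layout are hypothetical.

```python
def average_precision(detections, num_gt):
    """All-point interpolated AP for one class.

    detections: list of (score, is_tp) pairs (matching assumed done already).
    num_gt: number of ground-truth boxes of this class.
    """
    if num_gt == 0:
        return 0.0
    detections = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in detections:
        tp += is_tp
        fp += not is_tp
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Interpolation: make precision non-increasing from right to left.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas under the interpolated precision-recall curve.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class):
    """per_class: dict mapping class name -> (detections, num_gt)."""
    aps = [average_precision(d, n) for d, n in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0


# Two ground-truth boxes; the middle-ranked detection is a false positive.
print(average_precision([(0.9, True), (0.8, False), (0.7, True)], num_gt=2))  # about 0.833
```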

Future trends in object detection

As technology evolves, so do the capabilities of object detection. Emerging techniques such as transformers and self-supervised learning are reshaping the landscape and opening avenues for more efficient and sophisticated algorithms. Future detectors are likely to aim for higher accuracy with a smaller computational footprint, making them practical for mobile and edge computing.

Furthermore, the integration of object detection in real-time applications will continue to expand, enhancing user experience and enabling smarter systems that can interact with users in meaningful ways.

Innovative strategies for improving object detection

To address common challenges in object detection, several strategies are widely used. Data augmentation techniques, such as flipping, rotation, and color variation, enlarge the effective training set and can improve the model's robustness by reducing overfitting. Geometric transforms must be applied to the bounding boxes as well as to the image, so that the labels still match the objects.
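
Horizontal flipping is a simple example of keeping boxes consistent with a geometric augmentation: a box spanning x_min to x_max in an image of width W moves to W − x_max to W − x_min, while its y coordinates are unchanged. The minimal sketch below assumes an image stored as a list of pixel rows and boxes as (x_min, y_min, x_max, y_max) in pixels; in practice a library such as OpenCV or an augmentation framework would handle the image itself. Function names are hypothetical.

```python
import random


def hflip(image, boxes):
    """Mirror an image left-right and move its boxes to match.

    image: list of pixel rows (each row a list); width = len(row).
    boxes: list of (x_min, y_min, x_max, y_max) in pixel coordinates.
    """
    width = len(image[0])
    flipped_image = [row[::-1] for row in image]
    flipped_boxes = [(width - x_max, y_min, width - x_min, y_max)
                     for x_min, y_min, x_max, y_max in boxes]
    return flipped_image, flipped_boxes


def random_hflip(image, boxes, p=0.5, rng=random):
    """Apply hflip with probability p (0.5 is a common choice)."""
    if rng.random() < p:
        return hflip(image, boxes)
    return image, boxes


image = [[0, 1, 2, 3],
         [4, 5, 6, 7]]  # 2 rows x 4 columns
print(hflip(image, [(0, 0, 1, 2)]))
# ([[3, 2, 1, 0], [7, 6, 5, 4]], [(3, 0, 4, 2)])
```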

Leveraging transfer learning from pre-trained models is another effective strategy, allowing users to begin with established models and fine-tune them for their specific task rather than training from scratch. These approaches help tackle common limitations faced in real-world object detection scenarios, ensuring higher accuracy and functionality.

Step-by-step guide to creating and managing object detection forms

Creating effective forms for data collection in the context of object detection can be accomplished through pdfFiller. Users can access a suite of object detection tools, filling out forms seamlessly to collect relevant data, such as annotating objects within images. The platform’s user-friendly interface makes it easy to customize fields specifically tailored to unique project requirements, ensuring efficient data management.

Once the form is filled out, collaborative features within pdfFiller facilitate electronic signing and sharing, enabling teams to engage in rapid iterations and feedback loops that enhance both content and compliance. This interactive experience not only fosters teamwork but also enriches the data quality collected for detection purposes.

Final thoughts on object detection via forms

In summary, combining object detection with form management in pdfFiller gives users a practical way to organize their work. By applying object detection methods to form data, teams can manage data collection strategically and keep their workflows precise and efficient. Effective documentation also plays an important role in supporting object detection processes.

Choosing the right tools and services, such as those offered by pdfFiller, promotes increased accuracy and helps drive innovation while simplifying the complexities of document management in a data-driven landscape.

Community involvement and resources

Engaging with the community around object detection can be incredibly beneficial for individuals and teams looking to innovate and improve their practices. Forums and discussion groups provide platforms for enthusiasts and experts alike to exchange ideas, share insights, and contribute to the evolving field of computer vision.

Learning from real-world case studies and success stories can also illuminate best practices and inspire practitioners to adopt novel approaches. These contributions are invaluable as the landscape of object detection continues to advance, encouraging broader participation and exploration within the community.
