Apache Spark form: A comprehensive how-to guide

Understanding Apache Spark and its use cases

Apache Spark is an open-source distributed computing engine, designed for speed and ease of use, that processes large datasets across a cluster of machines. Unlike traditional batch-only systems, Spark supports both batch and near-real-time stream processing, and it keeps intermediate data in memory where possible, which often makes iterative and interactive workloads much faster than disk-based processing.

Key features of Apache Spark include APIs in several programming languages, such as Java, Scala, Python, and R, and a rich ecosystem of libraries for machine learning (MLlib), graph processing (GraphX), and SQL queries (Spark SQL). Because all of these run on one unified engine, a single application can combine different analytics workloads, which reduces the complexity typically associated with big data.

Forms and templates in Apache Spark

In the context of Apache Spark, forms refer to the data structures that facilitate the organization and manipulation of data. These forms are essential for ensuring that data is structured correctly and accessible for analysis, particularly when using various Spark capabilities.

There are several types of forms commonly used in Spark applications, including DataFrame forms, RDD forms, and SQL forms. Each type serves unique purposes and suits different data processing needs, making understanding their applications vital for any Spark user.

Creating and managing forms in Apache Spark

Creating and managing forms in Apache Spark involves several key steps that set the foundation for effective data analysis. The first step is to set up your Spark environment, ensuring that you have the necessary libraries and dependencies installed.

Next, connect to your data sources, such as relational databases, HDFS, or object storage like Amazon S3. Defining schemas for DataFrames is crucial, because the schema determines how Spark interprets and interacts with the data. Inconsistencies in the schema can cause errors or unexpected results during data processing and analysis.

Detailed insights into Apache Spark forms

DataFrames in Apache Spark serve as one of the most powerful tools for managing structured data. Given their performance capabilities, DataFrames excel in scenarios requiring efficient operations on large datasets, such as aggregations, filtering, and transformations. The syntax utilized for operations on DataFrames is intuitive, allowing users to perform complex queries with minimal code.

Spark SQL, in turn, lets users run SQL queries against DataFrames that have been registered as tables or temporary views. Both SQL queries and DataFrame operations are planned by the Catalyst optimizer, so the two styles can be mixed within one application. This eases the transition for users moving from SQL databases to big data applications.

RDDs (Resilient Distributed Datasets), by contrast, are most useful when working with unstructured data or when transformations need more explicit, low-level control over the dataset. Because RDD operations bypass the Catalyst optimizer, choosing between RDDs and DataFrames can significantly affect both performance and ease of use.

Advanced form features in Apache Spark

One advanced feature of Apache Spark is Structured Streaming, which processes streaming data in near real time. Applications can use it to handle live data streams and act on time-sensitive information such as website analytics or financial transactions. By default it processes data incrementally in small micro-batches using the same DataFrame API as batch jobs, which extends Spark beyond batch processing to continuously updated results.

The ecosystem around Spark also provides connectors to many external data sources, which supports its role as a unified engine for large-scale data analytics. Users can therefore work with consistent tools and APIs across workloads, which simplifies data workflows. Compared with other big data frameworks, this versatility is often an advantage for complex analytic tasks, although actual performance depends on the workload and configuration.

Configuration and environment for Apache Spark forms

For good performance, the Spark environment must be configured correctly. Memory properties have a large impact on how forms are processed: spark.driver.memory and spark.executor.memory, for example, determine how much memory the driver and each executor can use, which in turn affects how much data can be held in memory at once and therefore computation speed.

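Environment variables also matter when running Spark applications: JAVA_HOME and SPARK_HOME, for example, tell Spark which Java runtime and Spark installation to use. Scheduling, however, is controlled through Spark configuration properties rather than environment variables. Setting spark.scheduler.mode to FAIR, for instance, lets concurrent jobs within an application share resources instead of running strictly first in, first out, which helps align resource allocation with workload demands during peak times. Tuning these settings carefully can noticeably improve job performance.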

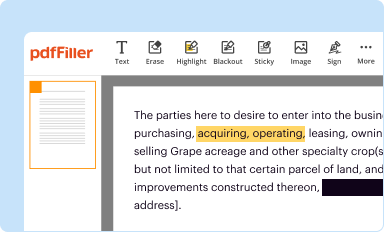

Tools and techniques for form editing and management

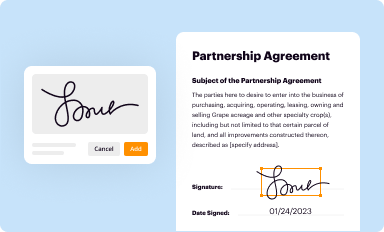

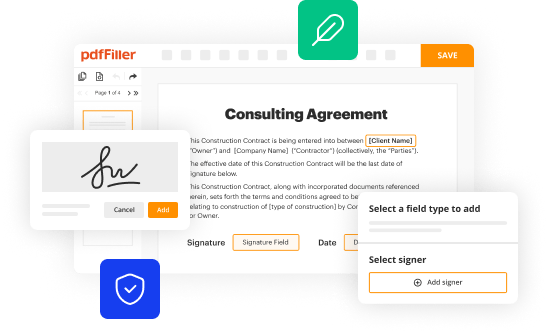

For managing documentation around Apache Spark forms, interactive tools like pdfFiller can streamline the process. Users can edit PDF versions of Spark documentation through a user-friendly interface, which makes collaboration on documents easier.

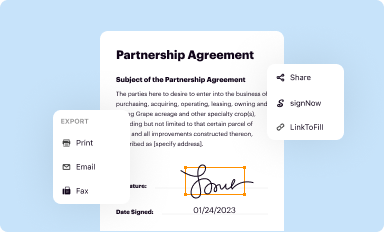

Moreover, pdfFiller offers eSignature features, ensuring secure signing of critical documents and forms, which is particularly valuable in collaborative settings where multiple stakeholders interact with data-driven projects. The collaboration capabilities allow teams to manage document workflows efficiently, making it easier to track changes and maintain version control.

Troubleshooting common issues with Apache Spark forms

Like any robust system, Apache Spark forms come with challenges. Common issues include schema mismatches and connectivity failures with data sources. A practical way to debug them is to enable detailed logging and to monitor the Spark UI, which shows job progress, stage and task details, and failures.

Maintaining the integrity of forms in Spark is vital for ensuring accurate data processing. Best practices involve regularly validating schemas, managing dependencies between different forms, and ensuring that any changes in form structure do not disrupt existing workflows.

Best practices for working with Apache Spark forms

To work efficiently with Apache Spark forms, a few best practices are key. Manage DataFrames and RDDs deliberately: cache data that is reused, release it when it is no longer needed, and choose a sensible number of partitions. Handle sensitive information in forms securely as well, especially in organizations whose data is governed by strict privacy standards.

Efficient collaboration strategies also play a significant role in fostering teamwork, particularly in projects involving multiple data stakeholders. Regular training and updated documentation can empower team members to leverage Apache Spark's features effectively, ultimately enhancing productivity and analytics capabilities.

Future trends in Apache Spark form management

The landscape of big data tools is continually evolving, and Apache Spark is no exception. Trends in form management such as greater automation and AI integration are likely to reshape how teams interact with data. Predictive analytics and machine learning capabilities within Spark are expected to expand, helping teams draw deeper insights from their data.

As the demand for real-time data analytics rises, Spark's ability to scale and manage various workloads flexibly will position it as a leading choice among big data frameworks. Teams leveraging these capabilities will thrive in increasingly competitive environments, making form management a vital component of data strategy going forward.
