Get the free Occupancy-based Policy Gradient: Estimation, Convergence, and Optimality

How to fill out occupancy-based policy gradient estimation

Who needs occupancy-based policy gradient estimation?

Understanding Occupancy-Based Policy Gradient Estimation Form

Overview of occupancy-based policy gradient estimation

Occupancy-based policy gradient estimation is an approach in reinforcement learning that estimates the gradient of the expected return with respect to the policy parameters using occupancy measures. It focuses on how often specific states and actions are visited under a given policy, and that visitation information can make training complex models more effective.

Policy gradient methods play a central role in reinforcement learning workflows: they optimize a policy directly by following the gradient of its performance. With occupancy-based techniques, researchers and practitioners can refine a policy based on the distribution of states and actions it encounters, which can lead to more efficient learning in environments that require nuanced decision-making.

Occupancy-based methods can be particularly useful when dealing with high-dimensional or continuous action spaces, where they may use the available data more effectively than traditional policy gradient methods.

Understanding occupancy measures

Occupancy measures capture how frequently each state-action pair is visited during interaction with an environment. In the context of policy gradient methods, they quantify how often a given policy reaches each state and takes each action there, which summarizes the interaction dynamics that the policy induces.

Mathematically, an occupancy measure is a distribution over state-action pairs, and it can be estimated from frequency counts over trajectories generated by the policy. These measures matter because they capture how a change in the policy changes the overall behavior of the system, which makes them integral to the optimization process.
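One common way to make this precise is the normalized discounted occupancy. The notation here is an assumption introduced for illustration and does not appear in the text above: a discount factor $\gamma \in [0, 1)$, an initial state distribution $\mu_0$, a policy $\pi$, and a reward function $r$. Under those assumptions, the occupancy and the expected return $J(\pi)$ can be written as:

```latex
d^{\pi}(s, a) = (1 - \gamma) \sum_{t=0}^{\infty} \gamma^{t}
  \Pr\left(s_t = s,\ a_t = a \mid s_0 \sim \mu_0,\ \pi\right),
\qquad
J(\pi) = \sum_{t=0}^{\infty} \gamma^{t}\, \mathbb{E}\left[r(s_t, a_t)\right]
       = \frac{1}{1 - \gamma}\, \mathbb{E}_{(s, a) \sim d^{\pi}}\left[r(s, a)\right].
```

The factor $(1 - \gamma)$ makes $d^{\pi}$ sum to one, so the return is an ordinary expectation of the reward under the occupancy, rescaled by $1 / (1 - \gamma)$.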

For instance, in robotic navigation, occupancy measures can help in understanding how often specific paths (state-action pairs) lead to successful navigation outcomes, facilitating more informed policy adjustments.
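As a rough illustration of the frequency-count idea, the sketch below estimates a discounted occupancy from logged trajectories. The function name `empirical_occupancy`, the discount factor, and the toy navigation log are all hypothetical and chosen only for this example.

```python
from collections import defaultdict

def empirical_occupancy(trajectories, gamma):
    """Estimate a discounted occupancy from logged trajectories.

    Each trajectory is a list of (state, action) pairs. A visit at step t
    receives weight (1 - gamma) * gamma**t, averaged over all trajectories.
    """
    occupancy = defaultdict(float)
    n = len(trajectories)
    for trajectory in trajectories:
        for t, (state, action) in enumerate(trajectory):
            occupancy[(state, action)] += (1.0 - gamma) * gamma**t / n
    return dict(occupancy)

# Hypothetical toy log: two short navigation episodes.
logged = [
    [("start", "right"), ("hall", "right"), ("goal", "stay")],
    [("start", "up"), ("start", "right"), ("hall", "right")],
]
occ = empirical_occupancy(logged, gamma=0.9)
```

Because the episodes here are truncated after three steps, the estimated masses add up to `1 - gamma**3` rather than 1; longer trajectories bring the total closer to 1.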

The role of policy gradient estimations

Policy gradient estimation methods are at the heart of many contemporary reinforcement learning frameworks. They optimize policy parameters directly with respect to the expected reward, creating an iterative feedback loop from the actions taken. What sets them apart is that they parameterize the policy itself and update it using gradients derived from observed outcomes.

One significant advantage of policy gradient approaches emerges when they are contrasted with value-based methods. Value-based approaches estimate value functions and derive policies from them indirectly, whereas policy gradients, including those that use occupancy measures, optimize the policy's outputs directly. This direct approach can make learning more flexible and robust, especially in scenarios where value functions alone may struggle.
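To make the occupancy view of the gradient concrete, here is a minimal sketch on a made-up two-state, two-action problem with a tabular softmax policy. It evaluates the standard policy gradient identity, in which the gradient equals the occupancy-weighted sum of score times action value, divided by `1 - GAMMA`. The dynamics `P`, rewards `R`, start distribution `MU0`, and all function names are assumptions for illustration, not part of any particular library or of the method described above.

```python
import math

GAMMA = 0.9
MU0 = [1.0, 0.0]                       # initial state distribution (assumed)
P = [[[0.8, 0.2], [0.1, 0.9]],         # P[s][a][s_next], made-up dynamics
     [[0.5, 0.5], [0.0, 1.0]]]
R = [[0.0, 1.0], [2.0, 0.0]]           # R[s][a], made-up rewards
S, A = range(2), range(2)

def softmax_policy(theta):
    """pi[s][a] proportional to exp(theta[s][a])."""
    pi = []
    for row in theta:
        exps = [math.exp(x - max(row)) for x in row]
        pi.append([e / sum(exps) for e in exps])
    return pi

def q_values(pi, iters=500):
    """Iterative policy evaluation for the action values of pi."""
    q = [[0.0 for _ in A] for _ in S]
    for _ in range(iters):
        v = [sum(pi[s][a] * q[s][a] for a in A) for s in S]
        q = [[R[s][a] + GAMMA * sum(P[s][a][s2] * v[s2] for s2 in S)
              for a in A] for s in S]
    return q

def occupancy(pi, iters=500):
    """Normalized discounted occupancy d[s][a], via the flow recursion."""
    d_state = list(MU0)
    for _ in range(iters):
        d_state = [(1 - GAMMA) * MU0[s]
                   + GAMMA * sum(d_state[s0] * pi[s0][a] * P[s0][a][s]
                                 for s0 in S for a in A)
                   for s in S]
    return [[d_state[s] * pi[s][a] for a in A] for s in S]

def expected_return(theta):
    """Discounted return from the start distribution under softmax(theta)."""
    pi = softmax_policy(theta)
    q = q_values(pi)
    return sum(MU0[s] * pi[s][a] * q[s][a] for s in S for a in A)

def occupancy_policy_gradient(theta):
    """Gradient of expected_return: sum of d * score * Q, over (1 - GAMMA)."""
    pi = softmax_policy(theta)
    q, d = q_values(pi), occupancy(pi)
    grad = [[0.0 for _ in A] for _ in S]
    for s in S:
        for a in A:            # parameter theta[s][a]
            for b in A:        # action b, weighted by d[s][b]
                # Derivative of log pi(b|s) with respect to theta[s][a].
                score = (1.0 if a == b else 0.0) - pi[s][a]
                grad[s][a] += d[s][b] * score * q[s][b] / (1 - GAMMA)
    return grad

theta = [[0.2, -0.1], [0.0, 0.3]]
grad = occupancy_policy_gradient(theta)
```

In this sketch the occupancy is computed exactly from known dynamics. In practice it would have to be estimated from data, which is where the estimation error comes from. Comparing `grad` against finite differences of `expected_return` is a simple sanity check.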

Step-by-step guide to using the occupancy-based policy gradient estimation form

Using the occupancy-based policy gradient estimation form effectively requires careful preparation and structured execution. Below is a concise guide elaborating on each step.

Preparation

Before beginning, ensure that you have the necessary computing software and specified data inputs to support your computational needs. It’s essential to clarify the desired outcome of your policy gradient estimation to align your actions effectively.

Data collection

Gathering relevant data is fundamental to the process. Sources may include recorded simulations, historical logs from prior experiments, or even synthetic data generated from model environments. Depending on your specific scenario, implement tools such as web scraping software or data integration tools to efficiently compile your data.

Form setup and configuration

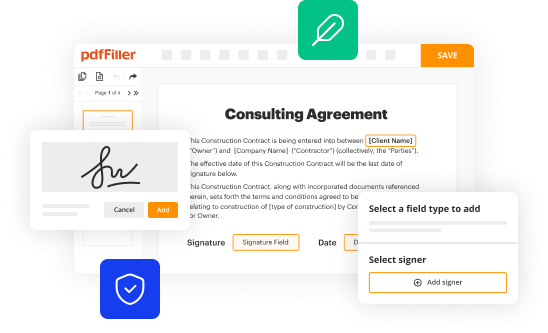

Setting up your form on pdfFiller is straightforward. Follow these steps to start: log into your account, navigate to the form template library, and select the designated occupancy-based policy gradient estimation form. Once selected, customize the fields as required to suit your specific data inputs and objectives.

Filling out the form

Entering data into the form should be approached systematically. Ensure that all necessary fields are filled accurately. To avoid common mistakes, double-check your dataset inputs before submission, as an error in the data can propagate through your models and yield incorrect estimations.

Editing and modifying the form

After filling out the form, editing capabilities within pdfFiller allow you to revise your entries effortlessly. Use the editing tools to adjust specific entries without needing to start afresh, promoting efficiency in your workflow.

Collaborating with your team

Utilizing pdfFiller's collaboration features will enable you to effectively work with your team. Share documents with designated access roles, ensuring that everyone involved can view and edit where appropriate while maintaining document integrity.

Signing and finalizing the form

Once the data is finalized, utilize pdfFiller’s digital signature functionality to certify your document. Make sure all signatures are in place and all necessary annotations are complete before considering the form ready for submission.

Advanced techniques in occupancy-based policy gradient estimation

Exploring advanced techniques within occupancy-based policy gradient estimation can reveal further efficiencies and optimizations. One promising area is the integration of other estimation strategies that leverage existing data more effectively, providing richer insights and results.

To enhance performance, consider techniques designed to reduce the variance of policy gradient estimates. Subtracting a baseline, for example, can stabilize the learning process and yield more reliable estimates from fewer samples. Recent work in the field, including neural network architectures and hierarchical reinforcement learning strategies, has shown promising results for more sophisticated applications in real-world environments.
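The effect of a baseline can be checked exactly on a tiny example. The sketch below assumes a one-state problem with a softmax policy over three actions and made-up rewards, and computes the exact mean and total variance of the single-sample estimator `score * (reward - baseline)`. The helper names and numbers are hypothetical, and the expected reward is used as one common baseline choice, not necessarily the variance-optimal one.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    z = sum(exps)
    return [e / z for e in exps]

def estimator_stats(logits, rewards, baseline):
    """Exact mean and total variance of score(a) * (r(a) - baseline), a ~ pi."""
    pi = softmax(logits)
    n = len(logits)
    samples = []
    for a in range(n):
        # Gradient of log pi(a) with respect to each logit k.
        score = [(1.0 if k == a else 0.0) - pi[k] for k in range(n)]
        samples.append([g * (rewards[a] - baseline) for g in score])
    mean = [sum(pi[a] * samples[a][k] for a in range(n)) for k in range(n)]
    var = sum(pi[a] * sum((samples[a][k] - mean[k]) ** 2 for k in range(n))
              for a in range(n))
    return mean, var

logits = [0.0, 0.5, -0.5]          # made-up policy parameters
rewards = [10.0, 11.0, 9.0]        # made-up rewards with a large common offset
value_baseline = sum(p * r for p, r in zip(softmax(logits), rewards))

mean_plain, var_plain = estimator_stats(logits, rewards, 0.0)
mean_base, var_base = estimator_stats(logits, rewards, value_baseline)
```

Both estimators have the same mean, because the score has zero expectation under the policy, while the baselined version has a much smaller variance in this example since it removes the large common offset in the rewards.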

Troubleshooting common issues

Problems can arise at various stages of the occupancy-based policy gradient estimation process. Common issues include data inconsistencies, calculation errors, and unexpected outcomes. A proactive approach involves thoroughly validating data inputs before processing and utilizing debugging tools within software to identify discrepancies early. Maintain a clear record of changes made during the estimation to facilitate problem resolution.
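As one possible form of input validation, the sketch below checks that an estimated occupancy table looks like a valid distribution before it is used downstream. The function `validate_occupancy`, its parameters, and the tolerance are hypothetical choices for illustration.

```python
import math

def validate_occupancy(occupancy, expected_total=1.0, tol=1e-8):
    """Return a list of human-readable problems found in an occupancy table.

    `occupancy` maps (state, action) pairs to estimated masses.
    """
    problems = []
    for key, mass in occupancy.items():
        if not math.isfinite(mass):
            problems.append(f"non-finite mass for {key}: {mass}")
        elif mass < 0:
            problems.append(f"negative mass for {key}: {mass}")
    total = sum(occupancy.values())
    if math.isfinite(total) and abs(total - expected_total) > tol:
        problems.append(f"total mass {total} differs from {expected_total}")
    return problems

# Example: a negative entry is reported even though the total looks fine.
issues = validate_occupancy({("s0", "left"): -0.1, ("s0", "right"): 1.1})
```

An empty list means no problems were detected by these checks. When trajectories are truncated, `expected_total` can be set below 1 to match the discounted mass actually covered.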

To ensure form accuracy and efficiency, conduct regular reviews of your inputs, calculations, and model outcomes. Engaging in iterative testing can often highlight areas for refinement or correction.

Practical applications and case studies

There are numerous real-life applications where occupancy-based policy gradient estimation has made a significant impact. For instance, in autonomous vehicle navigation, employing these methods allows systems to better understand movement patterns and optimize routing in complex urban environments. Case studies reveal notable success in diverse application areas, including robotics, resource allocation in networks, and game-playing AI, showcasing how leveraging occupancy-based estimations leads to better decision-making outcomes.

Success stories indicate that organizations applying these techniques experienced improvements in efficiency and accuracy, demonstrating the practical importance of understanding how policies affect state-action distributions.

Leveraging pdfFiller for enhanced form management

pdfFiller offers a robust platform designed for effective document management, enhancing the user experience in filling out and managing the occupancy-based policy gradient estimation form. The platform’s cloud-based infrastructure ensures accessibility from anywhere, facilitating real-time collaboration and updates without the need for cumbersome file transfers.

Utilizing features such as automatic version control, comments, and stored templates can maximize the efficiency of ongoing projects, ensuring that users have the necessary tools to keep their documents organized and current. It also empowers teams to strategize and optimize their workflows by reducing bureaucratic overhead.

Getting support and feedback

Accessing customer support through pdfFiller is user-friendly, with dedicated channels available for immediate assistance regarding your form-related queries. The comprehensive Help Center and responsive customer service can quickly resolve any issues you encounter while working with your documents.

Moreover, utilizing feedback mechanisms for form improvement will help optimize future uses, enabling users to share insights on what worked or what could be enhanced in the form’s layout and functions.

pdfFiller is an end-to-end solution for managing, creating, and editing documents and forms in the cloud. Save time and hassle by preparing your tax forms online.