Get the free Stochastic Chameleons: Irrelevant Context Hallucinations Reveal Class-based (mis)gen...

Get, Create, Make and Sign stochastic chameleons irrelevant context

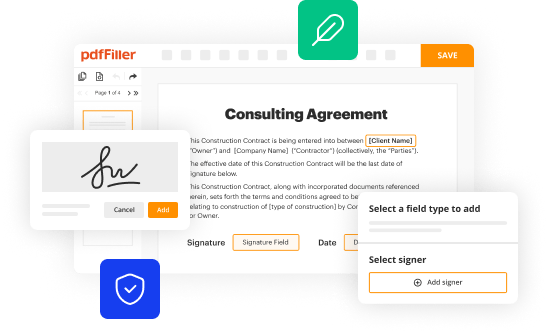

Editing stochastic chameleons irrelevant context online

Uncompromising security for your PDF editing and eSignature needs

How to fill out stochastic chameleons irrelevant context

How to fill out stochastic chameleons irrelevant context

Who needs stochastic chameleons irrelevant context?

Understanding stochastic chameleons and their implications in language models

Understanding stochastic chameleons

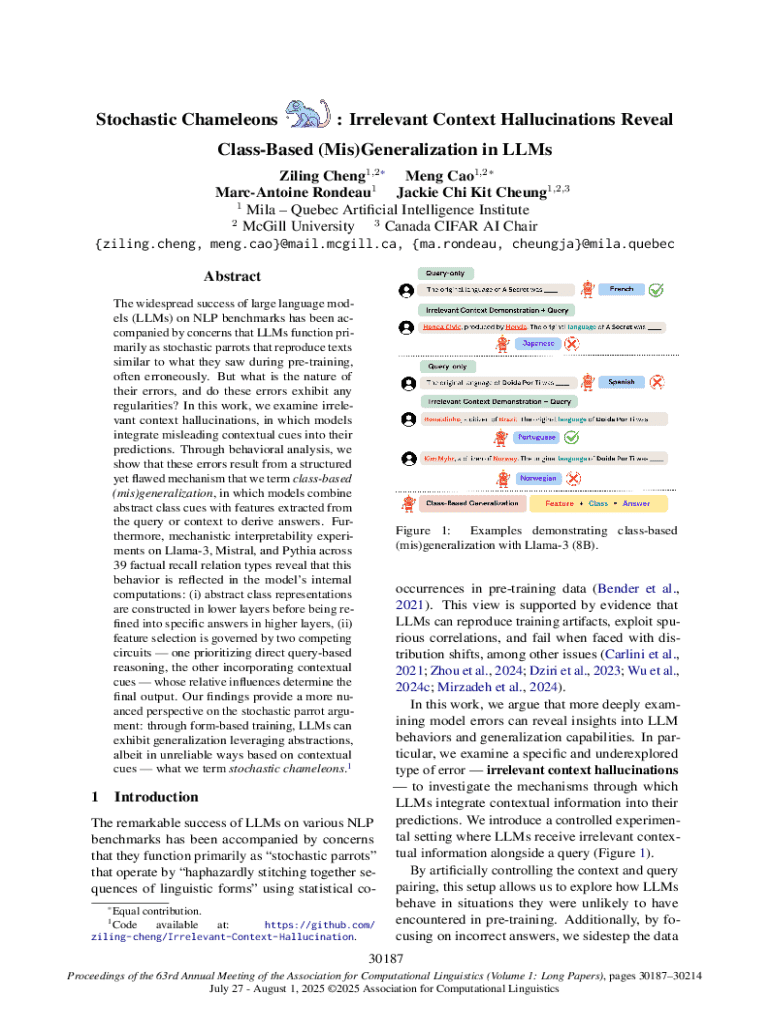

Stochastic chameleons refer to the adaptive behavior observed in certain language models: a tendency to adjust output to the surrounding context, sometimes overextending or misapplying context that is irrelevant to the query. The term originates in research on generative AI and highlights the complex interaction between input data and model output. In large language models in particular, irrelevant context can lead a model to misread situational cues and generate output that does not match the query's intent.

Stochastic chameleon behavior can be illustrated with scenarios from natural language processing (NLP). For example, when a model trained primarily on formal documents encounters conversational data, it may erroneously apply academic language structures, resulting in a tone and style that feels out of place. Examples like this underscore the contrast between models that show stochastic chameleon tendencies and those that keep to a consistent output style.

Mechanisms behind irrelevant context hallucinations

The hallucinations generated by irrelevant context in language models are notably tied to how data is encoded and represented. When a model encounters data during training that is either too broad or irrelevant, its ability to accurately mimic human language can falter. This misrepresentation can lead to misgeneralizations that contextualize information inappropriately, resulting in responses that deviate significantly from user expectations.

One prominent mechanism behind this misgeneralization is class-based failure. When a model organizes what it has learned into classes, it may erroneously carry traits of one class over to a seemingly unrelated one, leading to inappropriate conclusions. For instance, a model trained predominantly on technical writing may misinterpret creative prompts as technical inquiries, producing outputs that lack the intended creativity.

The impact of stochastic chameleons on performance

Evaluating the effectiveness of language models requires awareness of how stochastic chameleons affect performance metrics. Accuracy, coherence, and relevance are the primary metrics for measuring output quality. When a model misapplies context, it risks not only generating irrelevant content but also losing the user’s trust, particularly in applications like legal document drafting or customer-facing chatbots.
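One way to make the effect on accuracy concrete is to score the same questions twice, once with a clean prompt and once with irrelevant context added, and compare the results. The sketch below assumes that paired outputs have already been collected and that answers are compared by exact string match. The `PairedResult` layout and the `context_sensitivity` name are illustrative and not taken from any particular benchmark.

```python
from dataclasses import dataclass


@dataclass
class PairedResult:
    """One question answered with and without irrelevant context (hypothetical layout)."""
    expected: str
    clean_answer: str
    distracted_answer: str


def context_sensitivity(results: list[PairedResult]) -> dict[str, float]:
    """Return accuracy on clean and distracted prompts plus the answer flip rate."""
    if not results:
        raise ValueError("results must not be empty")
    n = len(results)
    clean_correct = sum(r.clean_answer == r.expected for r in results)
    distracted_correct = sum(r.distracted_answer == r.expected for r in results)
    # A flip is any change of answer, whether or not the clean answer was correct.
    flipped = sum(r.clean_answer != r.distracted_answer for r in results)
    return {
        "clean_accuracy": clean_correct / n,
        "distracted_accuracy": distracted_correct / n,
        "flip_rate": flipped / n,
    }
```

A large gap between the two accuracy figures, or a high flip rate, suggests that the model's answers depend on context that should not matter.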

Understanding model limitations becomes crucial, especially in high-stakes environments. Certain situations, such as those demanding precise legal language or medical terminology, highlight where misgeneralizations can have serious implications. Misinterpretations can mislead users or induce errors, sparking a need for vigilance in language model applications.

Techniques for mitigating hallucinations

To combat the challenges posed by stochastic chameleons, several techniques can be employed to enhance training data quality. Ensuring that models encounter diverse and representative samples during training is paramount. This diversity can aid in refining the model’s ability to accurately contextualize prompts, thereby minimizing instances of irrelevant context hallucinations.
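A simple first check on diversity is to look at how the training samples are distributed across classes. The sketch below assumes each sample carries a class label, such as a register or domain tag. The function name and the 10% threshold are hypothetical choices for illustration, not an established standard.

```python
from collections import Counter


def underrepresented_classes(labels: list[str], min_share: float = 0.1) -> dict[str, float]:
    """Return each class whose share of the samples falls below min_share."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    return {
        label: count / total
        for label, count in counts.items()
        if count / total < min_share
    }
```

For a corpus dominated by formal documents, this would flag conversational text as a class to collect more of before training.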

Another vital step involves developing robust evaluation frameworks that benchmark model performance against standardized metrics. It’s not enough to rely solely on training data quality; consistent evaluation and adjustment are essential to ensure models maintain high contextual accuracy. Context-aware mechanisms, such as attention layers and contextual embeddings, can also be implemented to improve responsiveness to situational cues.
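Consistent evaluation can be as simple as comparing each new run against a stored baseline and flagging metrics that dropped. The following is a minimal sketch, assuming every metric is a score where higher is better. The metric names, the `regressions` function, and the default tolerance are illustrative assumptions.

```python
def regressions(
    baseline: dict[str, float],
    current: dict[str, float],
    tolerance: float = 0.02,
) -> dict[str, float]:
    """Return the metrics that dropped by more than tolerance relative to the baseline."""
    drops = {}
    for name, base_value in baseline.items():
        if name not in current:
            raise KeyError(f"metric missing from current run: {name}")
        drop = base_value - current[name]
        if drop > tolerance:
            drops[name] = drop
    return drops
```

Running a check like this after each retraining or data change turns evaluation into a routine gate rather than a one-off exercise.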

Practical guidelines for researchers and developers

Researchers and developers need actionable steps to identify and analyze stochastic chameleons within their models. Regular audits of model output are essential to pinpoint inconsistencies and hallucinations. By developing a checklist of criteria for analyzing outputs, developers can systematically address issues before they escalate.
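A checklist of this kind can be expressed as a set of named checks applied to every prompt and output pair. The sketch below uses three deliberately simple, hypothetical criteria. A real audit would substitute criteria suited to the application, and word overlap in particular is only a crude stand-in for relevance.

```python
from typing import Callable

# Each check takes (prompt, output) and returns True when the output passes.
Check = Callable[[str, str], bool]

# Hypothetical criteria; a real checklist would be tailored to the application.
CHECKLIST: dict[str, Check] = {
    "non_empty": lambda prompt, output: bool(output.strip()),
    "shares_a_prompt_term": lambda prompt, output: bool(
        set(prompt.lower().split()) & set(output.lower().split())
    ),
    "within_length_limit": lambda prompt, output: len(output.split()) <= 200,
}


def audit(prompt: str, output: str, checklist: dict[str, Check] = CHECKLIST) -> list[str]:
    """Return the names of the checks that the output fails."""
    return [name for name, check in checklist.items() if not check(prompt, output)]
```

Logging the failed check names for each output makes it easier to see which kinds of problems recur across audits.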

Additionally, there is a wealth of tools and resources available that can enhance model evaluation. Frameworks like Hugging Face’s Transformers and TensorFlow allow for easy comparisons and experimentation with different model configurations. Engaging with the broader AI community through forums and research groups can also amplify learning opportunities and best practices.

Exploring broader implications in AI and NLP

The phenomenon of irrelevant context in AI raises significant ethical considerations. Understanding how language models make mistakes can inform guidelines for responsible use. Furthermore, insights gained from studying stochastic chameleons can influence cognitive science and psychology, particularly in understanding the nature of human language processing and decision-making.

As language models evolve, the research into stochastic chameleons could inform future developments that enable models to better align with human cognition. Enhanced understanding could lead to innovations not just in NLP but also in how AI interacts with users across varied contexts.

Interactive tools for hands-on learning

Utilizing interactive tools can significantly enhance research in AI and NLP. Platforms like pdfFiller offer customizable document templates, which allow researchers to create well-organized documentation related to their findings. With features for editing PDFs, eSigning, and collaboration, researchers can streamline workflows and maintain consistency across their documentation.

The versatile editing capabilities enable users to manage research documents efficiently, assigning access to team members while ensuring security. This level of organization not only boosts productivity but also fosters greater collaboration among research teams, ultimately paving the way for innovative breakthroughs in the understanding of stochastic chameleons.

Best practices in document management for researchers

Effective document management is essential for success in research. Good organization ensures easy retrieval of past work and maintains consistency as projects progress. Implementing version control strategies helps track changes, particularly in collaborative environments where multiple authors are involved.

Moreover, cloud-based solutions provide researchers the flexibility to access documents anywhere. This not only enhances collaboration among remote teams but also offers extra layers of security for sensitive research data. The combination of these best practices can lead to a highly efficient, organized research methodology tailored for modern-day challenges in AI and NLP.

Featured case studies

Examining success stories can provide valuable insights about effectively addressing stochastic chameleons. Organizations that have successfully navigated challenges related to irrelevant context have often employed an iterative approach, refining their training datasets while incorporating feedback loops for continuous improvement.

Insights gathered from these implementations can inform future AI endeavors. Notably, emerging innovations focus on refining models to enhance contextual understanding without compromising their generative capabilities. A balanced approach may lead to robust systems capable of navigating complex language dynamics.

Engaging with the research community

Networking within the AI and NLP fields can significantly magnify research impact. Conferences and workshops serve as platforms for knowledge exchange, while webinars provide opportunities for ongoing education. Engaging with the research community aids in staying abreast of the latest trends and breakthroughs, which is vital in such a rapidly evolving domain.

Furthermore, collaborative projects often yield new insights and innovative solutions to common challenges, including those posed by stochastic chameleons. By participating actively in discussions and sharing findings, researchers contribute collectively toward enhanced understanding and development of more effective AI models.
