Get the free Building Classifiers With Independency Constraints


Building classifiers with independent validation: A comprehensive guide

Understanding classifiers and their importance

Classifiers are algorithms or models that sort data into categories based on input features. They play a critical role in data analysis and machine learning, enabling applications such as spam detection, image recognition, and credit scoring.

Different types of classifiers exist. Supervised classifiers, such as logistic regression and decision trees, learn from labeled examples, while unsupervised methods, such as clustering algorithms, group unlabeled data without predefined classes. Each approach has its own strengths and weaknesses, which makes choosing the right model vital.

Classifier development is incomplete without independent validation, which checks that the model's performance holds up on data it was not trained on. This step is crucial for confirming that the model will be useful in real-world scenarios.

Independent validation concepts

Independent validation is a process designed to test a classifier’s performance on an unseen dataset, separate from the data used to train the model. This method helps quantify the model's ability to generalize to new data.
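A minimal holdout sketch, assuming scikit-learn and its bundled breast cancer dataset (neither comes from the original text): a quarter of the data is set aside before training, and the model is scored once on that unseen portion. The 25% split and `random_state=42` are illustrative choices.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_breast_cancer(return_X_y=True)

# Reserve 25% of the data as an independent test set the model never sees during training.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Scaling is part of the pipeline, so it is fitted on the training data only.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)

train_acc = model.score(X_train, y_train)
test_acc = model.score(X_test, y_test)  # estimate of performance on new data
print(f"train accuracy: {train_acc:.3f}, held-out accuracy: {test_acc:.3f}")
```

A large gap between the two numbers is an early sign that the model is memorizing its training data rather than generalizing.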

It differs from cross-validation, which repeatedly splits the training data into folds, training on some folds and evaluating on the remaining one. Cross-validation is useful for estimating performance and tuning the model during development, whereas independent validation adds a further check by testing the final model on data that played no part in training or tuning.
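To make the distinction concrete, here is a sketch assuming scikit-learn and a synthetic dataset from `make_classification`: 5-fold cross-validation runs only on the training portion, and the untouched test set is scored once at the end. The tree depth and split sizes are arbitrary illustrative values.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=1000, n_features=20, n_informative=5, random_state=0)

# Hold out 20% as a test set that cross-validation never touches.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

clf = DecisionTreeClassifier(max_depth=5, random_state=0)

# 5-fold cross-validation on the training portion only.
cv_scores = cross_val_score(clf, X_train, y_train, cv=5)

# Refit on the whole training portion, then score once on the independent test set.
clf.fit(X_train, y_train)
test_score = clf.score(X_test, y_test)

print(f"CV accuracy: {cv_scores.mean():.3f} +/- {cv_scores.std():.3f}")
print(f"Independent test accuracy: {test_score:.3f}")
```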

Key metrics for evaluating classifier performance include precision, recall, F1 score, and the area under the receiver operating characteristic curve (AUC-ROC). Performance also depends on factors such as feature quality, data distribution, and algorithm choice.
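The metrics above can be computed with scikit-learn's `sklearn.metrics` functions. The labels and scores below are made-up toy values, chosen so the results can be checked by hand: three true positives, one false positive, and one false negative, and 15 of the 16 positive/negative pairs ranked correctly by the scores.

```python
from sklearn.metrics import f1_score, precision_score, recall_score, roc_auc_score

# Toy labels, hard predictions, and predicted probabilities for the positive class.
y_true = [0, 0, 1, 1, 1, 0, 1, 0]
y_pred = [0, 1, 1, 1, 0, 0, 1, 0]   # y_score thresholded at 0.5
y_score = [0.1, 0.6, 0.8, 0.7, 0.4, 0.2, 0.9, 0.3]

precision = precision_score(y_true, y_pred)  # TP / (TP + FP) = 3 / 4
recall = recall_score(y_true, y_pred)        # TP / (TP + FN) = 3 / 4
f1 = f1_score(y_true, y_pred)                # harmonic mean of precision and recall
auc = roc_auc_score(y_true, y_score)         # uses the scores, not the hard labels

# Hand-computed: precision = recall = F1 = 0.75, AUC = 15/16 = 0.9375
print(precision, recall, f1, auc)
```

Note that AUC-ROC is computed from the continuous scores, so it measures ranking quality independently of the 0.5 threshold used for `y_pred`.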

Steps to build a classifier with independent validation

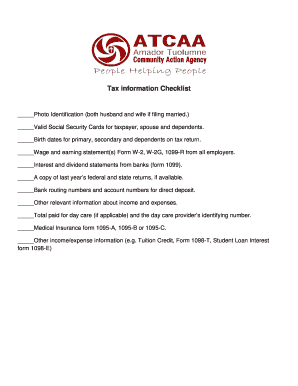

Building a classifier involves several key steps that begin with data collection. Identifying the right data sources is essential, as the quality of your data can significantly influence your results. Sources can include public datasets, organizational databases, or sensor data.

Once the data is collected, the next step is preprocessing: cleaning and preparing the data. Missing values can be handled by imputation (for example, filling in the column median) or by removing the affected rows, while outliers can be handled by clipping extreme values, robust scaling, or transformations such as a logarithm.
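A small sketch of these preprocessing steps, assuming scikit-learn's `SimpleImputer` and NumPy; the five-row array is invented for illustration. Missing values are filled with column medians, each column is clipped to its 5th to 95th percentile range, and the skewed second column is log-transformed.

```python
import numpy as np
from sklearn.impute import SimpleImputer

# Invented data: two features, two missing values, one extreme value.
X = np.array([
    [1.0, 200.0],
    [2.0, np.nan],
    [np.nan, 210.0],
    [3.0, 190.0],
    [2.5, 5000.0],   # outlier in the second column
])

# Replace missing entries with the column median, which is robust to outliers.
imputer = SimpleImputer(strategy="median")
X_imputed = imputer.fit_transform(X)

# Clip (winsorize) each column to its 5th-95th percentile range.
# In a real project, compute these statistics on the training split only.
low, high = np.percentile(X_imputed, [5, 95], axis=0)
X_clipped = np.clip(X_imputed, low, high)

# log1p compresses large values in a positive, right-skewed column.
X_clipped[:, 1] = np.log1p(X_clipped[:, 1])
print(X_clipped)
```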

Choosing the right classifier can be pivotal to a project's success. Decision trees are intuitive and easy to interpret, support vector machines (SVMs) perform well on high-dimensional data, and neural networks can capture complex, nonlinear patterns. When selecting a classifier, consider the size of the data, the complexity of the relationships in it, and the type of problem.
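One practical way to compare the candidate families is to cross-validate each on the same data. This sketch assumes scikit-learn and a synthetic dataset; the hyperparameters (tree depth 5, one hidden layer of 32 units) are illustrative, not recommendations. In a full project, a separate test set would still be held out for the final check.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=500, n_features=20, n_informative=8, random_state=1)

# SVMs and neural networks are sensitive to feature scale, so they get a scaler.
candidates = {
    "decision tree": DecisionTreeClassifier(max_depth=5, random_state=1),
    "SVM (RBF kernel)": make_pipeline(StandardScaler(), SVC()),
    "neural network": make_pipeline(
        StandardScaler(),
        MLPClassifier(hidden_layer_sizes=(32,), max_iter=1000, random_state=1),
    ),
}

results = {}
for name, model in candidates.items():
    scores = cross_val_score(model, X, y, cv=5)
    results[name] = scores.mean()
    print(f"{name:18s} mean CV accuracy = {scores.mean():.3f}")
```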

Once a classifier is chosen, it is typically implemented with a library such as Scikit-learn or TensorFlow. These libraries provide ready-made, configurable components for building and customizing classifiers efficiently.
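As an illustration of that interface, the sketch below uses scikit-learn (one of the libraries named above) with its bundled iris dataset: every scikit-learn classifier exposes `fit`, `predict`, and, for most models, `predict_proba`, and hyperparameters can be changed with `set_params`. The depth limit of 2 is an arbitrary example.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# Every scikit-learn classifier follows the same fit / predict interface.
clf = DecisionTreeClassifier(random_state=0)
clf.fit(X_train, y_train)
labels = clf.predict(X_test[:5])        # hard class labels, shape (5,)
probs = clf.predict_proba(X_test[:5])   # per-class probabilities, shape (5, 3)

# Customization: change a hyperparameter and refit (set_params returns the estimator).
clf.set_params(max_depth=2).fit(X_train, y_train)
print(clf.get_depth())
```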

Independent validation starts by reserving a portion of the dataset before any training or tuning takes place. After the classifier is trained, it is run on this reserved data, and its performance is measured with the metrics chosen in advance.
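One common arrangement, sketched here with scikit-learn on synthetic data, is a three-way split: a training set, a validation set for choosing settings, and a test set used exactly once. The 60/20/20 proportions and the candidate values of the regularization strength `C` are illustrative assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=1000, random_state=7)

# First split off 20% as the final, independent test set.
X_rest, X_test, y_rest, y_test = train_test_split(
    X, y, test_size=0.2, random_state=7, stratify=y
)
# Split the remainder 75/25 into training and validation sets (60/20/20 overall).
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=0.25, random_state=7, stratify=y_rest
)

# Use only the validation set to pick the regularization strength C.
best_C, best_val = None, -1.0
for C in [0.01, 0.1, 1.0, 10.0]:
    model = LogisticRegression(C=C, max_iter=1000).fit(X_train, y_train)
    val_acc = model.score(X_val, y_val)
    if val_acc > best_val:
        best_C, best_val = C, val_acc

# Evaluate the chosen configuration once on the untouched test set.
final = LogisticRegression(C=best_C, max_iter=1000).fit(X_train, y_train)
test_acc = final.score(X_test, y_test)
print(f"best C={best_C}, validation acc={best_val:.3f}, test acc={test_acc:.3f}")
```

Keeping the test set out of the selection loop is what makes its score an independent estimate; if it were used to pick `C`, it would become another validation set.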

Finally, interpreting validation results is essential to understand how well your classifier can predict unseen data. Insights from these results can guide further improvements in your model.
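A confusion matrix and a per-class report are usually the first things to inspect. This sketch assumes scikit-learn's `confusion_matrix` and `classification_report`, applied to a logistic regression model on the bundled breast cancer dataset.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

data = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.25, random_state=0, stratify=data.target
)
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
model.fit(X_train, y_train)
y_pred = model.predict(X_test)

# Rows are true classes, columns are predicted classes; off-diagonal cells are errors.
cm = confusion_matrix(y_test, y_pred)
print(cm)

# Per-class precision, recall, and F1 on the held-out data.
print(classification_report(y_test, y_pred, target_names=data.target_names))
```

The off-diagonal cells show which kind of mistake dominates, for example malignant cases predicted as benign, which often matters more than overall accuracy.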

Advanced techniques for improving classifier performance

Feature selection and engineering are critical steps. Feature selection keeps the features most relevant to the target; removing irrelevant features can improve accuracy and reduce overfitting. Common feature engineering techniques include feature scaling, log transformations, and interaction features (products of existing features).
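A sketch combining these ideas in one scikit-learn `Pipeline`, assuming synthetic data in which only 5 of 30 features are informative: features are scaled, the 5 best are kept by a univariate F-test (`SelectKBest` with `f_classif`), and pairwise interaction features are added. The choice of `k=5` is an assumption that matches the synthetic data, not a general rule.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# 30 features, of which only 5 carry signal.
X, y = make_classification(
    n_samples=600, n_features=30, n_informative=5, n_redundant=0, random_state=3
)

pipe = Pipeline([
    ("scale", StandardScaler()),                          # feature scaling
    ("select", SelectKBest(score_func=f_classif, k=5)),   # keep the 5 top-scoring features
    ("interact", PolynomialFeatures(degree=2, interaction_only=True, include_bias=False)),
    ("clf", LogisticRegression(max_iter=1000)),
])

# Selection is refit inside every CV fold, so held-out folds never influence it.
scores = cross_val_score(pipe, X, y, cv=5)
print(f"mean CV accuracy: {scores.mean():.3f}")

pipe.fit(X, y)
kept = pipe.named_steps["select"].get_support(indices=True)
print("selected feature indices:", kept)
```

With 5 selected features, the interaction step outputs the 5 originals plus 10 pairwise products.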

Another important aspect is hyperparameter tuning: choosing the settings that govern how the classifier learns, which are not learned from the data themselves. Grid search, which tries every combination from a predefined set, and random search, which samples combinations at random, are common methods. For a decision tree, for instance, tuning the maximum depth and the minimum number of samples per leaf can noticeably improve performance.
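The decision tree example can be written with scikit-learn's `GridSearchCV`. The data are synthetic and the candidate values below are illustrative guesses; `RandomizedSearchCV` is the random-search counterpart with a similar interface.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=800, n_features=15, n_informative=6, random_state=5)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, random_state=5)

# 5 depths x 4 leaf sizes = 20 combinations.
param_grid = {
    "max_depth": [2, 4, 6, 8, None],
    "min_samples_leaf": [1, 5, 10, 20],
}

# 5-fold cross-validated search over every combination, using training data only.
search = GridSearchCV(
    DecisionTreeClassifier(random_state=5), param_grid, cv=5, scoring="accuracy"
)
search.fit(X_train, y_train)

print("best parameters:", search.best_params_)
print(f"best CV accuracy: {search.best_score_:.3f}")
# The best model is refit on all training data and scored once on the test set.
print(f"test accuracy: {search.score(X_test, y_test):.3f}")
```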

Ensemble methods combine multiple classifiers to improve predictions. Bagging trains models on bootstrap samples of the data and averages them, which mainly reduces variance; boosting trains models sequentially, each one correcting the errors of the previous ones, which primarily targets bias. Random Forests (bagged decision trees) and Gradient Boosting Machines are popular examples and can outperform a single classifier on complex tasks.
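A quick way to see the effect is to cross-validate a single tree against both ensemble types. This sketch assumes scikit-learn's `RandomForestClassifier` and `GradientBoostingClassifier` on synthetic data; the exact scores depend on the data and settings.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_score
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=1000, n_features=20, n_informative=10, random_state=11)

models = {
    "single decision tree": DecisionTreeClassifier(random_state=11),
    # Bagging: 200 trees on bootstrap samples with random feature subsets, votes averaged.
    "random forest (bagging)": RandomForestClassifier(n_estimators=200, random_state=11),
    # Boosting: shallow trees added one at a time, each fitted to the current errors.
    "gradient boosting (boosting)": GradientBoostingClassifier(random_state=11),
}

mean_scores = {name: cross_val_score(m, X, y, cv=5).mean() for name, m in models.items()}
for name, score in mean_scores.items():
    print(f"{name:30s} {score:.3f}")
```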

Case studies and practical applications

Real-world implementations of classifiers are abundant across various industries. In healthcare, for instance, classifiers can predict diseases using patient data, potentially improving diagnosis speed and accuracy. Financial institutions utilize classifiers for credit scoring and detecting fraudulent transactions, thereby enhancing their risk assessments.

Marketing teams also use classifiers to segment consumers and personalize offers, which can increase customer engagement. Across these domains, independent validation helps reveal overfitting before a model is deployed and gives a more trustworthy estimate of its predictive performance.

Common lessons from such projects include guarding against data leakage, where information from validation or test data influences training, and recognizing that complete, representative datasets are essential for reliable classifiers.
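Data leakage is easy to reproduce. In this sketch, which assumes scikit-learn and NumPy, the features and labels are pure random noise, so no honest method can beat chance. Selecting features with all labels before cross-validation still produces an optimistic score, whereas doing the selection inside a pipeline (refit on each training fold) gives an honest one. The sizes (100 samples, 5000 features, `k=20`) are arbitrary.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(0)
# Pure noise: 100 samples, 5000 random features, random labels.
X = rng.normal(size=(100, 5000))
y = rng.integers(0, 2, size=100)

# LEAKY: features are chosen using every label, including those of future test folds.
X_leaky = SelectKBest(f_classif, k=20).fit_transform(X, y)
leaky = cross_val_score(LogisticRegression(max_iter=1000), X_leaky, y, cv=5).mean()

# CORRECT: selection is refit inside each training fold via a pipeline.
pipe = make_pipeline(SelectKBest(f_classif, k=20), LogisticRegression(max_iter=1000))
honest = cross_val_score(pipe, X, y, cv=5).mean()

print(f"leaky CV accuracy:  {leaky:.3f}")   # typically well above chance
print(f"honest CV accuracy: {honest:.3f}")  # typically close to 0.5
```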

Tools and resources for classifier development

When venturing into classifier development, using the right tools is crucial. Libraries such as Scikit-learn, Keras, and TensorFlow provide user-friendly environments for building machine learning models, while Tableau and Power BI help visualize the results.

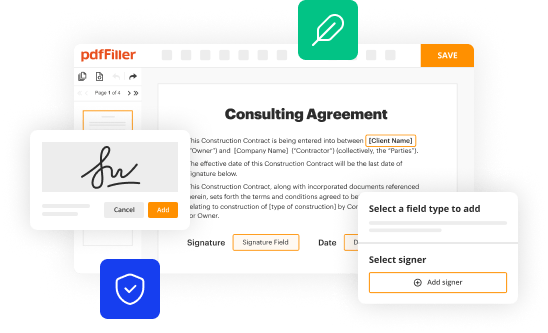

pdfFiller can also help manage documents related to data gathering: its platform lets users create, edit, and sign PDFs, so documentation keeps pace with data processing.

Online resources and communities can greatly assist in continuous learning. Engaging with forums, webinars, and tutorials can provide the latest insights and best practices in classifier development.

Challenges and limitations of classifiers

Classifier development is not without challenges, including acquiring enough high-quality data, handling imbalanced class labels (where one class is much rarer than the others), and keeping computation efficient. These obstacles can significantly limit the accuracy of the resulting models.
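For imbalanced labels, two simple safeguards in scikit-learn are a stratified split and class weighting. This sketch assumes synthetic data with roughly 5% positives and compares `class_weight=None` with `class_weight="balanced"`, reporting minority-class recall and balanced accuracy rather than plain accuracy, which can look high even when the rare class is ignored.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import balanced_accuracy_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic data with roughly 95% negatives and 5% positives.
X, y = make_classification(n_samples=2000, n_features=10, weights=[0.95, 0.05], random_state=2)

# stratify=y keeps the class ratio (nearly) identical in the training and test sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=2
)

results = {}
for weighting in [None, "balanced"]:
    # "balanced" weights each class inversely to its frequency in y_train.
    clf = LogisticRegression(class_weight=weighting, max_iter=1000).fit(X_train, y_train)
    y_pred = clf.predict(X_test)
    results[weighting] = (
        recall_score(y_test, y_pred),             # recall on the rare (positive) class
        balanced_accuracy_score(y_test, y_pred),  # mean of the per-class recalls
    )
    print(f"class_weight={weighting}: minority recall={results[weighting][0]:.3f}, "
          f"balanced accuracy={results[weighting][1]:.3f}")
```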

Independent validation has limitations as well. It needs a reasonably large dataset: a small held-out set yields a noisy performance estimate, and holding data out leaves less for training. When data are scarce, cross-validation or bootstrap confidence intervals can make the estimate more reliable, while data augmentation and regularization can help the model itself generalize.
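A percentile bootstrap shows how uncertain a small test set's accuracy is. The function below is a hypothetical helper written for illustration with NumPy only; it resamples test cases with replacement and reads off the 2.5th and 97.5th percentiles. The 40 predictions are invented.

```python
import numpy as np


def bootstrap_accuracy_ci(y_true, y_pred, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for accuracy on a fixed test set."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    rng = np.random.default_rng(seed)
    n = len(y_true)
    correct = (y_true == y_pred).astype(float)
    # Each row of idx is one resample of the n test cases, drawn with replacement.
    idx = rng.integers(0, n, size=(n_boot, n))
    boot_acc = correct[idx].mean(axis=1)
    lo, hi = np.quantile(boot_acc, [alpha / 2, 1 - alpha / 2])
    return correct.mean(), lo, hi


# Invented predictions: 40 test cases, 6 errors, so accuracy = 34/40 = 0.85.
y_true = np.array([1] * 20 + [0] * 20)
y_pred = y_true.copy()
y_pred[:6] = 0
acc, lo, hi = bootstrap_accuracy_ci(y_true, y_pred)
print(f"accuracy {acc:.3f}, 95% CI [{lo:.3f}, {hi:.3f}]")
```

With only 40 cases the interval spans roughly twenty percentage points, which is why a single small holdout score should be read with caution.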

Future trends in classifier development

Emerging trends in machine learning and classifier technology are pointing towards greater reliance on AI and automation to enhance accuracy. Techniques like automated machine learning (AutoML) aim to simplify model building by automating hyperparameter tuning and feature engineering.

Advancements in deep learning also continue to open new possibilities for classifiers, particularly in natural language processing and image recognition. Classifier research is expected to focus increasingly on interpretability and ethical AI, addressing biases and building user trust.

Summary of key takeaways and next steps

Building a classifier with independent validation involves data collection, preprocessing, selecting an appropriate model, and a robust independent validation step. Each of these elements is pivotal for creating effective and reliable classifiers.

Advanced techniques such as feature selection, hyperparameter tuning, and ensemble methods can lead to substantial improvements, and continued learning through tools and community engagement helps practitioners keep up with best practices in classifier development.

To keep advancing your classifier development skills, experiment with new techniques and engage with active communities.

For pdfFiller’s FAQs

Below is a list of the most common customer questions. If you can’t find an answer to your question, please don’t hesitate to reach out to us.

How can I manage my building classifiers with independency directly from Gmail?

How do I make edits in building classifiers with independency without leaving Chrome?

How do I edit building classifiers with independency straight from my smartphone?

What is building classifiers with independency?

Who is required to file building classifiers with independency?

How to fill out building classifiers with independency?

What is the purpose of building classifiers with independency?

What information must be reported on building classifiers with independency?

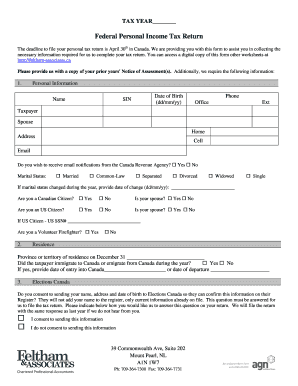

pdfFiller is an end-to-end solution for managing, creating, and editing documents and forms in the cloud. Save time and hassle by preparing your tax forms online.