Comprehensive Guide to Artificial Intelligence Systems Evaluations Form

Understanding artificial intelligence systems evaluations

Artificial intelligence systems evaluations are systematic assessments designed to appraise the performance, reliability, ethical implications, and effectiveness of AI solutions. Evaluating AI systems enables stakeholders to determine whether these systems can meet defined objectives and user needs. The significance of such evaluations has grown as AI technologies spread across diverse sectors, where the importance of safety, transparency, and fairness cannot be overstated. Key stakeholders involved in these evaluations include AI developers tasked with designing algorithms, regulators who establish standards, and end-users who interact with the technology.

AI developers: Responsible for creating and refining AI algorithms to ensure they meet technical standards.

Regulators: Oversee compliance with legal frameworks and ethical guidelines in AI implementation.

End-users: The final users of AI systems, whose experiences and feedback are critical for evaluations.

Components of an effective AI evaluations form

An effective artificial intelligence systems evaluations form is typically structured to include essential sections that guide users through the evaluation process. Each component of the form is vital for collecting meaningful data and insights about the AI system’s functionality. The overview of these sections includes General Information, Objectives of Evaluation, Evaluation Criteria, Data Collection Methods, and Stakeholder Feedback. Understanding these components allows developers and regulators to make informed decisions based on robust data.

General Information: Basic details about the AI system, its purpose, and context of use.

Objectives of Evaluation: Clear statements defining what the evaluation aims to accomplish.

Evaluation Criteria: Specific parameters against which the AI system will be assessed.

Data Collection Methods: Techniques employed to gather information, such as surveys or usage statistics.

Stakeholder Feedback: Opportunities for input from all parties involved in or affected by the AI system.
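The five sections described above can be pictured as a simple data structure. The following is only a minimal sketch: the class name `EvaluationForm`, its field names, and the sample values are hypothetical, chosen to mirror the section names rather than taken from any particular tool.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class EvaluationForm:
    """Hypothetical container with one field per section of the form."""
    general_information: dict = field(default_factory=dict)
    objectives: list = field(default_factory=list)
    evaluation_criteria: list = field(default_factory=list)
    data_collection_methods: list = field(default_factory=list)
    stakeholder_feedback: list = field(default_factory=list)


# Illustrative values only; a real form would be filled in by the evaluation team.
form = EvaluationForm(
    general_information={"system": "Example support chatbot", "context": "pilot"},
    objectives=["Check that answers meet user needs"],
    evaluation_criteria=["accuracy", "user satisfaction"],
    data_collection_methods=["user survey", "usage statistics"],
)

# asdict() turns the form into a plain dictionary, e.g. for export or review.
for section, content in asdict(form).items():
    print(section, "->", content)
```

Keeping each section as its own field makes it easy to see at a glance which parts of the form still need input.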

Developing your AI evaluations form

Creating an AI evaluations form requires a methodical approach, beginning with identifying core objectives that align with the intended use of the AI system. These objectives should stem from direct stakeholder needs and regulatory requirements. Next, select appropriate evaluation criteria, meaning the metrics or benchmarks that will guide the assessment process. Finally, develop evaluation questions that relate directly to the AI's performance or impact so that the evaluation stays targeted.

Additionally, when designing the form, it's crucial to prioritize user-friendliness. This can be achieved through the clarity of language, ensuring questions are straightforward and jargon-free. Establishing a logical flow among the questions helps respondents navigate the form smoothly. Incorporating visual aids like charts or graphs can also enhance understanding and engagement.

Identify core objectives: Determine what aspects of the AI system need evaluation.

Select evaluation criteria: Select measurable metrics that reflect the AI's performance.

Develop evaluation questions: Craft questions that align with the objectives and criteria chosen.
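One way to keep the three steps above connected is to record, for every question, which objective and which criterion it serves. The sketch below is an illustration under assumed names: the identifiers (`O1`, `C1`), the sample wording, and the helper `uncovered_objectives` are all hypothetical.

```python
# Hypothetical objectives and criteria, keyed by short identifiers.
objectives = {
    "O1": "Responses are accurate",
    "O2": "Users find the system easy to use",
}
criteria = {
    "C1": "Share of correct answers on a test set",
    "C2": "Mean satisfaction rating on a 1-5 scale",
}

# Each question points back to the objective and criterion it supports.
questions = [
    {"text": "How often did the system give a correct answer?",
     "objective": "O1", "criterion": "C1"},
]


def uncovered_objectives(objectives, questions):
    """Return the ids of objectives that no question addresses yet."""
    covered = {q["objective"] for q in questions}
    return sorted(set(objectives) - covered)


print(uncovered_objectives(objectives, questions))
```

In this example the check reports that objective `O2` still has no question attached, which is a prompt to draft one before the form is circulated.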

Best practices for filling out the AI evaluations form

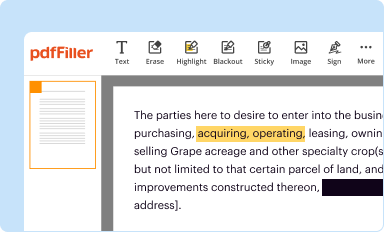

Filling out the AI evaluations form requires collecting relevant data and insights effectively. Data should be gathered through various methodologies such as user surveys, quantitative metrics, and observational studies. Collaboration among team members is essential to capture diverse perspectives and ensure a comprehensive evaluation. Using collaborative tools like pdfFiller can facilitate this process, allowing team members to contribute, edit, and provide feedback seamlessly.

Setting clear deadlines for input and feedback encourages timely contributions and keeps the project on track. Once the form is completed, a thorough review is essential for clarity and accuracy, which involves verifying all responses and identifying common pitfalls such as ambiguous questions or incomplete sections.

Utilize surveys and direct feedback to inform evaluations.

Leverage tools for editing and feedback to optimize group efforts.

Ensure all responses are clear and accurately reflect findings.
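Part of the final review can be automated. The following sketch flags questions that were left blank or answered too briefly to be useful; the function name `review_responses`, the three-word threshold, and the sample answers are assumptions made for illustration, and a human reviewer would still need to judge clarity and accuracy.

```python
def review_responses(responses, min_words=3):
    """Flag unanswered questions and answers shorter than min_words words."""
    issues = {"missing": [], "too_short": []}
    for question, answer in responses.items():
        text = (answer or "").strip()
        if not text:
            issues["missing"].append(question)
        elif len(text.split()) < min_words:
            issues["too_short"].append(question)
    return issues


# Hypothetical completed form: question -> free-text answer.
responses = {
    "What is the system used for?": "Answering customer billing questions",
    "How was accuracy measured?": "",
    "Any observed failures?": "Some",
}

print(review_responses(responses))
```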

Advanced techniques for evaluating AI systems

Evaluating AI systems requires a combination of quantitative and qualitative metrics to capture a comprehensive picture of their effectiveness. Quantitative metrics offer statistical insights into performance, while qualitative evaluations provide human experiences and feedback that contextualize those numbers. Incorporating user feedback directly into evaluations can enhance the reliability of outcomes, ensuring that AI systems align with user expectations and needs.

Transparency and fairness are paramount in any AI evaluation. This means being clear about the processes and metrics used to evaluate AI systems and ensuring that evaluations are free from bias. Looking toward the future, we can expect to see trends in AI evaluations that prioritize automation in data analysis, increasing efficiency and reducing the risk of human error.

Use a combination of both types for well-rounded evaluations.

Engage end-users to validate evaluation insights.

Maintain clear processes to mitigate bias in assessments.
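To illustrate how quantitative and qualitative inputs can sit side by side, the sketch below reports a simple accuracy figure together with a mean user rating and the raw comments. All data and names here (`outcomes`, `ratings`, `comments`, `summarize`) are hypothetical, and real evaluations would typically use richer metrics.

```python
from statistics import mean

# Hypothetical quantitative data: whether each test case was handled correctly.
outcomes = [True, True, False, True, True]

# Hypothetical qualitative data: 1-5 user ratings and free-text comments.
ratings = [4, 5, 3, 4]
comments = ["Fast but sometimes vague", "Clear answers"]


def summarize(outcomes, ratings, comments):
    """Combine a quantitative score with user feedback in one summary."""
    return {
        "accuracy": sum(outcomes) / len(outcomes),
        "mean_rating": mean(ratings),
        "comments": list(comments),
    }


summary = summarize(outcomes, ratings, comments)
print(summary)
```

Reporting the comments alongside the numbers keeps the human context visible instead of reducing the evaluation to a single score.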

Special considerations in AI evaluations

AI evaluations come with their own challenges, particularly concerning ethics and regulatory compliance. Ethical implications must be taken seriously, as AI systems can inadvertently perpetuate biases or lead to undesirable outcomes. Ensuring adherence to established regulatory standards is crucial to maintaining public trust and safeguarding against potential harms. Furthermore, evaluation forms may need customization to address specific AI applications effectively.

For example, in healthcare AI, privacy regulations dictate that patient data must be handled with the utmost care, so the evaluation form must account for these requirements. In contrast, evaluations of autonomous vehicles might focus more on safety metrics and on-road performance, while evaluations in financial services may emphasize risk management algorithms. Tailoring evaluation methods to these sector-specific demands supports a more comprehensive and accountable evaluation.

Address potential bias and fairness in AI systems.

Align evaluation processes with existing laws and guidelines.

Adapt forms to meet the unique requirements of different sectors.
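A simple starting point for the bias and fairness check mentioned above is to compare a metric across groups of users. The sketch below computes accuracy per group and the gap between the best and worst group. The records, the group labels, and the function name `accuracy_by_group` are hypothetical, and an accuracy gap is only one of many possible fairness indicators.

```python
from collections import defaultdict

# Hypothetical evaluation records: group label, model prediction, true outcome.
records = [
    {"group": "A", "predicted": 1, "actual": 1},
    {"group": "A", "predicted": 0, "actual": 0},
    {"group": "A", "predicted": 1, "actual": 1},
    {"group": "A", "predicted": 1, "actual": 0},
    {"group": "B", "predicted": 1, "actual": 1},
    {"group": "B", "predicted": 0, "actual": 1},
    {"group": "B", "predicted": 0, "actual": 0},
    {"group": "B", "predicted": 1, "actual": 0},
]


def accuracy_by_group(records):
    """Return the share of correct predictions for each group."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for record in records:
        totals[record["group"]] += 1
        if record["predicted"] == record["actual"]:
            correct[record["group"]] += 1
    return {group: correct[group] / totals[group] for group in totals}


per_group = accuracy_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, gap)
```

A large gap does not prove that a system is unfair, but it marks a place where the evaluation form should ask for a closer look.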

Evaluating performance and outcomes

After completing the AI evaluations form, tracking success metrics is essential to measure the effectiveness of the evaluation process. Success can be assessed through improved operational performance, enhanced user satisfaction, or increased compliance with regulatory standards. Making iterative improvements based on insights gleaned from evaluations allows organizations to refine their AI systems continuously while keeping risk low.

Moreover, reporting evaluation results to stakeholders is crucial for transparency and accountability. Clear communication of findings helps build trust among all involved parties and fosters a culture of collaboration. These reports should not only showcase successes but also outline areas for improvement, creating a balanced review that encourages continual progress.

Use identified metrics to assess post-evaluation effectiveness.

Implement changes to enhance system performance continuously.

Share clear findings to maintain transparency with all parties.
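Tracking success metrics often comes down to comparing the same measurements before and after changes are made. The sketch below shows one way to do that. The metric names, the values, and the `compare` helper are hypothetical and exist only to illustrate the idea.

```python
# Hypothetical measurements taken before and after acting on an evaluation.
before = {"accuracy": 0.80, "user_satisfaction": 3.5, "open_compliance_findings": 4}
after = {"accuracy": 0.85, "user_satisfaction": 4.0, "open_compliance_findings": 1}

# For some metrics a higher value is better; for others a lower one is.
higher_is_better = {
    "accuracy": True,
    "user_satisfaction": True,
    "open_compliance_findings": False,
}


def compare(before, after, higher_is_better):
    """Report the change in each metric and whether it is an improvement."""
    report = {}
    for name in before:
        change = round(after[name] - before[name], 3)
        improved = change > 0 if higher_is_better[name] else change < 0
        report[name] = {"change": change, "improved": improved}
    return report


report = compare(before, after, higher_is_better)
for name, result in report.items():
    print(name, result)
```

The same report can feed directly into stakeholder communication, since it shows both where the system improved and where it did not.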

Leveraging technology for AI evaluation forms

Utilizing available tools and applications can significantly enhance the efficiency and effectiveness of creating AI evaluations forms. Platforms like pdfFiller offer robust features for crafting, managing, and editing evaluation forms. By leveraging such software, users can easily incorporate feedback mechanisms, enabling continuous input throughout the evaluation process. Additionally, the cloud-based nature of these platforms fosters greater collaboration, allowing teams to work together in real time, regardless of geographical barriers.

Collaborating through these platforms brings several advantages, such as streamlined version control and improved accessibility of documents. Features like automated reminders for document submissions help participants stay engaged, reducing the risk of lapses in feedback and promoting timely submissions.

Explore platforms like pdfFiller that facilitate form management.

Enable continuous feedback throughout the evaluation process.

Use cloud tools for real-time collaboration and version control.

Case studies of successful AI evaluations

Real-world applications provide invaluable insights into the effectiveness of AI evaluations. For instance, a leading AI organization in healthcare implemented an evaluations form that focused on patient data security, yielding significant improvements in compliance with health regulations. Lessons learned from these evaluations emphasized the importance of stakeholder input and robust data collection techniques, reinforcing the need for collaboration among developers, healthcare professionals, and regulators.

In the financial services sector, another case study revealed how evaluations geared towards algorithmic transparency resulted in enhanced client trust and product performance. This shift not only improved operational metrics but also fostered an environment of accountability, driving compliance with evolving government standards. Analyzing pre- and post-evaluation outcomes allows organizations to recognize successful strategies and identify areas needing attention, further refining their processes.

Investigate successful implementations of AI evaluations.

Capture insights that can inform future evaluations.

Assess the effectiveness of implemented changes post-evaluation.

Conclusion and forward-looking insights

The future of artificial intelligence systems evaluations holds great promise, particularly as technology continues to evolve. Predictions suggest a growing emphasis on continuous improvement in evaluation practices, and organizations that proactively adapt their systems and evaluations are likely to have a competitive edge in an increasingly AI-driven landscape. By integrating advanced techniques, such as AI-assisted evaluations, organizations can streamline processes, enhance accuracy, and optimize performance.

As they develop more sophisticated evaluations forms, organizations will need to remain vigilant regarding ethical standards and regulatory compliance to ensure responsible AI deployment. The path forward in AI evaluations involves not only celebrating successes but also actively addressing the challenges that arise, making room for more thoughtful and impactful technologies.