Model ensembling as a tool to form interpretable multi-omic predictors of cancer pha...

Get, Create, Make and Sign model ensembling as a

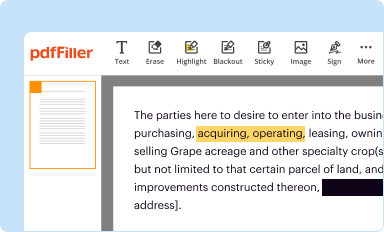

How to edit model ensembling as a online

Uncompromising security for your PDF editing and eSignature needs

How to fill out model ensembling as a

How to fill out model ensembling as a

Who needs model ensembling as a?

Model ensembling as a form

Understanding model ensembling

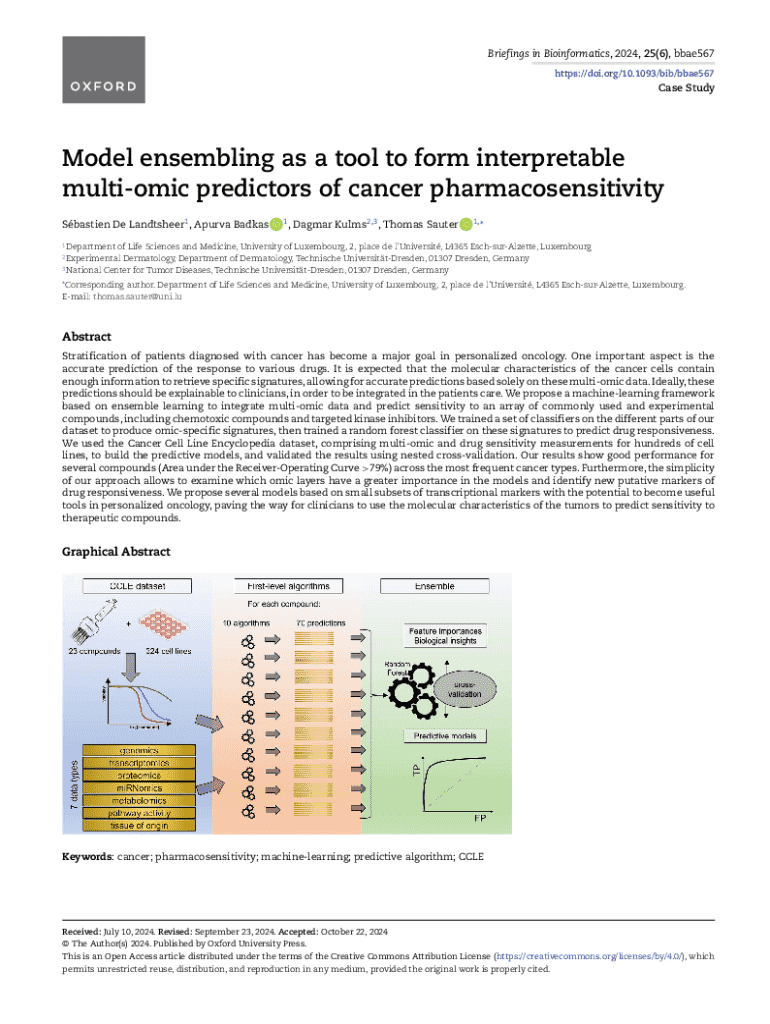

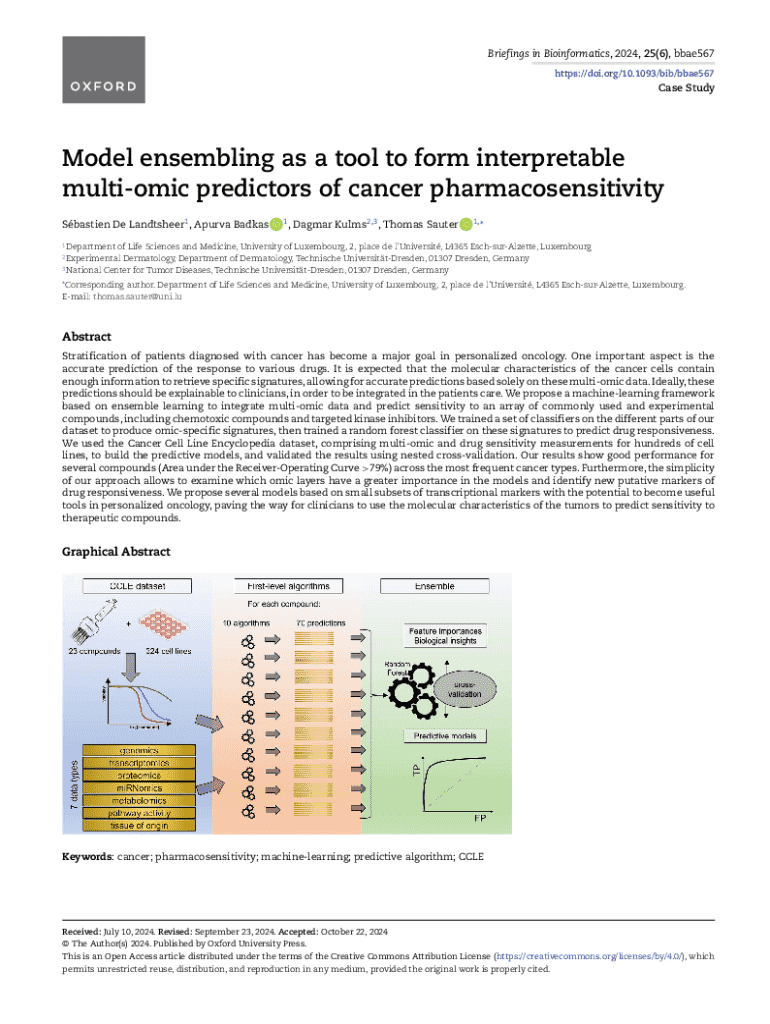

Model ensembling is a machine learning technique that combines the predictions of multiple models to improve predictive accuracy. Because different algorithms make different kinds of errors, a well-built ensemble can capture complex patterns in the data and often outperforms any of its individual members. Its main value lies in reducing variance (as bagging does) or bias (as boosting does), which makes it a pivotal approach for complex datasets.

This method is particularly useful in applications where high accuracy is required. In healthcare, for instance, ensemble models can improve diagnostic predictions by combining the outputs of several different algorithms. Beyond raw accuracy, ensembles tend to be more stable than single models, because no one model's errors dominate the final output, and that reliability is crucial for decision-making across many industries.

Types of ensemble models

Ensemble methods are most often grouped into two families: bagging and boosting. Bagging (bootstrap aggregating) aims to reduce variance. It trains multiple base models independently, each on a bootstrap sample of the training data (rows drawn at random with replacement), and combines their predictions by averaging (regression) or majority vote (classification). A well-known example is Random Forest, which bags decision trees and additionally considers only a random subset of features at each split, making the individual trees less correlated with one another.
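A minimal sketch of bagging, assuming scikit-learn and NumPy are installed: each tree is fit on its own bootstrap sample and the trees' predictions are combined by majority vote. The helper names `fit_bagged_trees` and `predict_majority` are hypothetical; scikit-learn's `BaggingClassifier` and `RandomForestClassifier` package the same idea (the latter also samples features at each split).

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def fit_bagged_trees(X, y, n_estimators=25, seed=0):
    """Fit n_estimators trees, each on its own bootstrap sample (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    n = len(X)
    trees = []
    for _ in range(n_estimators):
        idx = rng.integers(0, n, size=n)  # n row indices drawn with replacement
        tree = DecisionTreeClassifier(random_state=int(rng.integers(1_000_000)))
        tree.fit(X[idx], y[idx])
        trees.append(tree)
    return trees


def predict_majority(trees, X):
    """Majority vote over the trees' predictions (assumes binary 0/1 labels)."""
    votes = np.stack([tree.predict(X) for tree in trees])  # shape (n_trees, n_samples)
    return (votes.mean(axis=0) >= 0.5).astype(int)  # ties (even tree counts) go to class 1


X, y = make_classification(n_samples=500, n_features=20, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

trees = fit_bagged_trees(X_train, y_train)
single = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
print("single tree accuracy:", single.score(X_test, y_test))
print("bagged accuracy:     ", np.mean(predict_majority(trees, X_test) == y_test))
```

Comparing the two printed scores on a held-out split is a quick way to see whether averaging over bootstrap samples is actually reducing the single tree's variance on a given dataset.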

Boosting, by contrast, mainly reduces bias by training models sequentially, with each new model concentrating on the errors of the ensemble built so far. AdaBoost does this by increasing the weights of misclassified training points, while Gradient Boosting fits each new model to the residuals (more generally, the negative gradient of the loss) of the current ensemble. Beyond these two families, stacking and blending combine the predictions of several, often different, models: stacking trains a meta-learner on out-of-fold predictions of the base models, whereas blending typically fits the combiner (a meta-learner or a set of weights) on a separate holdout set.
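To make the sequential idea concrete, here is a minimal gradient-boosting sketch for regression with squared-error loss, assuming scikit-learn and NumPy. With this loss the negative gradient is simply the residual, so each shallow tree is fit to what the ensemble currently gets wrong and its output is added with a learning rate. The function names are hypothetical; scikit-learn's `GradientBoostingRegressor` is the production version of this loop.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_gradient_boosting(X, y, n_rounds=100, learning_rate=0.1, max_depth=2):
    """Squared-error gradient boosting with shallow trees (hypothetical helper)."""
    init = float(np.mean(y))  # the constant prediction that minimizes squared error
    pred = np.full(len(y), init)
    trees = []
    for _ in range(n_rounds):
        residual = y - pred  # negative gradient of 0.5 * (y - pred) ** 2
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=0)
        tree.fit(X, residual)  # the new tree models the ensemble's current errors
        pred += learning_rate * tree.predict(X)  # shrunken step toward the targets
        trees.append(tree)
    return init, learning_rate, trees


def predict_gradient_boosting(model, X):
    init, learning_rate, trees = model
    return init + learning_rate * sum(tree.predict(X) for tree in trees)


rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(300, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=300)

model = fit_gradient_boosting(X, y)
mse = np.mean((predict_gradient_boosting(model, X) - y) ** 2)
print(f"variance of y: {np.var(y):.4f}, training MSE after boosting: {mse:.4f}")
```

The small learning rate is a deliberate design choice: each tree corrects only part of the remaining error, which usually generalizes better than letting one tree absorb it all, at the cost of needing more rounds.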

When to use ensemble models

Ensemble techniques are especially advantageous when a single model suffers from high variance, for example when it tends to overfit the training data. Complex prediction problems involving non-linear relationships are also prime candidates for ensemble modeling. By integrating multiple algorithms, the final output can account for intricate data patterns that a single model may overlook.

In finance, for example, an ensemble might be used to predict stock performance by combining models that draw on different factors, such as historical price movements and macroeconomic indicators, and such ensembles can outperform any single model. In healthcare, combining different predictive models that analyze multiple data sources can improve patient diagnosis and treatment planning.

The mechanics of ensemble models

Understanding how ensemble learning works is essential for using it effectively. At its core, ensemble learning combines the predictions of multiple models into a single, more accurate and stable prediction. The combination can be as simple as voting or averaging (of predicted values, or of predicted class probabilities in so-called soft voting), or more involved, such as rank averaging or training a meta-learner. Voting can be majority (hard) voting, where each model gets one vote, or weighted voting, where each model's vote counts in proportion to its performance.
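The voting rules described above fit in a few lines of plain Python. This is a sketch: `majority_vote`, `weighted_vote`, and `average_probabilities` are hypothetical helper names, and the weights stand in for something like each model's validation accuracy.

```python
from collections import Counter, defaultdict


def majority_vote(predictions):
    """Hard voting: each model casts one vote; ties go to the label seen first."""
    return Counter(predictions).most_common(1)[0][0]


def weighted_vote(predictions, weights):
    """Weighted voting: each model's vote counts in proportion to its weight."""
    totals = defaultdict(float)
    for label, weight in zip(predictions, weights):
        totals[label] += weight
    return max(totals, key=totals.get)


def average_probabilities(prob_rows):
    """Soft voting: average each class's probability across models."""
    n_models = len(prob_rows)
    return [sum(column) / n_models for column in zip(*prob_rows)]


# Three models each predict a label for the same sample.
preds = ["spam", "ham", "ham"]
print(majority_vote(preds))                   # ham (two votes to one)
print(weighted_vote(preds, [0.9, 0.3, 0.4]))  # spam (0.9 outweighs 0.3 + 0.4 = 0.7)
print(average_probabilities([[0.7, 0.3], [0.4, 0.6], [0.1, 0.9]]))
```

The two hard-voting rules disagree here, which illustrates why weights should come from an honest estimate of model quality: one strong model can override two weak ones.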

Frameworks such as scikit-learn and TensorFlow provide tools for implementing ensemble models. scikit-learn in particular offers a straightforward interface for creating bagging and boosting ensembles, which makes it easy to experiment with different base learners. Building a Random Forest in scikit-learn takes four steps: select the dataset, initialize the model, fit it to the training data, and evaluate its performance on held-out test data.
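The four Random Forest steps map onto scikit-learn's `RandomForestClassifier` roughly as follows. The bundled breast-cancer dataset and the hyperparameter values are illustrative choices, not recommendations.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# 1. Select a dataset and hold out a stratified test split.
X, y = load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# 2. Initialize the ensemble: 200 trees, each split considering sqrt(n_features) features.
forest = RandomForestClassifier(n_estimators=200, max_features="sqrt", random_state=42)

# 3. Fit it to the training data.
forest.fit(X_train, y_train)

# 4. Evaluate on the held-out test data.
accuracy = accuracy_score(y_test, forest.predict(X_test))
print(f"test accuracy: {accuracy:.3f}")
```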

Best practices for building ensemble models

Choosing diverse base learners is crucial for building effective ensemble models. Drawing on different algorithms, model complexities, and data representations can significantly improve performance, because diverse models are less likely to make the same (correlated) errors. Combining, for example, decision trees, linear models, and support vector machines lets the ensemble capture different aspects of the underlying data.
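Why diversity matters can be seen from a standard variance identity. Assume, as a simplification, that each of the $M$ base models' predictions $f_m(x)$ at a given input has variance $\sigma^2$ and every pair has correlation $\rho$. Then the variance of their average is:

```latex
\operatorname{Var}\!\left(\frac{1}{M}\sum_{m=1}^{M} f_m(x)\right)
  = \frac{1}{M^2}\left[M\sigma^2 + M(M-1)\rho\sigma^2\right]
  = \rho\sigma^2 + \frac{1-\rho}{M}\,\sigma^2
```

As $M$ grows the second term vanishes but the first does not. With $\rho = 1$ (identical models) averaging gives no reduction at all, while with $\rho = 0.2$ and $\sigma^2 = 1$, ten models reach $0.2 + 0.8/10 = 0.28$. Lowering $\rho$ is exactly what diverse base learners, and the random feature sampling in Random Forest, aim to do.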

Data quality also plays a vital role in the success of ensemble learning. Handling missing values, selecting relevant features, and standardizing features for scale-sensitive learners such as linear models and SVMs (tree-based models are largely insensitive to feature scale) can substantially improve the final ensemble's predictions. Guarding against overfitting, for example through regularization and cross-validation, is likewise necessary for the ensemble to perform well on unseen data.
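A sketch of wiring these data-quality steps into a diverse soft-voting ensemble with scikit-learn. Placing `SimpleImputer` and `StandardScaler` inside each `Pipeline` means `cross_val_score` refits them on each training fold only, so validation-fold statistics never leak into preprocessing. The missing values are injected synthetically for illustration, and the choice of learners is an assumption.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import VotingClassifier
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=400, n_features=10, random_state=0)
rng = np.random.default_rng(0)
X[rng.random(X.shape) < 0.05] = np.nan  # knock out ~5% of entries to simulate missing data

# Scale-sensitive learners get imputation + standardization; the tree only needs imputation.
ensemble = VotingClassifier(
    estimators=[
        ("logreg", make_pipeline(SimpleImputer(strategy="median"), StandardScaler(),
                                 LogisticRegression(max_iter=1000))),
        ("svm", make_pipeline(SimpleImputer(strategy="median"), StandardScaler(),
                              SVC(probability=True, random_state=0))),
        ("tree", make_pipeline(SimpleImputer(strategy="median"),
                               DecisionTreeClassifier(max_depth=5, random_state=0))),
    ],
    voting="soft",  # average predicted class probabilities
)

scores = cross_val_score(ensemble, X, y, cv=5)
print(f"5-fold accuracy: {scores.mean():.3f} +/- {scores.std():.3f}")
```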

Potential pitfalls and considerations

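Common mistakes with ensemble techniques often stem from misjudging how correlated the models are. Models that are too similar tend to make the same errors, so adding them yields diminishing returns. Adding models can also raise the risk of overfitting in some settings, notably boosting, where each additional round fits the training data more closely, and stacking, where a meta-learner trained on in-sample predictions learns to trust overfit base models. (Adding more trees to a random forest, by contrast, generally does not by itself cause overfitting.) Balancing the ensemble's complexity against its predictive gain is essential.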
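One way to check whether added models are still helping is to score the ensemble on held-out data after every stage. scikit-learn's `GradientBoostingClassifier.staged_predict` yields predictions after each boosting round, so the held-out accuracy curve can be inspected directly. The dataset (with about 10% label noise via `flip_y`) and the settings are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=600, n_features=20, flip_y=0.1, random_state=1)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.3, random_state=1)

gb = GradientBoostingClassifier(n_estimators=300, learning_rate=0.2, max_depth=3, random_state=1)
gb.fit(X_train, y_train)

# Held-out accuracy after 1, 2, ..., 300 boosting rounds.
val_acc = np.array([np.mean(pred == y_val) for pred in gb.staged_predict(X_val)])
train_acc = gb.score(X_train, y_train)
best_rounds = int(np.argmax(val_acc)) + 1

print(f"train accuracy with all {gb.n_estimators} rounds: {train_acc:.3f}")
print(f"best held-out accuracy {val_acc.max():.3f} at {best_rounds} rounds; final {val_acc[-1]:.3f}")
```

If the held-out curve peaks well before the last round while training accuracy keeps climbing, the later models are fitting noise. `GradientBoostingClassifier` also accepts `n_iter_no_change` and `validation_fraction` to stop early automatically.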

Evaluating an ensemble requires appropriate performance metrics. For classification, accuracy, precision, recall, and F1 score each capture a different aspect of performance; precision and recall matter most when classes are imbalanced or when different errors have different costs. Cross-validation gives a more reliable estimate than a single train/test split, and performing all preprocessing inside each fold (for example with a pipeline) keeps information from the validation data from leaking into training.
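A sketch of reporting all four metrics with cross-validation, assuming scikit-learn: `cross_validate` accepts a list of scorer names and returns one array of fold scores per metric. The dataset and the random forest are illustrative; note that in this dataset label 1 means "benign", so precision and recall here refer to that class.

```python
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_validate

X, y = load_breast_cancer(return_X_y=True)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
metrics = ["accuracy", "precision", "recall", "f1"]

results = cross_validate(
    RandomForestClassifier(n_estimators=200, random_state=0),
    X, y, cv=cv, scoring=metrics,
)
for metric in metrics:
    fold_scores = results[f"test_{metric}"]  # one score per fold
    print(f"{metric:>9}: {fold_scores.mean():.3f} +/- {fold_scores.std():.3f}")
```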

Advanced topics in model ensembling

The landscape of model ensembling is continually evolving. Recent trends include automated ensembling, often built into AutoML systems, which selects and combines models automatically, typically according to their performance on validation data. This can improve predictive performance while removing much of the manual work of designing the ensemble.
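One technique used for automated ensembling is greedy forward ensemble selection, described by Caruana and colleagues and used in AutoML tools such as auto-sklearn. The sketch below is a simplified version under stated assumptions: binary labels, validation accuracy as the objective, and selection with replacement, so a model picked twice gets twice the weight. `greedy_ensemble_selection` is a hypothetical helper name.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.tree import DecisionTreeClassifier


def greedy_ensemble_selection(val_probs, y_val, n_iterations=10):
    """Greedy forward selection with replacement over a library of fitted models.

    val_probs maps model name -> predicted P(class 1) on a validation set.
    Returns model name -> number of times picked (its integer weight in the average).
    """
    chosen = []
    running_sum = np.zeros(len(y_val))
    for _ in range(n_iterations):
        best_name, best_acc = None, -1.0
        for name, probs in val_probs.items():
            # Accuracy of the averaged ensemble if this model were added once more.
            candidate = (running_sum + probs) / (len(chosen) + 1)
            acc = np.mean((candidate >= 0.5).astype(int) == y_val)
            if acc > best_acc:
                best_name, best_acc = name, acc
        chosen.append(best_name)
        running_sum += val_probs[best_name]
    return {name: chosen.count(name) for name in val_probs if name in chosen}


X, y = make_classification(n_samples=600, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(X, y, test_size=0.3, random_state=0)
library = {
    "logreg": LogisticRegression(max_iter=1000),
    "tree": DecisionTreeClassifier(max_depth=4, random_state=0),
    "naive_bayes": GaussianNB(),
}
val_probs = {
    name: model.fit(X_train, y_train).predict_proba(X_val)[:, 1]
    for name, model in library.items()
}
print(greedy_ensemble_selection(val_probs, y_val))
```

Because the greedy search optimizes directly against the validation set, final performance should be reported on separate data.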

Deep learning is also increasingly combined with ensemble methods. Researchers are exploring how neural networks can be ensembled effectively, both with one another (for example, copies of a network trained from different random initializations) and with traditional machine learning methods. Future research and tool development will likely extend and refine these techniques further.
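A minimal sketch of ensembling neural networks, using scikit-learn's `MLPClassifier` as a small stand-in for a deep network (an assumption made so the example runs without a deep learning framework): several copies that differ only in their random seed are trained and their predicted probabilities are averaged. The architecture, seeds, and dataset are illustrative.

```python
import numpy as np
from sklearn.datasets import make_moons
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier

X, y = make_moons(n_samples=500, noise=0.3, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Same architecture, different random initialization (and data shuffling) per member.
members = [
    MLPClassifier(hidden_layer_sizes=(32, 32), max_iter=2000, random_state=seed).fit(X_train, y_train)
    for seed in range(5)
]

# Average predicted class probabilities across members, then take the most likely class.
avg_proba = np.mean([m.predict_proba(X_test) for m in members], axis=0)
ensemble_pred = avg_proba.argmax(axis=1)  # classes_ is [0, 1] here, so index == label

for seed, m in enumerate(members):
    print(f"member {seed}: {m.score(X_test, y_test):.3f}")
print(f"ensemble: {np.mean(ensemble_pred == y_test):.3f}")
```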

Interactive tools for implementing ensemble models

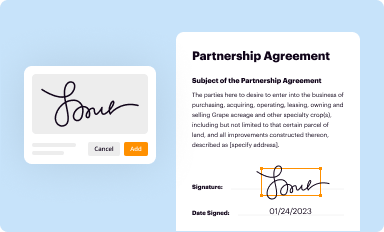

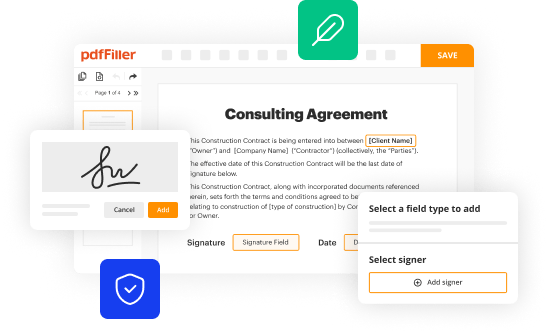

Interactive tools can also simplify the work surrounding ensemble models. Platforms like pdfFiller let users create fillable forms for data collection, supporting data management and collaboration among team members. This helps the model development process and keeps model results organized for future reference.

With pdfFiller's cloud-based document management features, teams can collaborate on ensemble modeling projects, share insights, and iterate on their models efficiently. Such tools support the documentation side of the workflow, from the initial data collection phase through model deployment and validation.

Managing your modeling documentation

Effective documentation management is critical in machine learning projects. Structuring project documentation enables clear tracking of methodologies, predictions, and outcomes, allowing for easier audits and evaluations of ensemble models. Recording details such as model versions, hyperparameter configurations, and evaluation metrics helps to maintain an organized and manageable project history.
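A lightweight way to capture these details is a machine-readable record kept next to each trained model. The sketch below, assuming scikit-learn, collects library versions, the model's hyperparameters from `get_params()`, and cross-validated scores into JSON; the record fields, model name, and version string are hypothetical, not a standard schema.

```python
import json
import platform
from datetime import datetime, timezone

import sklearn
from sklearn.datasets import load_breast_cancer
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

X, y = load_breast_cancer(return_X_y=True)
model = RandomForestClassifier(n_estimators=100, random_state=0)
scores = cross_val_score(model, X, y, cv=5, scoring="f1")

record = {
    "model_name": "rf_breast_cancer",  # hypothetical identifier
    "model_version": "1.0.0",  # hypothetical versioning scheme
    "created_utc": datetime.now(timezone.utc).isoformat(),
    "python_version": platform.python_version(),
    "sklearn_version": sklearn.__version__,
    "estimator": type(model).__name__,
    "hyperparameters": model.get_params(),
    "cv_f1_per_fold": [round(float(s), 4) for s in scores],
    "cv_f1_mean": round(float(scores.mean()), 4),
}

# In a project, this JSON would be saved next to the model artifact and kept under version control.
serialized = json.dumps(record, indent=2)
print(serialized)
```

For ensembles built from other estimators (for example a `VotingClassifier`), `get_params()` returns nested estimator objects, so those would need converting to strings before serializing.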

Using tools like pdfFiller to version and archive project documentation ensures that all team members can access the latest documents and the records of each model iteration. This supports consistent communication within teams and maintains a historical record of the project's development, fostering continuous learning and innovation.

Engaging with the community

Active engagement with the machine learning community can greatly deepen one's understanding of ensemble models. Dedicated forums, GitHub repositories, and collaborative websites provide spaces for sharing insights and discussing effective strategies in ensemble modeling. Participating in community challenges and collaborating on projects can significantly expand one’s knowledge and practical skills.

Moreover, engaging with peers fosters the exchange of novel ideas and techniques, which can lead to innovative approaches to ensemble modeling. Leveraging collective wisdom helps bridge gaps in understanding complex concepts while facilitating the sharing of resources and complementary skills.
