
Discrimination by algorithm: Understanding and Mitigating Bias in AI Systems

Understanding algorithmic discrimination

Algorithmic discrimination occurs when automated systems produce biased outcomes based on race, gender, or other demographic factors. These systems, fundamental to many decision-making processes, utilize data-driven algorithms to make predictions that can affect individuals' lives, from job applications to loan approvals. A stark example is seen in hiring algorithms that favor certain demographics over others, often unintentionally reflecting societal biases.

Such discrimination isn't limited to job applications; it's prevalent across sectors. For instance, healthcare algorithms can misclassify the severity of illness based on the racial background of patients, leading to unequal treatment. In the financial sector, credit scoring algorithms may reinforce historical inequalities by denying loans to applicants based on predictive models that utilize biased data.

The mechanisms behind algorithmic discrimination

Understanding how algorithmic discrimination arises requires a look at the underlying data and at design flaws. First, biased data shapes algorithmic outcomes: historical prejudices recorded in a dataset can be carried forward into new decisions. For example, an algorithm trained on data that reflects a history of racial discrimination may learn to replicate those patterns, even though no one intended it to.

Design flaws also contribute significantly to outcomes. Development teams that lack diversity may unintentionally embed their own biases into algorithms. Feedback loops can make matters worse: when a biased algorithm consistently produces poor outcomes for certain demographic groups, those outcomes become part of the data the system learns from next, so the disadvantage is reinforced rather than corrected.
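The feedback loop described above can be illustrated with a minimal sketch. It is a toy model, not a description of any real system: the function name, the 60/40 starting record, and the allocation rule (opportunities are handed out in proportion to each group's recorded successes) are all assumptions made for illustration. Both groups are given the same true success rate, yet the initial skew never corrects itself, because new evidence is only collected where opportunities were already allocated.

```python
def simulate_feedback_loop(initial_counts, true_rate, budget=100, rounds=10):
    """Toy model of a self-confirming feedback loop (illustrative assumption).

    initial_counts: recorded past successes per group (the biased history).
    true_rate: the real success rate, identical for every group.
    budget: opportunities handed out per round.
    Returns the share of opportunities each group received in every round.
    """
    counts = dict(initial_counts)
    shares = []
    for _ in range(rounds):
        total = sum(counts.values())
        # Allocation follows the historical record, not the true rate.
        share = {group: counts[group] / total for group in counts}
        shares.append(share)
        for group in counts:
            # Successes can only be observed where opportunities were granted.
            counts[group] += budget * share[group] * true_rate
    return shares


# Hypothetical starting point: group A has 60 recorded successes, group B has 40.
history = simulate_feedback_loop({"A": 60, "B": 40}, true_rate=0.5)
first, last = history[0], history[-1]
print(f"Share for group B: round 1 = {first['B']:.2f}, round 10 = {last['B']:.2f}")
```

In this sketch group B keeps receiving the same reduced share in every round even though it performs exactly as well as group A. Real systems can behave differently, and some allocation rules amplify the gap instead of merely preserving it.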

Identifying algorithmic discrimination

Detecting algorithmic discrimination involves comparing results across demographic groups and looking for patterns. Some algorithms disproportionately affect marginalized communities. For instance, a job recruitment tool might show a lower success rate for applicants from a specific background. Documented case studies describe instances where algorithms failed to treat people equitably, underscoring the need for vigilance.
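One simple way to look for such patterns is to compare selection rates between groups. The sketch below is a minimal example using only the Python standard library. The data is hypothetical, the function names are invented for illustration, and the 0.8 cutoff is an assumption borrowed from the "four-fifths" rule of thumb used in US employment guidance, which the article itself does not mention.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    records: iterable of (group, selected) pairs, where selected is True
    when the applicant received the favorable outcome.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screening results: group label and whether the applicant advanced.
records = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)
rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed threshold, see the four-fifths rule of thumb
print(rates, round(ratio, 2), flagged)
```

A low ratio does not prove discrimination on its own, but it marks a result that deserves a closer look.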

To identify algorithmic discrimination effectively, organizations can utilize statistical testing methods to uncover biases. Tools such as FairTest and AIF360 help assess algorithms for fairness by analyzing datasets against various demographic criteria. Regular audits using these methodologies can reveal lapses in fairness and enable corrective action.
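As a rough illustration of statistical testing, the sketch below runs a two-proportion z-test on favorable-outcome rates for two groups. It uses only the Python standard library and is not the API of FairTest or AIF360; the function name and the sample numbers are assumptions chosen for the example.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two proportions.

    Returns (z, p_value). A small p-value suggests the gap between the
    two groups' rates is unlikely to be due to chance alone.
    """
    p_a = success_a / n_a
    p_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # every outcome is identical, so nothing to test
    z = (p_a - p_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical audit sample: 50 of 100 approvals in group A, 30 of 100 in group B.
z, p_value = two_proportion_z_test(50, 100, 30, 100)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

The test only indicates whether a difference is statistically detectable. Whether that difference is unjustified still requires looking at the context, such as which legitimate factors differ between the groups.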

Legal framework surrounding algorithmic discrimination

Several laws currently touch on algorithmic fairness, but enforcement remains challenging. In Europe, the General Data Protection Regulation (GDPR) emphasizes data protection and user rights, and its Article 22 restricts decisions based solely on automated processing, yet it does not set out a dedicated standard for algorithmic discrimination. In the United States, the Equal Credit Opportunity Act prohibits discrimination in credit decisions but struggles to keep pace with technological advances in automated decision-making.

Advocacy groups play a crucial role in pushing for algorithmic fairness. Organizations like the Electronic Frontier Foundation (EFF) and the Algorithmic Justice League are raising awareness about biased algorithms, engaging in discourse around ethical design, and promoting policy changes. Prominent cases, such as the lawsuits against facial recognition technologies, are pushing legal discussions around accountability in algorithm development.

Strategies for mitigating algorithmic discrimination

Mitigating algorithmic discrimination requires a multi-faceted approach that starts with ethical algorithm design. Developers and companies are encouraged to adopt practices that emphasize inclusivity and fairness throughout the development cycle. This includes building diverse teams, which brings in varied perspectives and reduces the risk of embedding biases into algorithms.

Regular audits of algorithms are vital to maintaining fairness. Setting benchmarks for algorithmic performance based on equitable outcomes helps organizations identify discrepancies. Tools like Google's What-If Tool and IBM's AI Fairness 360 provide frameworks for ongoing evaluation. Moreover, advocating for transparent algorithms empowers users, enabling them to understand and challenge decisions made by these systems.
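A recurring audit against a benchmark could look roughly like the sketch below. It checks one possible benchmark, the gap in true positive rates between groups (how often genuinely qualified people receive a favorable prediction). The metric choice, the function names, the 0.1 tolerance, and the sample data are all assumptions for illustration; this is not the interface of the What-If Tool or AI Fairness 360.

```python
def true_positive_rates(records):
    """Share of genuinely qualified people who got a favorable prediction.

    records: iterable of (group, actual, predicted) with boolean labels.
    """
    qualified = {}
    hits = {}
    for group, actual, predicted in records:
        if actual:
            qualified[group] = qualified.get(group, 0) + 1
            hits[group] = hits.get(group, 0) + int(predicted)
    return {group: hits[group] / qualified[group] for group in qualified}


def audit(records, max_gap=0.1):
    """Compare the largest true-positive-rate gap against a tolerance."""
    rates = true_positive_rates(records)
    if not rates:
        # No qualified cases in the sample, so the benchmark cannot be checked.
        return {"rates": {}, "gap": 0.0, "passed": True}
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "passed": gap <= max_gap}


# Hypothetical audit sample: 40 qualified applicants per group.
records = (
    [("A", True, True)] * 36 + [("A", True, False)] * 4
    + [("B", True, True)] * 28 + [("B", True, False)] * 12
)
report = audit(records)
print(report)
```

Running the same check on every model release, with the tolerance agreed in advance, turns the benchmark into something that can be tracked over time.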

Engaging with algorithmic discrimination as a user

Individuals play a critical role in identifying and addressing bias in algorithmic systems. Steps include observing and documenting any discriminatory outcomes experienced, such as being unfairly denied credit or job opportunities. Providing feedback to companies when encountering bias fosters accountability and can ignite changes within organizations to prioritize fairness.

Navigating the digital landscape requires users to know their rights regarding data and algorithms. Using digital services safely involves recognizing the biases that may affect personal experiences with technology. By engaging in critical dialogue about algorithmic decisions, users can advocate for fairer practices.

The future of algorithms: Hopes and challenges

The future promises advances that could help combat algorithmic discrimination. Emerging practices in artificial intelligence development emphasize fairness and transparency, incorporating ethical principles from the outset. New technologies, such as explainable AI, have the potential to make systems more transparent and accountable, fostering greater trust in algorithmic systems.

However, unchecked algorithmic discrimination has serious societal consequences, including deepening inequality and limiting access to essential services. Engaging the public in conversations about algorithmic policies is crucial to ensure that system designs reflect societal values and protect against systemic biases.

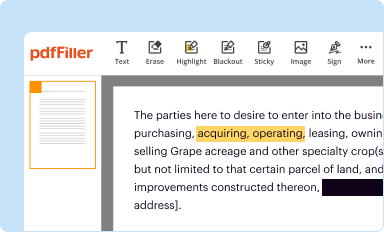

Interactive tools and resources

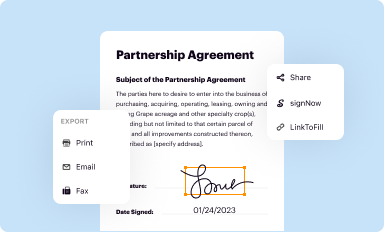

Individuals can leverage tools like pdfFiller for document management related to algorithmic discrimination. With features that allow users to create, edit, and manage essential documentation, it makes engaging in these conversations easier. Tutorials on pdfFiller provide insights into how to efficiently utilize the platform, ensuring that users can effectively contribute to discussions around fairness.

Accessing forms for reporting and legal action is also streamlined through pdfFiller. The platform offers templates for grievances and guides on how to fill them out correctly. This ease of access encourages individuals to take action when they encounter discrimination, providing necessary resources for accountability.

Case studies and insights

Exploring success stories where organizations have effectively mitigated algorithmic discrimination reveals valuable lessons for other entities. For instance, companies employing diverse development teams have demonstrated significant improvements in algorithmic fairness. Through collaboration and thorough audits, these organizations constructed systems that account for biases and generate equitable outcomes.

Ongoing research continues to provide insights into algorithmic fairness, with studies revealing new strategies for improving inclusivity in AI systems. Academic institutions are increasingly focused on themes of ethics in technology, which indicates a progressive shift towards more responsible practices within the industry. These findings could lead to better frameworks that help organizations navigate the complex dynamics of algorithmic decision-making.


