Benchmarking Large Language Models toward reasoning ... (openaccess uoc)

Get, Create, Make and Sign benchmarking large language models

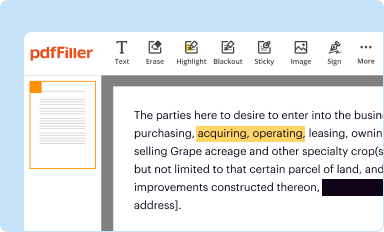

How to edit benchmarking large language models online

Uncompromising security for your PDF editing and eSignature needs

Benchmarking Large Language Models Form

Understanding large language models (LLMs)

Large language models (LLMs) are neural networks, typically built on the Transformer architecture, that are trained on very large text corpora. This training lets them understand, generate, and transform human language, so a single model can handle many natural language processing tasks. That versatility is why LLMs have become central to current AI applications.

LLMs are now used across many industries, for tasks ranging from generating coherent text and translating between languages to powering customer-service chatbots. These applications are changing how people interact with technology.

The need for benchmarking large language models

Benchmarking measures how well a model performs a defined set of tasks, using a fixed dataset and fixed metrics, so that different models, or successive versions of the same model, can be compared on equal terms. These comparisons show developers and researchers where a model falls short and guide further improvement.

Without a standardized benchmarking process, it is hard to tell whether a change to an LLM actually leads to better outcomes for users. A shared benchmark turns such claims into measurable, comparable numbers.

Benchmarking methodologies: Approaches and techniques

LLM benchmarking methods fall broadly into two groups: quantitative and qualitative.

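Quantitative methods rely on automatically computed metrics such as accuracy (the fraction of outputs that match a reference answer) and processing speed (latency per request or throughput). Qualitative assessments, typically carried out by human reviewers, judge properties that are hard to score automatically, such as the relevance and coherence of the output. The choice of methods strongly shapes the outcome: a model that scores well on strict answer matching may still produce responses that reviewers find irrelevant or hard to follow.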
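The quantitative metrics described above can be computed with a few small functions. The Python sketch below is illustrative only: `normalize`, `exact_match`, `token_f1`, and `timed` are hypothetical helper names, the normalization (lowercasing, removing ASCII punctuation, collapsing whitespace) is one simple choice among many, and token-overlap F1 is included as a partial-credit alternative to strict matching, of the kind often used in question-answering evaluation.

```python
import string
import time
from collections import Counter
from typing import Callable, Tuple, TypeVar

T = TypeVar("T")

# Translation table that deletes ASCII punctuation characters.
_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def normalize(text: str) -> str:
    """Lowercase, strip ASCII punctuation, and collapse runs of whitespace."""
    return " ".join(text.lower().translate(_PUNCT_TABLE).split())


def exact_match(prediction: str, reference: str) -> float:
    """Return 1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between prediction and reference (gives partial credit)."""
    pred = normalize(prediction).split()
    ref = normalize(reference).split()
    if not pred or not ref:
        # Both empty counts as a match; exactly one empty scores zero.
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def timed(fn: Callable[[], T]) -> Tuple[T, float]:
    """Call fn once; return its result and the wall-clock time in seconds."""
    start = time.perf_counter()
    result = fn()
    return result, time.perf_counter() - start
```

Accuracy over a dataset is then the mean of `exact_match` across examples, and processing speed can be summarized from the per-call times that `timed` returns.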

A benchmarking experiment for an LLM can be set up in three steps: define the use case, select a dataset that reflects that use case, and design an evaluation procedure that captures both quantitative scores and qualitative judgments.
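As a minimal sketch of that setup, assuming each dataset item is a prompt paired with a reference answer and that the model can be wrapped as a plain Python function from prompt to text, an evaluation loop might look like the following. `Example`, `run_benchmark`, `strict_match`, and `stub_model` are hypothetical names; `stub_model` stands in for a real model call and is not any library's API.

```python
import time
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List


@dataclass
class Example:
    """One benchmark item: the prompt sent to the model and the expected answer."""
    prompt: str
    reference: str


def run_benchmark(
    model: Callable[[str], str],
    dataset: List[Example],
    score: Callable[[str, str], float],
) -> Dict[str, float]:
    """Send every prompt to the model and report mean score and mean latency."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    scores: List[float] = []
    latencies: List[float] = []
    for ex in dataset:
        start = time.perf_counter()
        output = model(ex.prompt)
        latencies.append(time.perf_counter() - start)
        scores.append(score(output, ex.reference))
    return {
        "n": float(len(dataset)),
        "mean_score": mean(scores),
        "mean_latency_s": mean(latencies),
    }


# Hypothetical use case: short arithmetic questions, with a stub standing in
# for a real model call.
def stub_model(prompt: str) -> str:
    return "4" if "2 + 2" in prompt else "unknown"


def strict_match(output: str, reference: str) -> float:
    return float(output.strip() == reference.strip())


dataset = [Example("What is 2 + 2?", "4"), Example("What is 3 + 3?", "6")]
report = run_benchmark(stub_model, dataset, strict_match)
print(report)  # mean_score is 0.5: one of the two answers is correct
```

In a real experiment, `stub_model` would be replaced by a call to the model under test and `strict_match` by whatever metric fits the use case. Qualitative judgments would be collected separately, for example by having reviewers rate a sample of the outputs.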

Tools and frameworks for benchmarking LLMs

The right tools make benchmarking much easier to run. The OpenAI API provides programmatic access to hosted models, and the Hugging Face Transformers library loads and runs open models locally.

Both integrate easily into evaluation scripts. The wider Hugging Face ecosystem, including the Hugging Face Hub and the Datasets library, also hosts many standard benchmark datasets, and many model cards on the Hub report evaluation results, which makes these tools useful to developers and researchers alike.

Evaluation frameworks: Ensuring reliable results

Drawing reliable conclusions from an LLM benchmark means answering two separate questions: is a difference between models statistically significant, that is, unlikely to be an artifact of the particular test sample, and is it large enough to matter in practice?

Good practice when interpreting results includes checking them against established public benchmarks and inspecting model outputs on examples drawn from the intended real-world application.
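One common way to test whether a gap between two models exceeds sampling noise is a paired bootstrap over per-example scores. The sketch below assumes both models were scored on the same examples in the same order; `paired_bootstrap` is a hypothetical helper, and the 2,000 resamples and 95% interval are arbitrary defaults rather than recommended values.

```python
import random
from typing import Sequence, Tuple


def paired_bootstrap(
    scores_a: Sequence[float],
    scores_b: Sequence[float],
    n_resamples: int = 2000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, float, float]:
    """Return (observed mean of A - B, CI lower bound, CI upper bound).

    Resampling whole examples keeps each A/B pair together, so the interval
    reflects per-example differences rather than two independent samples.
    """
    if len(scores_a) != len(scores_b) or len(scores_a) == 0:
        raise ValueError("need two non-empty score lists of equal length")
    n = len(scores_a)
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    observed = sum(diffs) / n
    rng = random.Random(seed)  # fixed seed makes the interval reproducible
    boot_means = sorted(
        sum(diffs[rng.randrange(n)] for _ in range(n)) / n
        for _ in range(n_resamples)
    )
    # Percentile interval: read the alpha/2 and 1 - alpha/2 quantiles.
    lo_idx = int((alpha / 2) * n_resamples)
    hi_idx = min(n_resamples - 1, int((1 - alpha / 2) * n_resamples))
    return observed, boot_means[lo_idx], boot_means[hi_idx]
```

If the interval excludes zero, the difference is unlikely to be a sampling artifact at that confidence level. Whether a difference of that size matters in practice remains a separate judgment.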

Case studies: Successful implementations of benchmarking

Case studies show what benchmarking looks like in practice. Organizations in various sectors have used benchmarking to choose models that streamline their operations and improve user interactions.

In healthcare, for example, companies have used language models to process clinical texts and improve communication with patients, evaluating candidate models on accuracy and contextual understanding.

Best practices for ongoing benchmarking

Because new models and model versions appear frequently, benchmarking works best as a recurring routine rather than a one-off exercise. Organizations should re-run their benchmarks regularly and update them to cover new models and evaluation methods.
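A recurring benchmark is most useful when each run is compared with a stored baseline. The sketch below assumes results are kept as dictionaries mapping metric names to values; `find_regressions`, the metric names, and the single absolute tolerance are hypothetical simplifications (in practice each metric may need its own threshold).

```python
from typing import FrozenSet, List, Mapping


def find_regressions(
    baseline: Mapping[str, float],
    current: Mapping[str, float],
    tolerance: float = 0.01,
    lower_is_better: FrozenSet[str] = frozenset({"mean_latency_s"}),
) -> List[str]:
    """Return metrics that got worse than the baseline by more than `tolerance`.

    Metrics in `lower_is_better` (e.g. latency) regress when they go up;
    all others (e.g. accuracy) regress when they go down. A metric missing
    from the current run is also reported.
    """
    regressions: List[str] = []
    for name, old in baseline.items():
        if name not in current:
            regressions.append(name)
            continue
        new = current[name]
        # Positive delta means "worse", whichever direction is bad for this metric.
        delta = new - old if name in lower_is_better else old - new
        if delta > tolerance:
            regressions.append(name)
    return regressions
```

A scheduled job could run the benchmark, call `find_regressions` against the last accepted results, and block a model or prompt change when the returned list is non-empty.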

Collaboration between teams strengthens benchmarking: bringing together, for example, the engineers who run the evaluations and the domain experts who know what a good answer looks like helps keep benchmarks relevant and practical.

Future directions in benchmarking

As the capabilities of language models continue to expand, so do the metrics and methods used to benchmark them, driven by new technologies and evaluation approaches.

Future benchmarks are likely to offer more nuanced evaluations that incorporate user feedback and keep pace with models' improving language understanding.

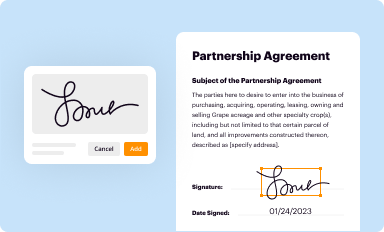

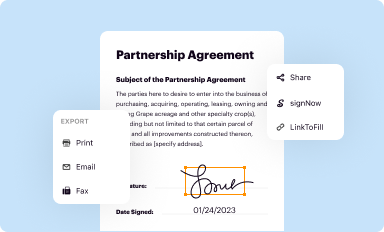


Considerations for choosing your benchmarking strategy

An LLM benchmarking approach should be tailored to the specific business need it serves. It also has to balance model performance against resource use: a larger model may score higher but cost more per request and respond more slowly, so the best choice is often the least expensive model that still meets the required quality and latency targets.
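One simple way to make this trade-off explicit is to pick the cheapest model that meets fixed quality and latency targets. In the sketch below, `Candidate` and `choose_model` are hypothetical; the fields assume you have already measured accuracy, 95th-percentile latency, and cost per 1,000 requests on your own benchmark, and "cheapest model that meets the targets" is only one possible selection policy.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Candidate:
    name: str
    accuracy: float       # fraction correct on your benchmark, 0..1
    p95_latency_s: float  # 95th-percentile response time, in seconds
    cost_per_1k: float    # cost per 1,000 requests, in your currency


def choose_model(
    candidates: Sequence[Candidate],
    min_accuracy: float,
    max_p95_latency_s: float,
) -> Optional[Candidate]:
    """Return the cheapest candidate meeting both targets, or None if none do."""
    eligible = [
        c for c in candidates
        if c.accuracy >= min_accuracy and c.p95_latency_s <= max_p95_latency_s
    ]
    if not eligible:
        return None
    # Lowest cost wins; on equal cost, prefer the more accurate model.
    return min(eligible, key=lambda c: (c.cost_per_1k, -c.accuracy))
```

Returning `None` rather than the "least bad" option forces an explicit decision when no model meets the targets, such as relaxing a threshold or evaluating further candidates.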

Organizations without in-house evaluation expertise can consider managed services, which provide support and experience with complex benchmarking setups.
