Get the free Apache Spark API By Example - La Trobe University - homepage cs latrobe edu


Apache Spark API By Example: A Command Reference for Beginners. Matthias Langer, Zhen He. Department of Computer Science and Computer Engineering, La Trobe University, Bundoora, VIC 3086, Australia. m.langer

We are not affiliated with any brand or entity on this form

Get, Create, Make and Sign apache spark api by

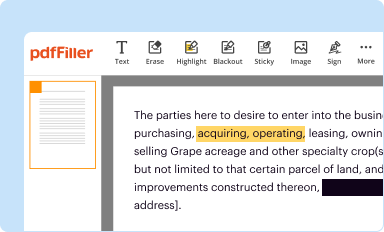

Edit your apache spark api by form online

Type text, complete fillable fields, insert images, highlight or blackout data for discretion, add comments, and more.

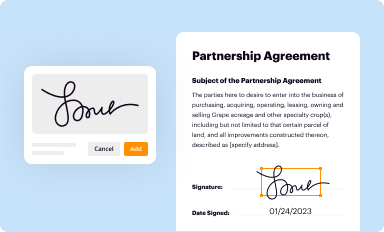

Add your legally-binding signature

Draw or type your signature, upload a signature image, or capture it with your digital camera.

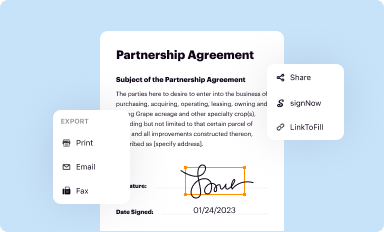

Share your form instantly

Email, fax, or share your apache spark api by form via URL. You can also download, print, or export forms to your preferred cloud storage service.

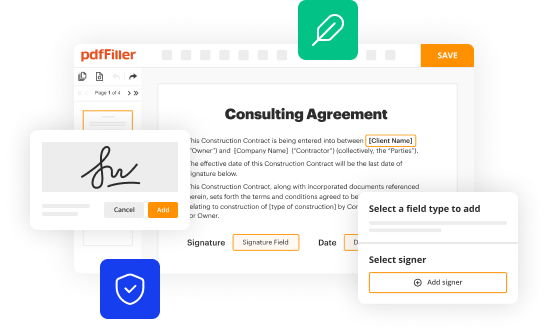


How to use the Apache Spark API

1. Start by learning the basics of Apache Spark and its core concepts, such as Resilient Distributed Datasets (RDDs), transformations, and actions.

2. Determine the specific goals or tasks you want to accomplish with Apache Spark. Identify the data sources you will be working with, such as structured data in tables, streaming data, or unstructured data.

3. Choose the programming language for your implementation. Apache Spark supports Scala, Java, Python, and R. Select the language you are most comfortable with or the one that best suits your project requirements.

4. Set up your development environment by installing Apache Spark and the necessary dependencies. Make sure the versions of Spark and its dependencies are compatible with each other so that the API works as expected.

5. Import the Apache Spark libraries into your project. Depending on the programming language, you will need to declare the relevant dependencies and add the matching import statements.

6. Familiarize yourself with the documentation and resources provided by Apache Spark. The official website offers extensive documentation, tutorials, and examples that show how to use the API effectively.

7. Begin writing code against the Apache Spark API for your specific requirements. Start by initializing a SparkSession object, which serves as the entry point for interacting with Spark.

8. Use the available APIs and functions to perform data transformations, aggregations, filtering, or any other operations your tasks require. Explore the methods Spark offers, such as map(), filter(), reduce(), and join().

9. Test and validate your code by running it against sample or real data. Check the results and adjust the code where needed.
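The coding and testing steps above can be sketched in Python. This is a minimal illustration, not a definitive implementation: the function names, the application name `api-by-example-sketch`, and the `local[*]` master are assumptions made for the example. The first function is a plain-Python stand-in that mirrors the filter, map, and reduce pipeline so the logic can be checked without a cluster; the second runs the same pipeline on an RDD and assumes that the `pyspark` package is installed.

```python
from functools import reduce


def sum_even_squares_local(numbers):
    """Plain-Python stand-in for the pipeline: no Spark required."""
    # filter() and map() return lazy iterators in Python 3, which loosely
    # mirrors how Spark transformations only describe the computation.
    evens = filter(lambda x: x % 2 == 0, numbers)
    squares = map(lambda x: x * x, evens)
    # reduce() forces evaluation, much like a Spark action does.
    return reduce(lambda a, b: a + b, squares)


def sum_even_squares_spark(numbers):
    """Same pipeline on an RDD; assumes pyspark is installed."""
    # Imported inside the function so this file still loads without pyspark.
    from pyspark.sql import SparkSession

    # SparkSession is the entry point; "local[*]" runs Spark on this machine.
    spark = (
        SparkSession.builder
        .master("local[*]")
        .appName("api-by-example-sketch")  # hypothetical application name
        .getOrCreate()
    )
    try:
        rdd = spark.sparkContext.parallelize(list(numbers))
        return (
            rdd.filter(lambda x: x % 2 == 0)   # transformation
               .map(lambda x: x * x)           # transformation
               .reduce(lambda a, b: a + b)     # action: triggers the job
        )
    finally:
        spark.stop()


if __name__ == "__main__":
    # Validate the logic on a small sample before running it on real data.
    print(sum_even_squares_local(range(1, 11)))
```

Checking the plain-Python version against a small, hand-verifiable sample first is one way to separate logic errors from environment or cluster problems when the Spark version is run later.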

Who needs the Apache Spark API?

1. Data Scientists: Apache Spark provides a powerful and scalable platform for processing massive amounts of data. It enables data scientists to perform complex data analytics, machine learning, and statistical computations on large datasets efficiently.

2. Data Engineers: Apache Spark offers extensive capabilities for data processing and manipulation. Data engineers can use the API to build data pipelines, run ETL (Extract, Transform, Load) processes, and integrate with various data sources.

3. Software Developers: The Apache Spark API allows software developers to incorporate distributed computing capabilities into their applications. It provides an interface for processing and analyzing large datasets, enabling developers to build scalable, high-performance applications.

In summary, using the Apache Spark API involves understanding its core concepts, setting up the development environment, consulting the available documentation, writing code to perform the required operations, and testing the implementation. The Apache Spark API is valuable to data scientists, data engineers, and software developers for a range of data processing and analytics tasks.


pdfFiller FAQs

Below is a list of the most common customer questions. If you can’t find an answer to your question, please don’t hesitate to reach out to us.

What is the Apache Spark API?

The Apache Spark API is a set of libraries and tools for building and deploying big data processing applications.

Who uses the Apache Spark API?

Developers and data engineers who work on big data processing projects with Apache Spark use the Apache Spark API.

How should work with the Apache Spark API be documented?

Developers should document the code, configurations, and dependencies used in their Spark applications.

What is the purpose of the Apache Spark API?

The purpose of the Apache Spark API is to provide a consistent interface for developers to interact with Spark and its components, such as RDDs, DataFrames, and Datasets.

What details of a Spark application should be recorded?

Developers should record details about their Spark applications, including data sources, transformations, actions, and configuration settings.

