R Parallel Computing Toolset: Accelerating Your Data Science Workflows

Understanding R for parallel computing

The R programming language has evolved significantly since its inception in the early 1990s, becoming a cornerstone of data science and statistical analysis. R provides robust tools for analyzing complex datasets, but most R code runs on a single core by default, so run time grows with the size of the data. Parallel computing addresses this by spreading independent pieces of work across multiple CPU cores, or across several machines on a network, so that results arrive sooner.

Parallel computing with R is particularly beneficial in data-heavy scenarios such as simulations, large-scale data analysis, and machine learning tasks, where single-threaded execution can be a bottleneck. As R continues to integrate parallel processing capabilities, users are equipped to tackle real-world challenges more effectively.

R parallel computing toolset: Key features

Within the R ecosystem, several packages facilitate parallel computing, the most notable being `parallel`, `foreach`, and `doParallel`. Together they let users run R code simultaneously on multiple processing units, which can substantially reduce computation time for demanding tasks.

The features of R’s parallel computing toolset go beyond mere execution. The packages handle communication between the main R session and its worker processes: objects can be exported to the workers, and results are collected back in input order. The toolset is also scalable, supporting tasks across multiple cores on a single machine or across nodes in a networked cluster. Because it runs wherever R runs, the same code can be moved to cloud instances when local resources are not enough.
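As a minimal sketch of that communication step, the example below uses only the base `parallel` package. The variable `scale_factor` and the worker count of two are illustrative assumptions, not values from any particular workflow.

```r
library(parallel)

# Start two local worker processes (an arbitrary count for this sketch).
cl <- makeCluster(2)

# Workers start with empty workspaces, so shared objects are sent explicitly.
scale_factor <- 10  # hypothetical value used only for illustration
clusterExport(cl, "scale_factor", envir = environment())

# Each worker applies the function to its share of the inputs;
# the results come back in the same order as the inputs.
scaled <- parSapply(cl, 1:6, function(x) x * scale_factor)

# Release the worker processes when finished.
stopCluster(cl)

print(scaled)
```

Calling `stopCluster()` at the end matters in practice: worker processes that are never released keep holding memory after the script finishes its work.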

Setting up your R parallel computing environment

To begin using the R parallel computing toolset, proper setup is essential. The first step is checking that your system can support the workload. R itself must be installed, preferably the latest stable release; an IDE such as RStudio is convenient but optional. To benefit from multiple workers you need a multi-core processor, which is standard in modern systems.

The `parallel` package ships with R and does not need to be installed. The other two packages come from CRAN and can be installed from the R console with `install.packages('foreach')` and `install.packages('doParallel')`. Configuration mostly means choosing how many workers to use: with `doParallel`, this is done by calling `registerDoParallel()` with a core count or a cluster object. A sensible worker count, usually no more than the number of available cores, helps avoid oversubscribing the machine.
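The setup steps can be sketched as follows. The CRAN mirror URL and the choice to leave one core free are assumptions made for this sketch; adjust both to your environment.

```r
# `parallel` ships with R; only the CRAN packages may need installing.
needed <- c("foreach", "doParallel")
missing_pkgs <- needed[!vapply(needed, requireNamespace, logical(1), quietly = TRUE)]
if (length(missing_pkgs) > 0) {
  install.packages(missing_pkgs, repos = "https://cloud.r-project.org")
}

library(doParallel)  # also attaches foreach and parallel

# detectCores() can return NA on some platforms, so fall back to one core.
n_cores <- parallel::detectCores()
if (is.na(n_cores)) n_cores <- 1L

# Assumption for this sketch: leave one core free for the rest of the system.
n_workers <- max(1L, n_cores - 1L)

# Create the workers and register them as the foreach backend.
cl <- makeCluster(n_workers)
registerDoParallel(cl)
registered <- getDoParWorkers()  # number of workers foreach will use

# Clean up: stop the workers and switch foreach back to sequential mode.
stopCluster(cl)
registerDoSEQ()
```

`getDoParWorkers()` is a quick way to confirm that the backend was registered with the worker count you intended.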

Building your first parallel computing application in R

As you dive into parallel computing with R, structuring your code for parallel execution is paramount. Instead of running tasks sequentially, identify segments of your code that can be processed independently. For example, iterative tasks, like applying a function over rows of a data frame, are prime candidates for parallelization.
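To illustrate the row-wise case, here is a small sketch that runs the same per-row function sequentially and in parallel. The data frame `df` and the function `row_score` are hypothetical stand-ins for a real dataset and computation.

```r
library(parallel)

# Hypothetical example data: each row is one independent unit of work.
df <- data.frame(a = 1:4, b = c(2, 4, 6, 8))

# Hypothetical per-row computation; it depends only on row i.
row_score <- function(i, data) data$a[i] * data$b[i]

# Sequential baseline.
seq_result <- vapply(seq_len(nrow(df)), row_score, numeric(1), data = df)

# Parallel version: same function and inputs, spread over two workers.
cl <- makeCluster(2)
par_result <- parSapply(cl, seq_len(nrow(df)), row_score, data = df)
stopCluster(cl)

# Both versions should agree.
stopifnot(isTRUE(all.equal(seq_result, par_result)))
```

Keeping a sequential baseline next to the parallel call is a cheap way to check that the parallel version still produces the same answer.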

A common pattern is to combine a `foreach` loop with a `doParallel` backend. For example, a series of independent simulations can be distributed across workers so that several run at once. When each simulation takes a meaningful amount of time, the total run time can drop substantially, which is where parallel execution pays off in data-intensive tasks.
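A minimal sketch of that pattern follows. The simulation itself (the mean of 1,000 normal draws), the eight repetitions, and the two workers are placeholder choices. Seeding each task with `set.seed(i)` is a simple way to make the sketch repeatable, not a recommendation for serious simulation work.

```r
library(doParallel)  # also attaches foreach and parallel

# Two local workers registered as the foreach backend.
cl <- makeCluster(2)
registerDoParallel(cl)

# Run eight independent simulations; .combine = c collects the
# per-iteration values into a single numeric vector.
results <- foreach(i = 1:8, .combine = c) %dopar% {
  set.seed(i)        # per-task seed so reruns give the same draws
  mean(rnorm(1000))  # placeholder simulation
}

# Clean up the workers and restore the sequential backend.
stopCluster(cl)
registerDoSEQ()

print(results)
```

Replacing `%dopar%` with `%do%` runs the same loop sequentially, which is useful for debugging before scaling up.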

Advanced parallel computing techniques in R

For more complex applications, distributed computing can provide substantial benefits. R supports distributed processing using frameworks like Apache Spark through the `sparklyr` package. This enables users to run R code on large clusters, making it an excellent choice for handling vast datasets or intricate computations that cannot be effectively managed on a single machine.

Real-world use cases of R parallel computing toolset

The R parallel computing toolset has been instrumental in numerous real-world applications. For instance, in a case study focusing on genomic data analysis, researchers were able to streamline their data processing workflows significantly by using the parallel computing techniques provided by R. This approach allowed them to reduce analysis time from days to mere hours.

Community contributions are vital to the continued development of R's parallel computing capabilities. Numerous open-source projects leverage these toolkits to enhance their data processing capabilities, showcasing the versatility and power of R in tackling diverse problems across fields like finance, healthcare, and technology.

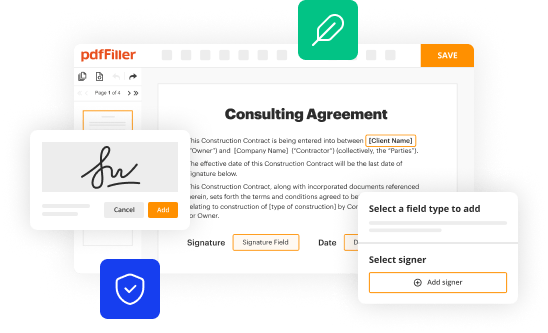

Integrating pdfFiller with R parallel computing

Integrating pdfFiller with your R parallel computing workflows can bring an entirely new level of efficiency. With R scripts, users can dynamically generate documents based on their analyses, automating the report creation process. This enables you to document your findings in a structured format without manual intervention.

Moreover, pdfFiller’s cloud-based platform enhances collaboration among team members. By sharing R-generated reports directly through pdfFiller, you can utilize its editing and eSigning features, making it simple to collaborate, review, and finalize documents. This not only saves time but also ensures a streamlined approach to documentation.

FAQs about R parallel computing toolset

Many users encounter challenges when first implementing parallel computing in R. Common issues include configuring parallel environments correctly or understanding the nuances of synchronization. One primary misconception is that simply adding parallel functions will automatically yield performance improvements. In practice, optimizing tasks for parallelization requires careful planning and an understanding of the underlying processes.
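The point about overhead can be checked directly. In the sketch below, `tiny_task` is a deliberately trivial, hypothetical function, so the cost of sending work to the workers is likely to outweigh the work itself. Timings vary by machine, so none are shown.

```r
library(parallel)

# A task far too cheap to be worth shipping to a worker.
tiny_task <- function(x) x^2
inputs <- 1:1000

cl <- makeCluster(2)
seq_time <- system.time(seq_out <- lapply(inputs, tiny_task))
par_time <- system.time(par_out <- parLapply(cl, inputs, tiny_task))
stopCluster(cl)

# Same answers either way; only the elapsed time differs.
stopifnot(identical(seq_out, par_out))

# Compare wall-clock seconds for the two approaches.
print(c(sequential = seq_time[["elapsed"]], parallel = par_time[["elapsed"]]))
```

If the parallel timing is not clearly better, the tasks are probably too small or too few; grouping them into larger chunks is one common remedy.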

Interactive tools for mastering R parallel computing

To deepen your understanding of R parallel computing, numerous resources are available, including webinars and online workshops that explore advanced topics. Engaging in these sessions can provide insights into best practices and innovative use cases directly from industry experts.
