Get the free State-of-the-Art Deep Learning Architectures for Identifying ...

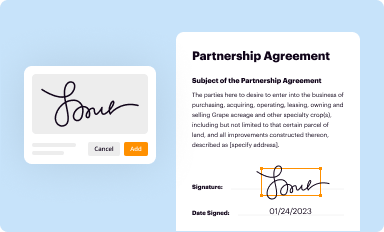

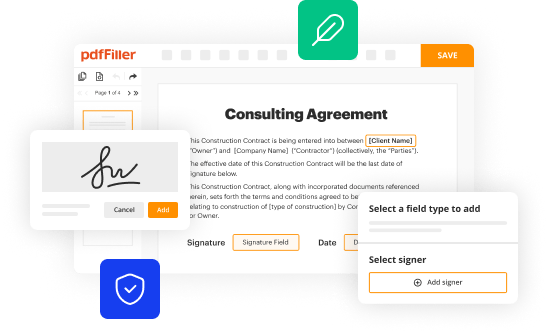

Get, Create, Make and Sign state-of-form-art deep learning architectures

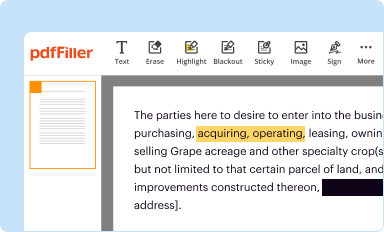

Editing state-of-form-art deep learning architectures online

Uncompromising security for your PDF editing and eSignature needs

How to fill out state-of-form-art deep learning architectures

How to fill out state-of-form-art deep learning architectures

Who needs state-of-form-art deep learning architectures?

State-of-the-art deep learning architectures

Understanding deep learning architectures

Deep learning is a subfield of machine learning built on artificial neural networks with many layers, a family of algorithms loosely inspired by the structure and function of the human brain. It powers high-impact applications such as image recognition, language translation, and autonomous driving. To understand today's state-of-the-art deep learning architectures, it helps to know how they evolved and the foundational concepts that led to their development.

Key terminology in deep learning

When delving into deep learning, several terms come up frequently. Neural networks are the backbone of these architectures: they consist of layers of interconnected nodes (neurons). Each node computes a weighted sum of its inputs plus a bias and passes the result through an activation function, such as ReLU or the sigmoid, which determines the node's output. Because these activation functions are nonlinear, stacking many layers allows deep networks to model complex relationships in data.
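To make layers, nodes, and activation functions concrete, here is a minimal pure-Python sketch of a forward pass through two fully connected layers. The weights, biases, and the helper names `dense_layer`, `relu`, and `sigmoid` are illustrative assumptions, not taken from any particular library; real frameworks vectorize this computation over matrices.

```python
import math

def relu(x):
    # Rectified linear unit: passes positive values, zeroes out negatives.
    return max(0.0, x)

def sigmoid(x):
    # Logistic function: squashes any real number into the range (0, 1).
    return 1.0 / (1.0 + math.exp(-x))

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: every node takes a weighted sum of all
    inputs, adds its bias, and applies the activation function."""
    outputs = []
    for node_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(node_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

# A tiny two-layer network with hand-picked (hypothetical) weights.
x = [1.0, -2.0]
hidden = dense_layer(x, weights=[[0.5, 0.25], [1.0, -0.5]],
                     biases=[0.0, 0.0], activation=relu)
output = dense_layer(hidden, weights=[[1.0, -0.5]],
                     biases=[1.0], activation=sigmoid)
print(hidden, output)
```

The first hidden node's weighted sum is 0, so ReLU outputs 0; the second is 2. Without the nonlinear activations, the two layers would collapse into a single linear map.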

Overview of state-of-the-art architectures

Several deep learning architectures stand out for their performance and versatility. Convolutional Neural Networks (CNNs) are used primarily for image-related tasks because they process pixel data hierarchically, building up from edges and textures to shapes and objects. They extract features by sliding small learned filters (kernels) across the image; because the same filter is applied at every position, a pattern is detected wherever it appears, and together with pooling this makes CNNs fairly robust to small shifts and distortions. These strengths make CNNs a common choice in medical imaging, facial recognition, and video analysis.
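The sliding-filter idea can be sketched in a few lines of Python. The function below is a hypothetical, unoptimized "valid" convolution (strictly a cross-correlation, which is what CNN layers in common frameworks compute); the 2x2 vertical-edge kernel is hand-picked for illustration, whereas a CNN learns its kernels during training.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (cross-correlation: the kernel is not flipped).
    The output shrinks by kernel_size - 1 in each dimension."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel at (i, j).
            s = 0.0
            for di in range(kh):
                for dj in range(kw):
                    s += image[i + di][j + dj] * kernel[di][dj]
            row.append(s)
        out.append(row)
    return out

# Hand-picked vertical-edge detector: responds where pixels go dark -> bright.
kernel = [[-1, 1],
          [-1, 1]]
image = [[0, 0, 1, 1, 1] for _ in range(4)]    # edge between columns 1 and 2
shifted = [[0, 0, 0, 1, 1] for _ in range(4)]  # same edge, one column right

print(conv2d(image, kernel))
print(conv2d(shifted, kernel))
```

Shifting the edge one column to the right shifts the peak response by one column: the filter finds the pattern wherever it is. Pooling layers, not shown here, then summarize nearby responses, which is where much of the tolerance to small shifts comes from.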

Recurrent Neural Networks (RNNs) and Long Short-Term Memory networks (LSTMs), on the other hand, are designed to handle sequential data, which makes them well suited to natural language processing and time series forecasting. An RNN feeds its hidden state back into itself at each step, so information from earlier inputs persists across the sequence. LSTMs extend this with gated memory cells that mitigate the vanishing-gradient problem, allowing them to retain information over much longer sequences.
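A minimal sketch of both recurrences on scalar inputs follows. The function names, the parameter dictionary layout, and the hand-set weights are assumptions for illustration; real RNN and LSTM layers use learned weight matrices over vectors. The example contrasts a vanilla RNN whose recurrent weight shrinks its state at every step with an LSTM whose forget gate is held open, starting from a cell state that already stores the value 1.0.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Vanilla RNN: the previous hidden state h is fed back in at every step."""
    h = h0
    states = []
    for x in xs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step. p maps each gate name to a (w_x, w_h, b) triple
    (a hypothetical layout). The cell state c is updated additively."""
    def gate(name, act):
        w_x, w_h, b = p[name]
        return act(w_x * x + w_h * h_prev + b)
    f = gate("forget", sigmoid)       # how much of the old cell state to keep
    i = gate("input", sigmoid)        # how much new information to write
    o = gate("output", sigmoid)       # how much of the cell to expose as h
    g = gate("candidate", math.tanh)  # the new information itself
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c

# Vanilla RNN: a single input of 1.0 followed by 99 zeros fades geometrically.
states = rnn_forward([1.0] + [0.0] * 99, w_x=1.0, w_h=0.5, b=0.0)

# LSTM with the forget gate saturated open and the input gate closed.
params = {
    "forget": (0.0, 0.0, 20.0),
    "input": (0.0, 0.0, -20.0),
    "output": (0.0, 0.0, 20.0),
    "candidate": (0.0, 0.0, 0.0),
}
h, c = 0.0, 1.0
for _ in range(100):
    h, c = lstm_step(0.0, h, c, params)

print(states[-1], c)
```

This is a forward-pass illustration only. The same additive, gated cell update is also what lets gradients flow across many time steps during training, which is how LSTMs mitigate vanishing gradients.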

Transformer models

Transformers have revolutionized natural language processing by processing all positions of a sequence in parallel rather than one step at a time, as RNNs do. The architecture relies on self-attention, which lets the model weigh the influence of every word in a sequence on every other word, regardless of how far apart they are. As a result, transformers have dramatically improved applications such as translation, sentiment analysis, and content generation.
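Scaled dot-product self-attention, the core operation of the Transformer, computes softmax(Q K^T / sqrt(d)) V. For brevity, the hypothetical `self_attention` helper below uses the input vectors directly as queries, keys, and values; a real Transformer applies learned projections, uses multiple attention heads, and adds positional encodings.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(xs):
    """Scaled dot-product self-attention with queries = keys = values = xs.
    Returns the output vectors and the attention weight matrix."""
    d = len(xs[0])
    outputs, all_weights = [], []
    for q in xs:
        # Score this query against every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in xs]
        weights = softmax(scores)
        # Output = attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, xs)) for j in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy "token embeddings"
outputs, weights = self_attention(xs)
rev_outputs, _ = self_attention(xs[::-1])
for row in weights:
    print([round(w, 3) for w in row])
```

Each query's scores are computed independently of the others, which is what allows a whole sequence to be processed in parallel. Without positional encodings, reversing the input sequence simply reverses the outputs: the attention weights depend on content, not on position.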

Generative adversarial networks (GANs)

GANs take a distinctive approach to training: two neural networks, a generator and a discriminator, compete against each other. The generator produces synthetic samples, and the discriminator tries to distinguish them from real data; as each improves, the generator is pushed toward highly realistic output. GANs are prominent in image generation, video production, and even the creative arts, and they have been used for high-resolution image synthesis and deepfakes.
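The adversarial objective can be sketched as two binary cross-entropy losses computed from the discriminator's output probabilities. This is a simplification under stated assumptions: it uses the widely used non-saturating generator loss rather than the original minimax form, the probabilities are made-up numbers, and no networks are actually trained.

```python
import math

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy: the discriminator wants D(x) -> 1 on real data
    and D(G(z)) -> 0 on generated data. eps guards against log(0)."""
    real_term = -sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: the generator wants D(G(z)) -> 1."""
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that a sample is real).
d_real = [0.9, 0.95]   # real samples, confidently recognized
d_fake = [0.1, 0.05]   # generated samples, easily spotted

print(discriminator_loss(d_real, d_fake))  # low: the discriminator is winning
print(generator_loss(d_fake))              # high: the generator must improve
```

When the discriminator can no longer tell real from generated samples (outputs of 0.5 everywhere), its loss settles at 2 ln 2, the equilibrium the adversarial game aims for.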

Comparative analysis of architectures

When evaluating deep learning architectures, performance metrics are central. Accuracy, measured on held-out data the model did not see during training, indicates how well a classifier generalizes, while training time reflects how efficiently a model can be built. Scalability and flexibility also matter, because they determine whether a model can be adapted to various tasks without extensive redevelopment.
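A minimal sketch of measuring held-out accuracy and training time in Python follows. The "model" is a hypothetical majority-class baseline standing in for a real training loop, and the labels are toy data.

```python
import time
from collections import Counter

def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for p, y in zip(predictions, labels) if p == y)
    return correct / len(labels)

def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start

def train_majority_classifier(train_labels):
    # Hypothetical stand-in for a real training loop: the "model" always
    # predicts the most common label seen during training.
    majority = Counter(train_labels).most_common(1)[0][0]
    return lambda x: majority

train_labels = ["cat", "cat", "dog"]
test_inputs = ["img1", "img2", "img3", "img4"]  # placeholder inputs
test_labels = ["cat", "dog", "cat", "cat"]      # held out from training

model, train_seconds = timed(train_majority_classifier, train_labels)
test_accuracy = accuracy([model(x) for x in test_inputs], test_labels)
print(f"accuracy={test_accuracy:.2f}, training took {train_seconds:.6f}s")
```

Comparing an architecture against a trivial baseline like this one is a quick check that its reported accuracy reflects learning rather than class imbalance in the test set.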

Different architectures show strengths in different domains. For instance, CNNs excel in computer vision, while RNNs and LSTMs have been widely used for speech recognition and other sequence tasks. Understanding these strengths helps in selecting an appropriate architecture for a given application.

Tools for implementing deep learning architectures

A variety of frameworks and libraries are available for developing deep learning models. TensorFlow is a widely used open-source library that offers flexibility and scalability for large-scale applications. PyTorch, another popular choice, is known for its dynamic computation graph, which makes it convenient for research and debugging. Keras, a high-level API that runs on top of TensorFlow (and, since Keras 3, also on JAX and PyTorch), simplifies building complex models and enables rapid prototyping.

When it comes to hardware, selecting the right computational resources is crucial. Graphics Processing Units (GPUs) train deep networks much faster than traditional Central Processing Units (CPUs) because they execute the underlying matrix operations in parallel. Cloud-based solutions add scalability and flexibility, letting teams run experiments and handle heavy computation without extensive local hardware.

Best practices for building and training deep learning models

Effective data preparation is essential for building robust deep learning models, since a model can only learn patterns that its data accurately reflects. Techniques such as data cleaning (handling missing or erroneous values) and normalization (rescaling features to comparable ranges) are critical in this phase, as they directly affect the model's ability to generalize from training data to unseen data.
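A minimal sketch of cleaning and z-score normalization on a single numeric feature follows. The helper names and toy values are illustrative assumptions. The key design choice is that the mean and standard deviation are computed on the training data only and then reused for unseen data, so no information leaks from the test set into training.

```python
import math

def clean(values):
    """Drop missing entries (None) and NaNs, a minimal form of data cleaning."""
    return [v for v in values if v is not None and not math.isnan(v)]

def fit_standardizer(column):
    """Compute mean and (population) standard deviation from training data."""
    mean = sum(column) / len(column)
    var = sum((v - mean) ** 2 for v in column) / len(column)
    std = math.sqrt(var) or 1.0  # guard against a constant column
    return mean, std

def standardize(column, mean, std):
    """Rescale to zero mean and unit variance using the training statistics."""
    return [(v - mean) / std for v in column]

raw_train = [2.0, None, 4.0, float("nan"), 6.0, 8.0]
train = clean(raw_train)                  # [2.0, 4.0, 6.0, 8.0]
mean, std = fit_standardizer(train)
train_scaled = standardize(train, mean, std)
test_scaled = standardize([5.0, 10.0], mean, std)  # reuse train statistics
print(train_scaled, test_scaled)
```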

Hyperparameter tuning plays a vital role in optimizing model performance. It involves adjusting settings that are chosen before training rather than learned, such as the learning rate and batch size, to improve training efficiency and reduce overfitting. Robust validation techniques such as k-fold cross-validation, which trains and evaluates the model on several different data splits, give a more reliable estimate of performance than a single split.
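The sketch below combines a small grid search over the learning rate with 5-fold cross-validation. To stay self-contained, it tunes a one-parameter linear model fitted by full-batch gradient descent on toy data with a true slope of 2. The model, data, grid, and helper names are all hypothetical, and batch size is not tuned because every step uses the full training fold.

```python
def k_fold_splits(n, k):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, val
        start += size

def fit_slope(xs, ys, lr, epochs):
    """Fit y ~ w * x by full-batch gradient descent on mean squared error."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad = 2.0 / n * sum(x * (w * x - y) for x, y in zip(xs, ys))
        w -= lr * grad
    return w

def cv_score(xs, ys, lr, epochs=50, k=5):
    """Average validation MSE across k folds for one learning rate."""
    scores = []
    for train, val in k_fold_splits(len(xs), k):
        w = fit_slope([xs[i] for i in train], [ys[i] for i in train], lr, epochs)
        errs = [w * xs[i] - ys[i] for i in val]
        scores.append(sum(e * e for e in errs) / len(errs))
    return sum(scores) / len(scores)

xs = [float(i) for i in range(1, 11)]
ys = [2.0 * x for x in xs]  # toy data with a true slope of 2
results = {lr: cv_score(xs, ys, lr) for lr in (0.001, 0.01, 0.05)}
best_lr = min(results, key=results.get)
print(results, "best:", best_lr)
```

With these settings, the smallest learning rate converges too slowly within the fixed number of epochs and the largest diverges; because each setting is scored only on folds it was not fitted to, cross-validation exposes both failure modes.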

Challenges in deep learning

Selecting the right architecture can be daunting, particularly when many architectures claim to excel at varied tasks. A clear understanding of the problem at hand (for example, whether the data consists of images, sequences, or text) and of each model's strengths leads to informed decisions and helps avoid common pitfalls.

Ethical considerations play a crucial role in AI development and require ongoing attention to bias in training datasets. Understanding where biases emerge and implementing strategies to mitigate their impact helps promote equitable outcomes and builds trust in AI systems.
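One concrete way to see where bias emerges is to break an evaluation metric down by subgroup instead of reporting a single overall number. The hypothetical helper below computes per-group accuracy; the predictions, labels, and group names are toy data.

```python
from collections import defaultdict

def accuracy_by_group(predictions, labels, groups):
    """Accuracy computed separately for each subgroup."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for p, y, g in zip(predictions, labels, groups):
        total[g] += 1
        correct[g] += int(p == y)
    return {g: correct[g] / total[g] for g in total}

preds = [1, 0, 1, 1, 0, 1, 0, 0]
labels = [1, 0, 1, 1, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

per_group = accuracy_by_group(preds, labels, groups)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, "gap:", gap)
```

Here overall accuracy is 0.625, yet the model is perfect on group A and right only a quarter of the time on group B, a disparity the aggregate figure hides.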

Future directions in deep learning architectures

Emerging trends point toward greater integration of explainable AI (XAI) to make deep learning models more transparent. As systems grow more complex, understanding how they reach decisions becomes crucial for both developers and end users. In addition, advances in real-time processing are enabling low-latency, on-the-fly predictions, which benefits applications such as autonomous systems and live analytics.

Looking further ahead, quantum computing may offer a transformative opportunity for deep learning. Researchers are exploring whether quantum principles could significantly speed up training and enable new problem-solving capabilities. Cross-disciplinary applications that combine insights from various fields are also likely to emerge and drive innovation.

Interactive tools and resources

For practitioners looking to explore deep learning, several online platforms support experimentation and knowledge sharing. Google Colab lets users run Python code in hosted Jupyter notebooks directly in the browser, with options for collaboration and GPU access. Kaggle Notebooks offers a community-driven environment where users can access datasets, share code, and take part in competitions, which makes it a good fit for aspiring data scientists.

Comprehensive guides and tutorials are instrumental for beginners and professionals alike. Step-by-step tutorials facilitate a solid understanding of foundational concepts, while advanced techniques cater to experienced users looking to refine their skills or explore newer methodologies.

How pdfFiller enhances document management in deep learning projects

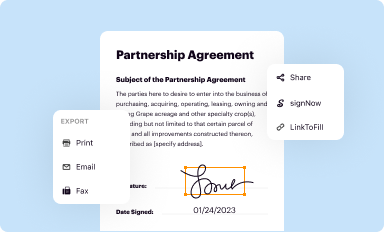

In the context of deep learning projects, effective document management plays a crucial role in enhancing collaboration and efficiency. pdfFiller offers a platform tailored to meet the needs of teams engaged in developing deep learning architectures. With real-time document editing capabilities, teams can work simultaneously on project documents, significantly improving workflow and collaboration.

Accessibility is another hallmark of pdfFiller, allowing users to manage and edit documents from anywhere. The cloud-based solution ensures mobile accessibility, enabling teams to remain productive irrespective of their location. Moreover, stringent security measures help maintain compliance and safeguard sensitive data, solidifying pdfFiller's position as a reliable tool for sophisticated document management.
